Join Shane Gibson as he chats with Roelant Vos about a number of Data Engine Thinking patterns and his new book, Data Engine Thinking.

Listen

Listen on all good podcast hosts or over at:

https://podcast.agiledata.io/e/data-engine-thinking-patterns-with-roelant-vos-episode-66/

Subscribe: Apple Podcast | Spotify | Google Podcast | Amazon Audible | TuneIn | iHeartRadio | PlayerFM | Listen Notes | Podchaser | Deezer | Podcast Addict

Buy the Data Engine Thinking Book

https://dataenginethinking.com/en/

Tired of vague data requests and endless requirement meetings? The Information Product Canvas helps you get clarity in 30 minutes or less.

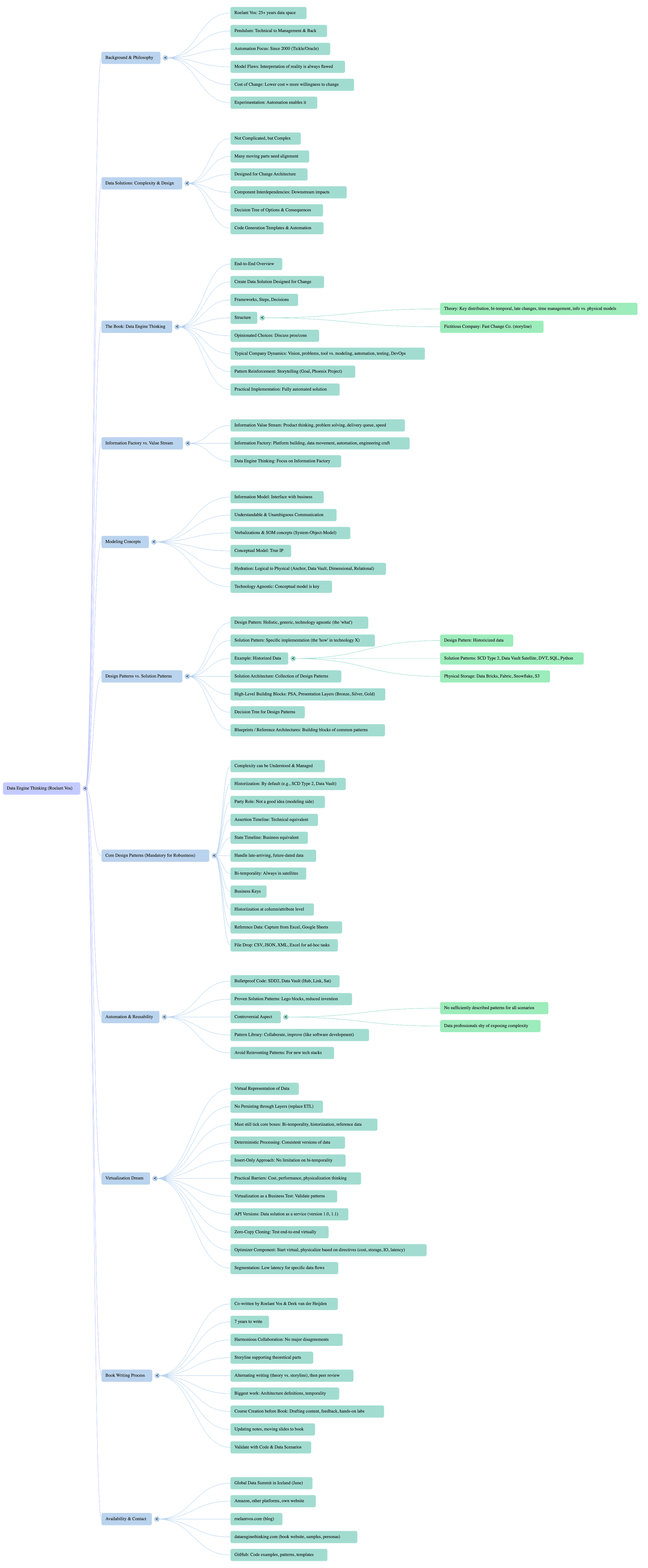

Google NotebookLM Mindmap

Google NotebookLM Briefing

Detailed Briefing Document: "Data Engine Thinking: Automating the Data Solution"

Source: Excerpts from "Data Engine Thinking: Automating the Data Solution" – A podcast interview with Roelant Vos (co-author) by Shane Gibson.

Date: June 19th, 2025

Key Speakers:

Roelant Vos: Co-author of "Data Engine Thinking," experienced data professional (25+ years), with a strong focus on automation and technical data management.

Shane Gibson: Host of the Agile Data Podcast.

1. Core Concept: "Data Engine Thinking" and the "Information Factory"

The central theme of the book and of the podcast discussion is an end-to-end approach to building data solutions that are inherently "designed for change." This is contrasted with traditional, often manual, data practices.

Designed for Change: The fundamental goal is to create data solutions that can easily adapt to evolving business needs, data models, and technological landscapes. This is achieved primarily through automation and pattern-based design.

Information Factory vs. Information Value Stream: Shane Gibson introduces two concepts:

Information Value Stream: Focuses on the "product thinking" side – identifying problems, ideating solutions, prioritising work, and delivering value to stakeholders.

Information Factory: Focuses on the "platforms that support that work, and the way we move data through it all the way from collection through to consumption." Roelant confirms that "Data Engine Thinking" is "definitely more in the information factory area." This highlights the book's focus on the underlying architecture and automation capabilities rather than business process design.

2. The Imperative of Automation and Lowering the Cost of Change

A recurring and foundational idea is that automation is crucial for agility and innovation in data.

Historical Context: Roelant's journey over 25 years has consistently focused on automation, starting as early as 2000 with Oracle Warehouse Builder and the Tcl (pronounced "tickle") scripting language. He notes, "Absolutely. Yep. I actually started that in 2000... Working with Oracle Warehouse Builder, may it rest in peace, we used this Tcl language to try to automate things."

Enabling Experimentation and Risk-Taking: Shane eloquently summarises the core benefit: "if the cost of change is lower, then we are more willing to change... We can manage more, change more often. We can iterate more often. We can take more risks. We can make earlier guesses because we know the cost of refining that guess in the future is lower than if everything was manual."

Addressing Flawed Models: Data models are "always going to be flawed" because initial interpretations of reality are incomplete. As understanding grows, models need refinement. Automation makes this refinement cost-effective: "The more you learn, the more you want to refine that model. And then you have to go back every time to update your code base. And that's why automation is such a critical thing."

Overcoming Complexity: Data solutions are described as "not complicated, but they're complex," due to "a lot of tiny moving bits and pieces." Automation is the key to managing this inherent complexity.

3. Pattern-Based Design: Design Patterns vs. Solution Patterns

A core distinction made in the book is between "design patterns" and "solution patterns," which provides a structured approach to building robust data systems.

Design Patterns (The "What"): These are conceptual, holistic, and technology-agnostic. They define "what do we need to do, how should it work, what are the conceptual boxes that we tick." Examples include:

Historizing Data: The need to capture and store every historical view or instantiation of data over time. This is considered mandatory: "you always want to bring in a historicization pattern into your platform on day one... because you are gonna need it."

Bitemporality: The complex concept of managing both an "assertion timeline" (technical timestamp) and a "state timeline" (business validity). Roelant states, "you have to have these two timelines in place at all times and solve the problems associated with them, like the bitemporal problems."

Reference Data Management: The ability to capture and iterate on lookup data that does not originate from source systems (e.g., Excel, Google Sheets).

File Drop Capabilities: The ability to ingest ad-hoc files (CSV, JSON, XML, Excel) into the platform.
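To make the two timelines concrete, here is a minimal Python sketch of an insert-only, bitemporal store. It is an illustration under stated assumptions, not the book's implementation: it keeps one historized attribute per business key, and the names `Row`, `BitemporalStore` and `as_of` are hypothetical. `state_from` stands for the state timeline (when something is true for the business) and `asserted_at` for the assertion timeline (when the platform learned about it). Keeping both is what lets a late-arriving change be handled without updating or deleting earlier rows.

```python
from dataclasses import dataclass
from datetime import date, datetime
from typing import List, Optional


@dataclass(frozen=True)
class Row:
    key: str               # business key (hypothetical example: a customer number)
    value: str             # the single historized attribute in this sketch
    state_from: date       # state timeline: from when this is true for the business
    asserted_at: datetime  # assertion timeline: when the platform recorded it


class BitemporalStore:
    """Insert-only store (sketch): rows are appended, never updated or deleted."""

    def __init__(self) -> None:
        self._rows: List[Row] = []

    def insert(self, row: Row) -> None:
        self._rows.append(row)

    def as_of(self, key: str, state_date: date, known_at: datetime) -> Optional[str]:
        """Value of `key` on `state_date`, as the platform knew it at `known_at`."""
        candidates = [
            r for r in self._rows
            if r.key == key and r.asserted_at <= known_at and r.state_from <= state_date
        ]
        if not candidates:
            return None
        # The latest business state wins; for equal state dates the latest
        # assertion (i.e. a correction) wins.
        return max(candidates, key=lambda r: (r.state_from, r.asserted_at)).value


store = BitemporalStore()
store.insert(Row("C1", "Sydney", date(2024, 1, 1), datetime(2024, 1, 2)))
# Late-arriving change: a move effective 1 March is only loaded on 10 April.
store.insert(Row("C1", "Melbourne", date(2024, 3, 1), datetime(2024, 4, 10)))

print(store.as_of("C1", date(2024, 3, 15), datetime(2024, 4, 1)))   # Sydney (not yet known)
print(store.as_of("C1", date(2024, 3, 15), datetime(2024, 4, 30)))  # Melbourne
```

Asking the same business question (where was C1 on 15 March?) at two different assertion times gives two different, and both correct, answers. That is the behaviour a single timeline cannot provide.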

Solution Patterns (The "How"): These are specific implementations of design patterns on a given technology stack. They involve the concrete choices of how a design pattern will be realised.

Examples: For historizing data, solution patterns could be SCD Type 2 dimensions, Data Vault satellites, or specific implementations in dbt, Oracle Warehouse Builder, SQL, Python, etc.

Technology Agnostic Design: The goal is that the core design pattern (e.g., historization) remains constant, but the "physical modeling can change depending on the technology, the user, the tools, all those constraints." The "technology becomes less of a... Yes, you need to optimize it, but it's also not really where the IP resides."

Mandatory Design Patterns: Roelant argues that certain design patterns are "mandatory" because "to truly work with data in your organization, there's no avoiding them. Sooner or later, you will run into these problems, so you might as well tackle it upfront."

Bulletproofing and Reusability: The aim is to create "bulletproof" and "reusable" code for common solution patterns, similar to how Data Vault hub/link/satellite loading code can be hardened. This reduces manual effort and increases reliability.
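The split between a design pattern and its solution patterns can be sketched as code generation: one technology-agnostic specification of what to historize, rendered by interchangeable templates. This is a minimal sketch, not the book's code. Everything in it is hypothetical, including `HistorizeSpec`, the template functions, the column names and the generic SQL types. It only shows that refining the model means changing metadata and regenerating, rather than hand-editing every table.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class HistorizeSpec:
    """Design pattern input (hypothetical): *what* to historize, no technology choices."""
    entity: str
    business_key: str
    attributes: List[str]


def _ddl(table: str, columns: List[str]) -> str:
    # Render a simple CREATE TABLE statement; the generic SQL types are an assumption.
    return f"CREATE TABLE {table} (\n  " + ",\n  ".join(columns) + "\n);"


def scd2_dimension(spec: HistorizeSpec) -> str:
    # Solution pattern 1: SCD Type 2 dimension with a surrogate key and validity columns.
    columns = [f"{spec.entity}_sk BIGINT", f"{spec.business_key} VARCHAR"]
    columns += [f"{a} VARCHAR" for a in spec.attributes]
    columns += ["valid_from TIMESTAMP", "valid_to TIMESTAMP", "is_current BOOLEAN"]
    return _ddl(f"dim_{spec.entity}", columns)


def dv_satellite(spec: HistorizeSpec) -> str:
    # Solution pattern 2: Data Vault satellite keyed on a hub hash key plus load timestamp.
    columns = [f"{spec.entity}_hk CHAR(32)", "load_ts TIMESTAMP", "hash_diff CHAR(32)"]
    columns += [f"{a} VARCHAR" for a in spec.attributes]
    return _ddl(f"sat_{spec.entity}", columns)


# Registry of interchangeable solution patterns for the same design pattern.
SOLUTION_PATTERNS: Dict[str, Callable[[HistorizeSpec], str]] = {
    "scd2": scd2_dimension,
    "satellite": dv_satellite,
}


def generate(spec: HistorizeSpec, choice: str) -> str:
    """Pick a solution pattern by configuration and render it for the given spec."""
    return SOLUTION_PATTERNS[choice](spec)


customer = HistorizeSpec("customer", "customer_id", ["name", "city"])
for choice in SOLUTION_PATTERNS:
    print(generate(customer, choice))
```

Adding an attribute to `customer` and re-running `generate` would regenerate both variants. Moving to another platform would mean adding or swapping a template, while the specification, where the IP sits, stays the same.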

4. The "Engine" Concept and Future State

The book envisions a highly automated "Data Engine" that can intelligently manage and optimise data solutions.

Optimizer Component: A key component of the "engine" is an "optimizer." This allows the system to "calculate based on use patterns, what the best combination of physical, virtual objects are to deliver that" based on directives like "cost storage, IO latency." This means dynamically choosing whether to virtualize or physicalize data based on specific performance or cost requirements.

Config-Driven Platforms: The ideal future state is a fully configuration-driven, end-to-end platform where users can "pull up the design patterns, you tick some boxes for them, pull up the constraints around which technologies it's allowed to use, and it actually writes the solution patterns and the code and deploys itself."

Virtualization as a Test: Roelant suggests that virtualization, even if not physically implemented, serves as a test of the robustness of patterns: "If you can do it [virtualize], you can also physicalize... but if you can't virtualize it, then something's wrong with the patterns."
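The optimizer and the virtualization test can be illustrated together. The sketch below is a toy cost model with made-up inputs (`reads_per_day`, `query_cost`, `storage_cost`, `max_latency_cost`), all hypothetical. A real optimizer would weigh directives such as cost, storage, IO and latency using measured data. The generated SQL uses the `CREATE OR REPLACE ... AS` form found in platforms such as Snowflake and Databricks, and that dialect is an assumption. Both options wrap the same `SELECT`, so logic that works as a view can also be materialized.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectProfile:
    """Hypothetical usage profile for one generated object."""
    name: str
    select_sql: str       # logic produced by a solution pattern
    reads_per_day: int    # observed usage
    query_cost: float     # relative cost of computing the SELECT once
    storage_cost: float   # relative daily cost of storing and refreshing a copy


def choose_deployment(p: ObjectProfile, max_latency_cost: float) -> str:
    """Toy optimizer: materialize when repeated computation costs more than storage,
    or when a single on-the-fly read would exceed the latency budget."""
    virtual_cost = p.reads_per_day * p.query_cost
    if virtual_cost > p.storage_cost or p.query_cost > max_latency_cost:
        return "physical"
    return "virtual"


def render(p: ObjectProfile, mode: str) -> str:
    # Same SELECT either way: if it works as a view, it can be materialized too.
    kind = "VIEW" if mode == "virtual" else "TABLE"
    return f"CREATE OR REPLACE {kind} {p.name} AS {p.select_sql};"


hot = ObjectProfile("dim_customer", "SELECT * FROM sat_customer", 500, 2.0, 50.0)
cold = ObjectProfile("dim_region", "SELECT * FROM sat_region", 3, 1.0, 20.0)
for obj in (hot, cold):
    print(render(obj, choose_deployment(obj, max_latency_cost=5.0)))
```

With these made-up numbers, `dim_customer` is materialized as a table (500 reads at 2.0 each exceed the storage cost of 50.0), while `dim_region` stays a view. Changing the directives changes the deployment without touching the underlying pattern.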

5. Narrative Approach and Practical Application

The authors employ a specific narrative strategy to make the complex concepts more accessible and actionable.

Fictitious Company ("FCOM"): The book uses a fictitious company to embed theory within a story. This lets the authors "take that emotional aspect and put it into the book storyline so we can have that conversation about pros and cons and what it means." This approach echoes books like "The Goal" and "The Phoenix Project," where patterns are revealed through a narrative.

Practical Implementation: The book is designed to be highly practical, allowing readers to "implement a fully automated solution themselves."

GitHub Repository: Complementing the book, a GitHub repository will be launched with "all these code examples and patterns and things you can run yourself, and templates and everything." This aims to foster collaboration and provide concrete implementation examples.

6. Challenges and Learnings in Writing the Book

The podcast touches upon the significant effort involved in synthesising 25+ years of experience into a coherent framework.

Seven Years in the Making: The book has taken "almost seven years" to write, highlighting the complexity of the undertaking.

Collaborative and Harmonious Process: Despite co-authorship, Roelant notes remarkable agreement between himself and Dirk, leading to a "very harmonious" writing process. Disagreements were rare; instead, there were moments of "didn't know something yet" leading to further exploration and coding.

Valuable Role of Training: Creating course material and training people before writing the book proved invaluable. Roelant states, "at some point I started recording the trainings for that purpose. And then after the training sessions, I was updating the notes in the slide decks to find the right words. And that all made it into the book." This iterative process of teaching, getting feedback, and refining content directly informed the book's clarity and structure.

7. Antipatterns and Dogmatism

The discussion subtly highlights issues in current data practices that "Data Engine Thinking" aims to address.

Handcrafting vs. Automation: A major antipattern identified is the tendency for data professionals to "like to handcraft things oddly." This is contrasted with the software domain's adoption of templated, automated deployments (e.g., Terraform).

Reinventing the Wheel: When changing technology stacks (e.g., SQL Server to Snowflake to Databricks), data teams often "reinvent all the patterns again... That's just crazy." The book seeks to provide a shared, reusable library to combat this waste.

Avoidance of Complexity: Data professionals can be "a bit shy of exposing too much complexity if they can avoid it," opting for "simpler patterns" that may cause issues later. The book argues for upfront embrace of necessary complexity, managed through automation.

Dogmatic Approaches: The shift away from rigid adherence to single methodologies (e.g., Inmon vs. Kimball, Data Vault vs. Dimensional) is acknowledged, promoting flexibility based on context.

Conclusion:

"Data Engine Thinking" proposes a paradigm shift in data solution development, moving away from manual, ad-hoc, and project-specific builds towards automated, pattern-based, and inherently adaptable systems. By clearly defining "design patterns" (the what) and "solution patterns" (the how), and advocating for their codification and reusability, the book aims to lower the cost of change, increase agility, and ultimately build more trustworthy and future-proof data platforms. The authors' extensive experience and practical, code-backed approach suggest a significant contribution to standardising and industrialising data engineering practices.


Transcript

Shane: Welcome to the Agile Data Podcast. I’m Shane Gibson.

Roelant: Hey, I’m Roelant Vos. Thanks for having me.

Shane: Hey, Roelant. Thank you for coming on the show. Today we are going to talk about the patterns that are in your book, Data Engine Thinking, but before we do that, why don’t you give the audience a bit of background about yourself?

Roelant: Yeah, sure. Thanks, Shane. I’m a Dutch national, but I’ve been living in Australia for almost 17 years now, so I’ve got this weird blend of accents, and I’ll try not to bother too much with it. I’ve been working in the data space for more than 25 years, so I’m a pretty technical guy. I started with computer science and then got my first job as a Cognos consultant, building reports and cubes and everything.

I quickly realized that building these reports is good fun and it’s important, and obviously it’s what people often consume their data with, but the issues that you find in the data you can’t easily, or shouldn’t, fix on the reporting side. So slowly but surely I descended into the depths of data management, ETL and data integration, all the way down to database administration, the real hardcore, technical, low-level stuff.

And then I slowly made my way back into the light and took a management position at Allianz for a long time. And now I’m back in the more technical, programming side of things, because I find there’s always this pendulum, right? As a data professional, you see these problems in organizations and you wanna fix them, make it better, make the data easier to consume, and to an extent you can with your tools and skills and all this stuff.

But the problems are often partly organizational as well. So then you find yourself going into management and trying to change your organization to work better with data, but you lose the technical aspect of it a little bit. So it’s always this back and forth between wanting to improve the organization and wanting to keep up to date with the technical skills.

So that’s been my story all along,

Shane: And it’d be fair to say that over those 25 years you’ve focused a lot on automation. Before the term DataOps came out, I always remember a lot of your writing and thinking was around how to automate some of the tasks so that you don’t have to do them manually.

Roelant: Absolutely. Yep. I actually started that in 2000 with Tcl, which was part of the Oracle tooling at the time. Working with Oracle Warehouse Builder, may it rest in peace, we used this Tcl language to try to automate things, and we came up with ways to change the physical modeling to align better with automation, because refactoring takes time.

That’s also when we ran into hybrid modeling techniques such as Data Vault, because that was already more mature than what we were doing, but it still aligned with the same mindset of modular patterns that are easier to automate, quote unquote. So that whole approach of making life easier with automation started out as “we can do this, and it also saves a bit of time”, but it evolved into the mindset that working with data is based on a series of assumptions,

which are translated into a model, an interpretation of the world, right? That’s literally what a model is. And those interpretations are always going to be flawed, because you start with a certain background and you may not have full awareness or understanding of everything that is in place, and of the history of why things are where they are now.

The idea is that your interpretation of reality, your model, is always subject to change. The more you understand the data, the organization, the history, the knowledge that lives in people’s minds as a shared memory, the more you want to refine that model. And then you have to go back every time to update your code base.

And that’s why automation is such a critical thing. So automation, model-driven engineering, pattern-based design, those kinds of terms have always been the red thread through the work. Yeah.

Shane: And for me, it’s this idea that if the cost of change is lower, then we are more willing to change.

Roelant: Hundred percent.

Shane: We can manage more, change more often. We can iterate more often. We can take more risks. We can make earlier guesses, because we know the cost of refining that guess in the future is lower than if everything was manual. If everything’s hard-coded and everything involves a large amount of human effort, we just won’t take the risk, because we want to do a lot of design up front, because we don’t want to incur that high cost of change. I’m with you that the ability to automate gives us the ability to experiment.

Roelant: Absolutely. And then that links into things like data warehouse automation and the tooling and patterns that exist for it. So then you think about what kind of components we need.

How can we really specifically define them so they are reusable and they tick all the right boxes? I’ll get to what those boxes are in a bit. But my experience with the tooling back then, right, was that it always looked too easy, because certain things were missing. And at the same time, it shouldn’t be that hard, because once you figure it out and embed it once, you can use it as many times as you want, right? So there was this lingering feeling that the patterns we were using weren’t as correct as they should be.

If that pattern, that implementation approach, is generic and truly ticks all the boxes, then we can park it and leave it behind, because once we’ve figured it out once and got it right, we’re done. But with all these different tools and frameworks that people use, there was always some issue that sort of made them incompatible.

And the way I look at these systems, these data solutions as I call them, they’re not complicated, but they’re complex, right? There are a lot of tiny moving bits and pieces.

So we talk through, end to end, what it means to set up an architecture that is designed for change, what the components are that need to work together, and what kind of frameworks you need to think about. The message is that there are multiple viable pathways to implement things, but you need to understand that if you change one of the components on the left-hand side, it has downstream impacts on the components on the right-hand side.

So all these components need to be aligned and in sync with each other so that you get the correct functional results, and that’s really what it is. There are a couple of options, but you need to understand the consequences of those options and make a decision that you then capture in your design, and then you move on.

Working through that allows us to then build code generation templates and automate everything from there.

Shane: Cool. So what is the book about?

Roelant: So data engine thinking is an end-to-end overview of what it means to create a data solution that is designed for change. So what frameworks do we need? What kind of steps do we need to think about?

What kind of decisions do we need to make along the way? And it’s split into two general storylines. One is the theory, where we say: how do we deal with key distribution, bitemporal data, late-arriving changes? How do we manage time? How do we manage information models versus physical models? All that stuff, right?

So that’s the bulk. Then there’s a fictitious company, and there are a couple of reasons for that. One is that we feel some of the implementation choices you have are really opinionated, in some cases rightfully so. In some cases we try to make the point that it doesn’t really matter. But we try to take that emotional aspect and put it into the book’s storyline, so we can have that conversation about pros and cons and what it means, and how you get to a decision outside of the standards, if you will.

So it’s not like you have to do this; these are your options, and this is what we would recommend. That way we keep the emotional aspect out of it, and at the same time the story follows the typical discussions and dynamics that you have in a given company, right? So how do you get to an implementation of a new data warehouse or data solution or anything?

It starts with the first thing: let’s define the vision. What are the current problems? How do we have the conversation about buying a tool versus spending time on information modeling? How do we translate from the information model to a physical implementation? What kind of technology or methodology should we select?

And then all the way to how do we introduce automation? Why does it matter? How do we implement testing? How do we roll it out into DevOps all the way to the end result?

Shane: Okay, so did I hear that you picked up the pattern for the book from The Goal and The Phoenix Project, where you tell a story to reinforce some of the messaging, rather than having somebody guess what the patterns are?

’cause if you go through The Phoenix Project, after a while you go, oh holy shit, this is about DevOps. I can start seeing it. But it comes as a bit of a shock, right, if you haven’t read it before.

Roelant: Yeah. Yeah.

Shane: So I think what you’re saying is you’re calling out the patterns and then using a quasi-real-life example story to reinforce how you decide between the options.

’cause they’re all good options, but certain patterns are more valuable given the context, and others become anti-patterns. So you combine both: describing the pattern, and then telling a story to give us a reinforcing model of how you choose and how you use them. Is that right?

Roelant: Yep, that’s right.

And that goes hand in hand all the way through the book. We hope that people can use this to implement a fully automated solution themselves. It should be really practical.

Shane: Okay. And then the other thing is I tend to talk about information value stream and information factory, and I’ll give you the difference.

So the information value stream is where a team works out how they’re gonna identify a problem in the organization, how they’re gonna ideate the possible choices they have for solving that problem, how they’re gonna discover which option looks the most viable, feasible and valuable, and how they’re then gonna get the work prioritized so it can go into their delivery queue. So it’s more product thinking on the left-hand side (I’m using my hands here). And then on the right-hand side, once it’s been prioritized, they pick it up and do some light design, some building, some deployment. They release that value back to the stakeholders, they may maintain or decommission it over time, and then they go back, and it’s like a continuum.

Again, they’re aiming for speed through that, so they can find a problem and deliver back quickly. The information factory is more about the way we build the platforms that support that work, the way we move data through it all the way from collection through to consumption, and where we can use platforms and tools to automate work that has value when it’s automated, trying to turn that work into as much of a factory as possible.

Still realizing that it’s half art, half science, ’cause no data ever survives our first engagement. So using those two models, I get the feeling that Data Engine Thinking is about the information factory: how you make decisions around the vision for the platform, the components you need to build for the platform, the trade-offs that you have on every component, how you automate it, how you deploy it, all that engineering craft and practice.

Is that right?

Roelant: That’s spot on, right? Definitely more in the information factory area. As part of the modeling, the information gathering and the interactions with the quote unquote business side of the company, the interface with that is the information model. So we use a couple of examples, but stick to FCOM mostly, where we say you need to have an understandable way of communicating with everybody in a way that is unambiguous. We feel that concepts in general are really suited for that, but we don’t talk about how you workshop that. Then you feed the results of that into your engine, and everything will refactor automatically and look however you want it to. Not the same way, but, yeah, the result of that decision.

Shane: Okay. And so when you talk about the information model and the pattern that you’re adopting, are you talking about a business model or conceptual model of the data for the organization?

Roelant: Yep, a hundred percent. We make the case that that is the true IP. You want to use things that are available in the market at the moment for that, but definitely generate the physical model pretty much automatically.

So that’s also a pattern that you can decide on, codify and then run. So the conceptual model is the input, and then everything downstream will be generated.

Shane: And then I often talk about hydration. I say that if you get your conceptual model right, and then you understand the attributes, which is a form of logical modeling, then you should be able to hydrate your physical model using any modeling pattern you want.

You should be able to deploy it as Anchor, Data Vault, dimensional or relational, because, like you said, it’s that conceptual understanding of the data that’s more important. And then the physical modeling can change depending on the technology, the user, the tools, all those constraints that you have that make one physical modeling pattern seem better for you in that situation versus another.

Versus the old days, where we were dogmatic and we were Data Vault for everything, or dimensional. Not quite Inmon versus Kimball, but Data Vault versus dimensional was definitely a key scrap a few years ago, from memory.

Roelant: Hundred percent. In that context, we split the term pattern into a design pattern and a solution pattern.

The design pattern is more the what: what do we need to do, how should it work, what are the conceptual boxes that we tick. The solution pattern is the specific implementation on a given technology. So the design pattern itself is holistic, generic, technology-agnostic, all that stuff, and that includes translating the logical model to the physical model, for example.

So you have a specific idea of how this should work in technology X, and then you can switch over to something else when you need to, so the technology becomes less of a... Yes, you need to optimize it, but it’s also not really where the IP resides.

Shane: Okay. And so if I play that back, because I was thinking about the complexity of the path.

So I can go and say there’s a design pattern of historize data: where data changes in the source system, we want to be able to see every historical view or instantiation of that data over time. So that’s like a big pattern in my head. Is that what you call a design pattern? And then I can go, if I deploy an SCD Type 2 dimension, that gives me a way of storing historicized data.

Or I could do a satellite on a Data Vault hub, which gives me the ability to store the history. I could store standard change records in a single table. There’s a whole lot of technical patterns for the physical modeling. Then I could do that using dbt, or I could do it using Oracle Warehouse Builder if I still had it. I could do it in SQL code, I could do it in Python, heaven forbid.

I could do it in Java or Scala if I’m back on the Hadoop stack. And then actually I could physically store it in some form of Databricks, some form of Fabric, some form of Snowflake. Or both, or, as we always do, all of them. So they’re just solution patterns.

They’re just different ways, but people often struggle with the complexity, ’cause we’re almost in an OLAP cube now, which is: hey, I’ve got this one simple design pattern, historicize data. And sorry, I haven’t even brought in my data layers, so it could be persistent staging, EDW, presentation, or it could be bronze, silver, gold.

I have a whole lot of different language just for the layers. So do you cover the way you should think about it when you have all these complexities, to take it from a design pattern to a solution pattern?

Roelant: Yeah, and it’s actually one of the more, more difficult things we found to explain and to write, and we ended up moving text and content around quite a bit to try to explain that the best way we can.

So first of all, the solution architecture can partly be seen as the collection of all design patterns. Specific patterns apply to specific architectures, is another way of looking at it. So we start by saying: these are the high-level architectures you can think about, right? The high-level building blocks.

PSA was mentioned, persistence was mentioned, and we say that at that level you need to make a call and include one of these design patterns in your solution design, because that will have a lot of downstream impact. And we try to explain: if you don’t do this, then you need to think about this; and if you do this, then you don’t need to do that.

But if you do that, then... So it’s almost like a decision tree of options that you need to think about. And that’s a critical first part, because once that is in place, it changes the way the next layer or downstream processes will work. For example, if you don’t have a PSA, your refactoring, your reloading of historical data into a different model, will look different, right?

You can still do it; it’ll just be different. So the complexity shifts that way a little bit. Whether you use an insert-only pattern or also allow updates in the data shifts the way you interpret the timelines when you deliver data for information. So we need to take a couple of high-level decisions and understand what the implications are.

Shane: If I think about it, the way we’ve dealt with this in the past is we have blueprints for reference architectures. If you look at any experienced data architect that’s both a data architect and a data platform architect, they effectively have a small number of blueprints in their head that have been crafted for certain styles of organization: large versus small, distributed versus not, complex data, fast-moving data, big data where we talk about terabytes, and a whole lot of other patterns. They bucket them into blueprints, templates that they bring first, and then they iterate on them for the things that don’t fit the context of that organization. I think what you’re telling me is you’ve taken that up a level to say: actually, here are the building blocks of those blueprints and reference architectures, here are the choices that you need to make, which will tell you which block to adopt, and then the consequences that decision will have later or earlier in your information factory.

Roelant: Yeah, exactly. And so that, that’s a good way to, to put it at that level.

And what we also feel is that once we define that reference architecture, we do select one, right? So we’ve got 12 different blueprints, if you will, and then one that we say is our reference. We’ll just continue with that, but keep the others in mind and refer to them throughout the book.

But we also feel that this complexity is something that can be understood, managed and codified once, to the point that we then don’t have to worry about it as much. And that’s sometimes a controversial topic, right? Basically what we’re saying is it doesn’t matter who you are: if you use that approach, it does everything you need.

And it’s about making sure that one solution will always work. And again, we’re not talking about the technical level, the solution pattern, because the infrastructure and technology will be slightly different from case to case, but the architecture, the design of the platform itself, should be the same for everyone.

Shane: So let me ask a couple of questions to clarify what you’ve just said. I can interpret that as what I call an anti-pattern, which is: whenever you’re modeling, party-role is the only table you need, because it covers every use case for every organization, and I’m not personally a great fan of that pattern. Or I can infer it as you saying: historize data by default, because you’re gonna need it. If you don’t do it now, you’re gonna have to do it at another stage. It always has to be done, so just decide which pattern you’re gonna use to do it, and here are your choices. So at the design block, we do need to historicize data; now we just need to figure out which solution design we’re gonna use to create the historization.

Roelant: Yeah, pretty much. It’s definitely the second. The party-role thing is more on the modeling side, and there’s more to say on that, but the point I’m trying to make is about what a given architecture needs in order to do what we want it to do, which is being endlessly refactorable and all that, right? So you can morph your model and move with the organization, all that good stuff.

You have to have a couple of things. You have to have a historization point, and you have to specify what we call an assertion timeline and a state timeline: a technical one and a business equivalent, right? Because you have to be able to handle late-arriving transactions, future-dated data, that kind of stuff. So one of these blocks is to have these two timelines in place at all times and to solve the problems associated with them, like bitemporality. These are complex things.
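The two timelines Roelant describes can be illustrated in a few lines. This is a minimal sketch, not the book’s implementation: the field names `state_from` and `asserted_at` and the helper `as_of` are hypothetical, and it assumes each row records when a fact became true (the state timeline) and when the platform learned about it (the assertion timeline). Querying along both axes is what lets a late-arriving change be handled without rewriting history.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class BitemporalRow:
    key: str
    value: str
    state_from: date   # state (business) timeline: when the fact became true
    asserted_at: date  # assertion (technical) timeline: when the platform learned it


def as_of(rows: List[BitemporalRow], key: str,
          state_date: date, assertion_date: date) -> Optional[str]:
    """Value of `key` that was true on `state_date`, as known on `assertion_date`."""
    candidates = [
        r for r in rows
        if r.key == key
        and r.asserted_at <= assertion_date  # ignore what we had not yet learned
        and r.state_from <= state_date       # ignore facts that start later
    ]
    if not candidates:
        return None
    # Latest state wins; for the same state date, the latest assertion (a correction) wins.
    return max(candidates, key=lambda r: (r.state_from, r.asserted_at)).value


# A late-arriving change: the move happened on 1 March but only arrived on 10 May.
rows = [
    BitemporalRow("C1", "Wellington", date(2024, 1, 1), date(2024, 1, 2)),
    BitemporalRow("C1", "Auckland", date(2024, 3, 1), date(2024, 5, 10)),
]
print(as_of(rows, "C1", date(2024, 4, 1), date(2024, 4, 15)))  # the move is not yet known
print(as_of(rows, "C1", date(2024, 4, 1), date(2024, 6, 1)))   # same business date, later knowledge
```

Because both dates are kept, the same business date can be answered differently depending on what was known at the time, which is the behaviour bitemporality is meant to provide.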

We argue you have to have all of them: historization, bitemporality, a way of delivering information. You can make some decisions here and there, but there are a couple of core things you need to make it work, and if you don’t have them, it won’t work. We also argue that to truly work with data in your organization, there’s no avoiding them.

Sooner or later you will run into these problems, so you might as well tackle them upfront. Embrace that complexity, make sure it’s understood and codified once, and then you don’t have to worry about it as much, because at that point it becomes pattern-based, right? You know that you need two timelines.

You know that the way to fix timeline gaps or date issues is the same over and over again. The pattern for delivering data is gonna be the same no matter what you do. These are complex things in themselves.

Shane: I’m gonna drill down into that in a second. I just wanna go back and get a couple more examples.

’Cause bitemporality is quite a deep thing to understand as a data person. So let me go back to a couple of examples that are a bit simpler to understand and that I always see in a data platform. One is reference data: the ability to have a bunch of data with some lookups that isn’t created in a source system.

It’s in Excel, Google Sheets or a Word document, and we need to be able to capture that data and iterate on it in the data platform. I see that one a lot. The other is file drop: the ability to grab a CSV file, a JSON file, an XML file or, heaven forbid, an Excel file, and manually drop it into the platform for an ad hoc task. I see that one all the time.

Okay. So those are design pattern blocks. You don’t have to build them on day one, but you’re gonna build them. If your data platform survives for a couple of years, you will create those features, ’cause everybody wants them.

Roelant: And if you do that, make sure these boxes are ticked, because it needs to adhere to the fundamental principles. Everything else has to as well.

Shane: Okay. And then if we look at the example of SCD Type 2, and hubs, satellites and links. The value of those patterns is that you can write SCD Type 2 code and it’s the same code every time. It is a known solution pattern. You write that code, you harden it, and whenever you want to create an SCD Type 2 dimension, that code becomes bulletproof.
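As a rough illustration of why SCD Type 2 code is so reusable, here is a minimal sketch of the change-application step, assuming an in-memory list stands in for the dimension table. `DimRow`, `apply_scd2` and the `9999-12-31` high date are illustrative conventions rather than any specific tool’s API, and deletes and late-arriving records are deliberately left out.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import Dict, List, Tuple

HIGH_DATE = date(9999, 12, 31)  # conventional "still current" end date


@dataclass(frozen=True)
class DimRow:
    key: str
    attrs: Tuple[str, ...]      # the tracked (Type 2) attributes
    valid_from: date
    valid_to: date = HIGH_DATE  # end-exclusive: equals the next version's valid_from


def apply_scd2(dim: List[DimRow], incoming: Dict[str, Tuple[str, ...]],
               load_date: date) -> List[DimRow]:
    """Apply one batch of source records to a Type 2 dimension and return the new state."""
    current = {r.key: r for r in dim if r.valid_to == HIGH_DATE}
    result = [r for r in dim if r.valid_to != HIGH_DATE]  # closed history never changes
    for key, row in current.items():
        new_attrs = incoming.get(key)
        if new_attrs is None or new_attrs == row.attrs:
            result.append(row)                                # no change: keep the open version
        else:
            result.append(replace(row, valid_to=load_date))   # close the old version
            result.append(DimRow(key, new_attrs, load_date))  # open the new version
    for key, new_attrs in incoming.items():
        if key not in current:
            result.append(DimRow(key, new_attrs, load_date))  # first time we see this key
    return result


d1 = apply_scd2([], {"C1": ("Wellington",)}, date(2024, 1, 1))
d2 = apply_scd2(d1, {"C1": ("Auckland",)}, date(2024, 3, 1))
d3 = apply_scd2(d2, {"C1": ("Auckland",)}, date(2024, 4, 1))  # re-delivering the same data
print(len(d2), len(d3))
```

Re-delivering unchanged data adds no rows, which is the kind of property that gets hardened once the same code runs across every dimension.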

It’s the same reason I like Data Vault. Yep. Because you can create the code that says create hub, create link, create satellite, load hub, load link, load satellite. You do all the exception ones, of course, but those six pieces of code become bulletproof and reusable. They are used so often that every bug gets found and every use case gets solved.
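The Data Vault building blocks Shane lists follow the same few rules every time. Here is a minimal sketch of two of them, assuming insert-only loads, MD5 hash keys (used only for illustration; teams choose their own hash function) and hypothetical column names such as `hub_key` and `hash_diff`. A link load would follow the same shape, with a hash over the combined business keys.

```python
import hashlib
from typing import Dict, List, Tuple


def _md5(text: str) -> str:
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def hash_key(business_key: str) -> str:
    # Normalise the business key so " c1" and "C1" resolve to the same hub row.
    return _md5(business_key.strip().upper())


def hash_diff(attrs: Dict[str, str]) -> str:
    # Sorted, named attributes give a stable fingerprint of the payload.
    return _md5("||".join(f"{k}={attrs[k]}" for k in sorted(attrs)))


def load_hub(hub: Dict[str, dict], business_keys: List[str], load_date: str, source: str) -> None:
    """Insert-only hub load: add each business key once, never update."""
    for bk in business_keys:
        hk = hash_key(bk)
        if hk not in hub:
            hub[hk] = {"business_key": bk.strip(), "load_date": load_date, "record_source": source}


def load_satellite(sat: List[dict], records: List[Tuple[str, Dict[str, str]]],
                   load_date: str, source: str) -> None:
    """Insert-only satellite load: add a row only when the payload differs from the latest one."""
    latest = {row["hub_key"]: row["hash_diff"] for row in sat}  # rows are kept in load order
    for bk, attrs in records:
        hk, diff = hash_key(bk), hash_diff(attrs)
        if latest.get(hk) != diff:
            sat.append({"hub_key": hk, "load_date": load_date, "record_source": source,
                        "hash_diff": diff, **attrs})
            latest[hk] = diff


hub: Dict[str, dict] = {}
sat: List[dict] = []
load_hub(hub, ["C1", " c1", "C2"], "2024-01-01", "crm")
load_satellite(sat, [("C1", {"city": "Wellington"})], "2024-01-01", "crm")
load_satellite(sat, [("C1", {"city": "Wellington"})], "2024-01-02", "crm")  # unchanged: no new row
load_satellite(sat, [("c1", {"city": "Auckland"})], "2024-03-01", "crm")    # changed: new row
print(len(hub), len(sat))
```

Because both loads are idempotent and insert-only, rerunning a batch is harmless, which is a large part of what makes this code safe to reuse everywhere.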

You can just trust that code. And I think what you’re saying is that we take that and apply it to the design patterns: let’s come up with solution patterns that get used time and time again. Then we’re effectively iterating those solution patterns, giving them more test rounds and more coverage, so they become bulletproof and easier to implement. And therefore, why wouldn’t we do historization if we could just drop a solution pattern in and know it’s gonna work?

We don’t have to build it ourselves from scratch. Is that what you’re saying?

Roelant: Absolutely. And extend that to more complicated things, like the bitemporality you mentioned.

Shane: Okay. So when you get these complex use cases, what you’re looking for is a proven solution pattern, so you don’t have to invent it yourself. You’re effectively dropping in that Lego block, and the problem’s taken away, to a degree.

And that’s controversial, right? Yeah. It is, but I dunno why. So let’s discuss that, because in the DevOps world you create Terraform templates to deploy your servers as cattle. You protect the Terraform code like nothing else. You deploy your servers, throw a server away, redeploy it. It’s a proven pattern. In data, we seem to like to handcraft things.

Roelant: Oddly.

I don’t think there is yet a set of patterns that has been described well enough to tick all the boxes and actually work in all scenarios. What works for one methodology doesn’t work for another, or there are a couple of gaps where shortcuts are taken to avoid some of the nasty things that may happen.

And then you run into them and have to make changes. So it hasn’t matured enough yet. It’s almost there, but not quite. That’s one reason there’s a lot of handcrafting. The other is that data professionals seem a bit shy of exposing too much complexity if they can avoid it. So they opt for simpler patterns if those tick the box for a little while longer, and don’t worry about the things that will go wrong in the next six months or so.

It’s, ah, this’ll make it too complicated, and what will people think? I sense that quite a bit, and I’m like, look, satellites are a great example. Data Vault satellites, right? Yes, historization, that’s easy, but we argue you basically always need to set up your satellites for bitemporality, because if you don’t, you’ll run into the problem, and then you have to go back, reinvent the wheel and change your patterns, and there’s no proper tool that fully supports that.

So you go into hand coding, and it gets all kinds of weird. It’s that combination: not everything has been fully thought through yet, in my opinion, combined with let’s be a bit careful about how complex we make things, because what will people think is necessary?

Shane: So yeah, we’ve talked about this on and off over the years, and I’ve struggled to find a template or a pattern to describe patterns.

It’s one of those things where I can write a name, I can write a description, I can provide some context about where it has value or not, I can provide an example, but then it just starts getting hard. Writing a pattern so that somebody can pick it up, read it and actually understand how to apply it is something I’ve always struggled with.

With your design patterns, how far down that path did you get with the book?

Roelant: We have defined two templates that we follow throughout the book. By the way, when we launch the book, we’ll also launch a GitHub repository with all these code examples, patterns, things you can run yourself, templates and everything.

So everything we’ve used in our projects over the years and added to the book will be publicly available, with a view to collaborating on it further. These templates will be there and they cover a couple of things, but a template with a couple of headers is never gonna fully cut it, because you need more of a dynamic, interactive sort of thing.

So the way I look at it, the sections we’ve defined in the book go through all the options and considerations as part of that pattern, to use the word again: error and exception handling, reference data. So we say, this is what you need to think about. And at these points we say: in your template, include this and explain why you are doing it.

Why am I including or excluding this? Why am I making this decision? So we basically provide a checklist of things you need to include in the template, and that becomes part of your solution design. We acknowledge that it’s almost like a graph model, right, tying these things together, and the same goes for the pattern definitions. But we think that’s a good start, because it elevates things to a higher-level concept and then breaks them up into smaller pieces that you can include or exclude.
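One way to picture the checklist Roelant describes is a template where every consideration needs both a decision and a reason before the solution design is complete. This is a hypothetical structure for illustration, not the template from the book; `Consideration`, `PatternTemplate` and the topic names are assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Consideration:
    topic: str                       # e.g. "error and exception handling"
    included: Optional[bool] = None  # None means no decision has been made yet
    rationale: str = ""              # why it is included or excluded


@dataclass
class PatternTemplate:
    name: str
    considerations: List[Consideration] = field(default_factory=list)

    def decide(self, topic: str, included: bool, rationale: str) -> None:
        for c in self.considerations:
            if c.topic == topic:
                c.included, c.rationale = included, rationale
                return
        raise KeyError(f"unknown consideration: {topic}")

    def open_items(self) -> List[str]:
        """Considerations that still lack a decision or a written rationale."""
        return [c.topic for c in self.considerations
                if c.included is None or not c.rationale.strip()]


template = PatternTemplate("customer staging load", [
    Consideration("error and exception handling"),
    Consideration("reference data"),
    Consideration("bitemporality"),
])
template.decide("bitemporality", True, "Source sends back-dated corrections.")
template.decide("reference data", False, "")  # decided, but the 'why' is still missing
print(template.open_items())
```

Treating a missing rationale as an open item mirrors the point that the template should record why each option was included or excluded, not just the decision.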

Shane: Okay. So it effectively becomes a pattern library, and then some golden paths, or guided paths, for the way you would typically put the patterns together. Because, like you said, there’s a common set that we all do, and then there are the exceptions we end up doing because the organization’s context is, for some reason, so different from everybody else’s that we go, ah, actually we have to deal with that one differently here, for this reason.

But we only deal with that as an exception, because, I dunno about you, but the number of times I’ve worked with an organization that said they’re special and unique, and I’m sitting there going, uh, I’ve seen this a few times before, I’m not quite sure you’re special and unique. Still special, just not that unique, right?

Yeah, yeah. True. And then we end up spending six to 12 months building something because they said they wanted it, and they never actually use it.

Roelant: Yeah. And the second part is always an interesting thing.

More complexity means more time, more maintenance, all that stuff. So again, automation is key to take that concern away, right? ’Cause we feel you need that complexity. But we also understand you don’t wanna build a Jaguar when you only need a Mazda 2 or whatever to get where you need to be initially, but you should at least take a couple of options that prepare you for the future.

Automation is essential there. So once you have that foundation in order, then...

And also the notion of, I guess, the complexity that we’ve attributed. There’s this ideal I’ve been talking about for years and years, and it’s also one of the red threads in the book, even though I don’t often make it explicit: the virtualization idea, right? My dream is still to have a virtual representation of data that doesn’t require persisting data through all these layers and areas, with all that complexity. You just have the data you need, historized in the most fundamental way.

But after that, everything you put on top of it is derived from the information model in the same way you would traditionally do by creating a physical model and creating data logistics, quote-unquote ETL processes and all that data movement, right? All that stuff can be replaced with the virtual representation.

The funny thing is it still needs to tick the same boxes: you still need bitemporality, historization, reference data, blah, blah, all the things we’ve talked about. But it’s such a powerful idea, and in the book we always try to come back to it and say: if you do this, think about how it would behave in this virtual world.

Can we still do this? Can I still go to one version of the data and then to another version of the data, back to the original version of the data and make it look the exact same way, right? Deterministic processing. If the answer is yes, then you know your solution works. If the answer is no, you’re gonna get in trouble.
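Roelant’s go-to-another-version-and-back question can be turned into an automated check. This is a minimal sketch, assuming outputs are rebuilt purely from persisted history plus a versioned rule set; `build_output`, `fingerprint`, `is_deterministic` and the rule dictionaries are hypothetical names. The impure rule set shows the kind of hidden state such a test is meant to catch.

```python
import hashlib
import itertools
import json
from typing import Callable, Dict, List

Row = Dict[str, object]
Rules = Dict[str, Callable[[Row], Row]]


def build_output(history: List[Row], rules: Rules, version: str) -> List[Row]:
    """Derive an output purely from persisted history plus one versioned rule set."""
    transform = rules[version]
    return [transform(r) for r in sorted(history, key=lambda r: (r["key"], r["load_date"]))]


def fingerprint(rows: List[Row]) -> str:
    # sort_keys makes the JSON text, and therefore the hash, independent of dict ordering.
    return hashlib.sha256(json.dumps(rows, sort_keys=True).encode("utf-8")).hexdigest()


def is_deterministic(history: List[Row], rules: Rules, v_a: str, v_b: str) -> bool:
    """Go to version A, then to version B, then back to A: A must look exactly the same."""
    first = fingerprint(build_output(history, rules, v_a))
    build_output(history, rules, v_b)
    again = fingerprint(build_output(history, rules, v_a))
    return first == again


history: List[Row] = [
    {"key": "C1", "load_date": "2024-01-01", "city": "wellington"},
    {"key": "C2", "load_date": "2024-02-01", "city": "nelson"},
]
pure_rules: Rules = {
    "1.0": lambda r: {**r, "city": r["city"].upper()},
    "1.1": lambda r: {**r, "city": r["city"].title()},
}
counter = itertools.count()  # hidden state: the kind of thing that breaks determinism
impure_rules: Rules = {
    "1.0": lambda r: {**r, "seq": next(counter)},
    "1.1": lambda r: dict(r),
}
print(is_deterministic(history, pure_rules, "1.0", "1.1"))
print(is_deterministic(history, impure_rules, "1.0", "1.1"))
```

The pure rules pass and the counter-based rule fails, because rebuilding version 1.0 a second time produces different sequence numbers.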

Similarly for things like: do I select an insert-only approach, or do I support updates in my data? If you do an SCD Type 2, as in your example earlier, do I update the end dates? Can I derive them? Why is it necessary? Does it matter? How do we do things like driving-key link satellites in an insert-only approach?

Or, for example, how do we do bitemporality in an insert-only approach? The funny thing is you can do all that. There’s no limitation, but it’ll have some impact on your consumption of data. It’s gonna work, though. So those kinds of ideas continue to fascinate me.
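For the insert-only question, one common answer is to never store an end date and to derive it when reading. This is a minimal sketch, assuming ISO-formatted date strings and hypothetical column names; in SQL the same result would typically come from `COALESCE(LEAD(load_date) OVER (PARTITION BY key ORDER BY load_date), '9999-12-31')`.

```python
from itertools import groupby
from operator import itemgetter
from typing import Dict, List

HIGH_DATE = "9999-12-31"  # ISO date strings sort correctly as plain strings


def derive_end_dates(rows: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Compute end dates at read time instead of updating stored rows.

    Each version ends where the next version of the same key starts.
    """
    ordered = sorted(rows, key=itemgetter("key", "load_date"))
    out = []
    for _, group in groupby(ordered, key=itemgetter("key")):
        versions = list(group)
        for current, nxt in zip(versions, versions[1:] + [None]):
            end = nxt["load_date"] if nxt is not None else HIGH_DATE
            out.append({**current, "end_date": end})  # stored rows are never modified
    return out


stored = [  # insert-only: rows are only ever appended, in any order
    {"key": "C1", "load_date": "2024-03-01", "city": "Auckland"},
    {"key": "C1", "load_date": "2024-01-01", "city": "Wellington"},
    {"key": "C2", "load_date": "2024-02-01", "city": "Nelson"},
]
for row in derive_end_dates(stored):
    print(row["key"], row["city"], row["load_date"], "->", row["end_date"])
```

The trade-off Roelant mentions shows up here: loading stays simple and append-only, while consumers pay a little extra work each time the end dates are derived.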

Shane: I’m the same. We’ve experimented with full virtualization in our platform as a core concept.

And we always end up regressing back to physicalizing data at some point, because we had a constraint in either cost or performance, but more often the constraint was in our thinking. When we bring in the next pattern and it’s all virtualized, we lose the ability to trace the physical data flow and understand whether we’ve applied the pattern properly or not.

I’ll give you an example. It’s that graph problem: there are just too many things going on for our little brains. We run a form of Data Vault modeling for our physical structures, but not for our conceptual structures, and we effectively treat link tables as event tables.

So we can record “a customer ordered a product from this store and this employee” as an event. And we hydrate our consume layer so you can see all the data denormalized into one big table. For that event, you can see the customer and all their details, the order and all their details, the product and all their details, the store and all its details, and the employee, right there in one table.

So that first cut was easy. Then you go, okay, now we wanna make sure that when we hydrate, we pick up the version of the data that existed at the point in time the event happened. So now we’ve gotta take our hubs and satellites, or our dims, and find the right point in time to hydrate from, so it looks the way it did when that event happened.
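The hydration step Shane describes boils down to a point-in-time lookup: for each event, find the dimension version whose start date is the latest one on or before the event date. This is a minimal sketch, assuming each key’s versions are held as a list sorted by `valid_from`; `version_at`, `hydrate` and the column prefixes are hypothetical, and only the state timeline is used (a bitemporal version would also filter on assertion time).

```python
from bisect import bisect_right
from datetime import date
from typing import Dict, List, Optional, Tuple

Version = Tuple[date, Dict[str, str]]  # (valid_from, attributes), sorted by valid_from


def version_at(versions: List[Version], when: date) -> Optional[Dict[str, str]]:
    """Attributes valid at `when`: the last version whose valid_from is on or before it."""
    i = bisect_right([v[0] for v in versions], when) - 1
    return versions[i][1] if i >= 0 else None


def hydrate(events: List[dict], dims: Dict[str, Dict[str, List[Version]]],
            key_cols: Dict[str, str]) -> List[dict]:
    """Flatten each event with the dimension versions that existed when it happened."""
    wide = []
    for ev in events:
        row = dict(ev)
        for dim_name, key_col in key_cols.items():
            attrs = version_at(dims[dim_name].get(ev[key_col], []), ev["event_date"])
            for col, val in (attrs or {}).items():
                row[f"{dim_name}_{col}"] = val
        wide.append(row)
    return wide


dims = {"customer": {"C1": [(date(2024, 1, 1), {"city": "Wellington"}),
                            (date(2024, 3, 1), {"city": "Auckland"})]}}
events = [
    {"order_id": 1, "customer_id": "C1", "event_date": date(2024, 2, 15)},
    {"order_id": 2, "customer_id": "C1", "event_date": date(2024, 3, 5)},
]
for row in hydrate(events, dims, {"customer": "customer_id"}):
    print(row["order_id"], row["customer_city"])
```

Each additional dimension is one more entry in `key_cols`, which is why the logic is easy to state but, as Shane notes, hard to keep in your head once rules and timelines are virtualized on top of it.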

And again, we can physicalize that, or we can virtualize it. Then we go to our ETL, our transformation code, which we call rules: the code or logic that applies some change to the data. Again, we can virtualize it. But now, if we want to say, actually, we’re gonna virtualize all of it, we’ve gotta say: this event happened at this point in time.

This was the raw data for each of those core concepts at that point in time, and this was the business rule being applied at exactly that point in time, and then switch it out. With reasonable volumes of data, the platform could probably handle it, but we can’t see that graph. It becomes so complex that testing every case and making sure the pattern is hardened becomes so intensive that we go, is it worth it right now? And what you’re saying is, if that pattern was available to us and we could just apply it, then hell yeah, we would, because we know it’s a proven pattern and we can trust it’s already been tested.

We don’t have to craft it ourselves, and then we can trust it to be virtualized. So I think that’s part of it: you have to get that graph in your head, and every piece of complexity you add, ’cause it does become complex, makes it harder to prove that your code is doing what it should for every use case.

That’s the problem that we need to...

Roelant: Yeah, absolutely. There’s so much to unpack there, right? To start, I always say we’re in the business of trust. We are delivering the data, so we have to prove it’s correct. And by correct, I don’t mean according to the business’s expectations, but that we can audit back to the original data at any point in time, because if we can’t, we lose that trust, and we lose all of that.

So having that lineage is essential, and it ties into versioning: we have to version-control the metadata and the code generation templates hand in hand with the data, which is built in.

As for full virtualization, there are still too many practical barriers at the moment to make it realistic, but I see it as a test to make sure that the patterns you do have are working as intended. If you can virtualize, you can also physicalize or materialize and do all these other things, but if you can’t virtualize it, then something’s wrong with your patterns. So it’s more like a test to make sure everything is correct. And then, when those performance bottlenecks are potentially resolved, or the data sets are manageable enough, the ideal I have for mitigating the kinds of concerns you mentioned is almost the same as how API versions work, in my view.

So you have this data solution that acts as a surface for data consumers to extract their information, and they use that data as per a specific version, like a release, right? You’ve got the model like this, this data, following these rules, et cetera. So you get the data, and if you change anything in your design, you could theoretically spin up a second version, version 1.1, next to the original version. Give people some time to cut over and to understand what’s happening. All that lineage will still be captured and visible in your version control history. That’s how I look at it. Again, this is the ideal world, but I do think that thinking about your solution in those terms helps make it as robust as it can be.
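The API-style versioning Roelant describes could look something like this: several releases derived from the same history, served side by side until consumers have cut over. This is a minimal sketch; `Release`, `ConsumptionSurface` and the sample data are hypothetical, and in practice each release would more likely be a versioned set of views than Python functions.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, List

Row = Dict[str, str]


@dataclass(frozen=True)
class Release:
    version: str
    derive: Callable[[List[Row]], List[Row]]  # how this release presents the shared history
    retired: bool = False


class ConsumptionSurface:
    """Several releases of the same data, served side by side from one history."""

    def __init__(self) -> None:
        self._releases: Dict[str, Release] = {}

    def publish(self, release: Release) -> None:
        self._releases[release.version] = release

    def retire(self, version: str) -> None:
        self._releases[version] = replace(self._releases[version], retired=True)

    def read(self, version: str, history: List[Row]) -> List[Row]:
        release = self._releases[version]
        if release.retired:
            raise LookupError(f"release {version} is retired; please move to a newer release")
        return release.derive(history)


history = [{"customer_id": "C1", "full_name": "Ana Smith"}]
surface = ConsumptionSurface()
surface.publish(Release("1.0", lambda h: [dict(r) for r in h]))
# Release 1.1 is a design change: the name is split into two columns.
surface.publish(Release("1.1", lambda h: [
    {"customer_id": r["customer_id"],
     "first_name": r["full_name"].split(" ", 1)[0],
     "last_name": r["full_name"].split(" ", 1)[1]}
    for r in h
]))
print(surface.read("1.0", history))  # consumers on 1.0 are unaffected during the cut-over
print(surface.read("1.1", history))
surface.retire("1.0")                # after the agreed cut-over period
```

Both releases read the same underlying history, so retiring 1.0 removes a presentation, not data, and the lineage between them stays in version control.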

Shane: And I think technology’s moved on. Yeah. ’Cause we use Google BigQuery under the covers, and we can virtualize all the way through.

Roelant: It’s always the constraints you have to work with.

Shane: Yeah. I remember the old days of Oracle servers, when we used to have to order them from Singapore, have them shipped over and get more memory put in.

And then we got really expensive Teradata boxes that could handle the volume but cost a fortune. The guys from MotherDuck talk about how, for many people, it’s not a big data problem, and I think that’s true. So I think the technology is a lot closer to being able to support virtualization. I just don’t think we are, as data professionals. This idea of having reusable design patterns, with solution patterns to support them and then proven code, takes us one step closer to being able to virtualize safely all the way through, especially with things like zero-copy cloning, where we don’t have to take a full copy of the data to test things out.

So maybe the first step is: whenever you’re doing any development, do it in an end-to-end virtualized pattern to test that it works, and then physicalize when you have a cost or performance constraint.

Roelant: That’s the final chapter of the book. The engine concept is what we start the book with, and it explains all the building blocks that make up a fully automated machine. One of the components is the optimizer, and we say exactly that, right?

So start virtual, but depending on which optimization directive you give it, like cost, storage, IO, latency, whatever it is, it can calculate, based on usage patterns, the best combination of physical and virtual objects to deliver that, right? So if you want to keep costs down because you’re running on Snowflake, for example, it might physicalize some parts because that helps you. If the directive is latency, maybe it doesn’t do that. The end result is that everything is balanced to make things work the best way.

Shane: And then you’re gonna have to bring in segmentation, whether domain-based, business-unit-based or process-based. So you say these data flows need to be low latency.

Roelant: Exactly. How cool is that, right? I want this mart refreshed every minute and this one every hour. And as we literally say in the book, that becomes a requirement, because as a data team you have limited resources no matter how you look at it. If you want to optimize that, it becomes more of a management decision, and then you get more resources to deliver that requirement, and you just update the directives and go.
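The optimizer idea (start virtual, physicalize only where a directive says it pays off) can be sketched with a toy cost model. Everything here is an assumption for illustration: the profile fields, the linear cost formula and the numbers are not from the book, and a real optimizer would weigh far more factors. The refresh requirement, every minute versus every hour, feeds directly into the cost of keeping a physical copy.

```python
from dataclasses import dataclass
from typing import Tuple

MINUTES_PER_DAY = 24 * 60


@dataclass
class ObjectProfile:
    name: str
    reads_per_day: int
    query_cost: float           # cost to derive the object on the fly, per read
    refresh_cost: float         # cost to rebuild a physical copy once
    refresh_every_minutes: int  # the refresh requirement, e.g. 1 or 60
    storage_cost_per_day: float
    virtual_latency_s: float    # read latency when derived on the fly
    physical_latency_s: float   # read latency from a materialised copy


def daily_costs(obj: ObjectProfile) -> Tuple[float, float]:
    """Return (virtual, physical) cost per day under a simple linear model."""
    virtual = obj.reads_per_day * obj.query_cost
    refreshes = MINUTES_PER_DAY / obj.refresh_every_minutes
    physical = refreshes * obj.refresh_cost + obj.storage_cost_per_day
    return virtual, physical


def choose(obj: ObjectProfile, directive: str) -> str:
    """Start virtual; physicalise only when the directive says it pays off."""
    virtual_cost, physical_cost = daily_costs(obj)
    if directive == "cost":
        return "physical" if physical_cost < virtual_cost else "virtual"
    if directive == "latency":
        return "physical" if obj.physical_latency_s < obj.virtual_latency_s else "virtual"
    raise ValueError(f"unknown directive: {directive}")


hourly_mart = ObjectProfile("sales_mart", 500, 0.02, 0.10, 60, 0.5, 3.0, 0.2)
minutely_mart = ObjectProfile("ops_mart", 20, 0.02, 0.10, 1, 0.5, 3.0, 0.2)
print(choose(hourly_mart, "cost"))       # many reads, few refreshes
print(choose(minutely_mart, "cost"))     # refreshing every minute costs more than it saves
print(choose(minutely_mart, "latency"))  # the directive now favours speed
```

Changing the directive or the refresh requirement changes the physical layout without touching the design, which is the separation Roelant describes.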

Shane: So if I take that future state, where we virtualize everything and it actually works, I could also see a future state where you basically pull up the design patterns, tick some boxes for them, pull up the constraints around which technologies it’s allowed to use, and it actually writes the solution patterns and the code and deploys itself as a fully config-driven, end-to-end platform from a couple of check boxes. There’d be a lot of check boxes, though, a lot of complexity.

Roelant: In our definition, the design patterns would remain the same, but the different solution patterns will be selected by the engine to deliver the updated physical implementation.

Shane: So are you saying that the design patterns become mandatory in your opinion?

Roelant: Yeah. Yeah, because that defines how your solution operates.

Historization, how to do reference data, all those things we discussed earlier. You need to decide how you wanna treat all those big blocks. But for each design pattern you’ve got a number of solution patterns for different technologies or concepts, so you can switch between those and let the solution refactor.
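Roelant’s split between a fixed design pattern and interchangeable solution patterns maps naturally onto a registry of code generators. This is a minimal sketch with hypothetical names (`solution_pattern`, `generate` and the metadata keys) and generic SQL DDL: both solutions implement historization, one insert-only with end dates derived at read time, the other with a stored `valid_to` column updated in place. A missing solution raises an error, which is the point where you would add one.

```python
from typing import Callable, Dict, List, Tuple

CodeGen = Callable[[dict], str]
# Hypothetical registry: (design pattern, solution pattern) -> code generator.
REGISTRY: Dict[Tuple[str, str], CodeGen] = {}


def solution_pattern(design: str, solution: str) -> Callable[[CodeGen], CodeGen]:
    """Decorator that registers a generator as one solution for a design pattern."""
    def register(fn: CodeGen) -> CodeGen:
        REGISTRY[(design, solution)] = fn
        return fn
    return register


def _columns(meta: dict, extra: List[str]) -> str:
    cols = [f"{meta['key']} CHAR(32) NOT NULL", "load_date TIMESTAMP NOT NULL", *extra]
    cols += [f"{name} {sql_type}" for name, sql_type in meta["attributes"]]
    return ",\n  ".join(cols)


@solution_pattern("historization", "insert_only")
def _insert_only(meta: dict) -> str:
    # No stored end date: rows are only appended and end dates are derived when read.
    body = _columns(meta, ["hash_diff CHAR(32) NOT NULL"])
    return f"CREATE TABLE {meta['target']} (\n  {body},\n  PRIMARY KEY ({meta['key']}, load_date)\n);"


@solution_pattern("historization", "end_date_update")
def _end_date_update(meta: dict) -> str:
    # Stored end date: the open version's valid_to is updated when a newer version arrives.
    body = _columns(meta, ["valid_to TIMESTAMP NOT NULL"])
    return f"CREATE TABLE {meta['target']} (\n  {body},\n  PRIMARY KEY ({meta['key']}, load_date)\n);"


def generate(design: str, solution: str, meta: dict) -> str:
    """Render DDL for the chosen solution; the design decision itself stays the same."""
    gen = REGISTRY.get((design, solution))
    if gen is None:
        raise LookupError(f"no '{solution}' solution pattern for '{design}' yet; add one")
    return gen(meta)


meta = {"target": "sat_customer", "key": "customer_hk", "attributes": [("city", "VARCHAR(100)")]}
print(generate("historization", "insert_only", meta))
print(generate("historization", "end_date_update", meta))
```

Switching solutions is then a change of one argument, while the decision that data is historized stays fixed.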

Shane: So let’s explore that a little bit, because again, it’s bringing in this idea of agility, which is the ability to change at low cost and with speed, not Scrum. And there’s this balance about work done upfront. There are times where you do work upfront that you don’t need right now, ’cause you know it’s gonna save you time in the future, but it is waste, ’cause you’re doing it before you need it.

In fact, there’s a really interesting conversation with Patrik Lager on LinkedIn at the moment, where he’s saying he actually doesn’t do any work upfront anymore. If he doesn’t need to build it, he doesn’t build it. So I’m gonna have to have a whole chat with him about that: hold on, doesn’t that just become a whole bunch of ad hoc? Although he’s got enough experience to probably know how to retrofit the patterns after the fact.

Yeah. Okay. But let’s not go off on that tangent. Let’s go through a couple of examples. I would say you always want to bring a historization pattern into your platform on day one, especially if you can just pull it out of the pattern library, because you are gonna need it. Then, say I was looking at feature flags for analytics, for machine learning.

I want to create a very wide, very long table keyed by customer, with a whole lot of feature flags: their age bucket, their usage bucket, their income bucket, which I’m gonna put into a machine learning model to do some clustering and segmentation.

I would probably not do that work until I had an information product that needed it. That would be my standard approach right now. What I think you’re saying is that if that is a design pattern, and it is, and if the solution patterns and the implementation code were readily available and proven, that box would be ticked by default, because at some stage I would need it.

And the cost of implementing it is almost nothing. Is that what you’re saying? Or did I misunderstand that?

Roelant: Partly. In this particular example, I would argue that every delivery of data uses the same pattern. No matter how you deliver, fully normalized or one big flat table, it’s all the same pattern.

You just select different columns. But conceptually, yes. The way I look at it, you would have a couple of solution patterns readily available, because we provide them with the book and the GitHub repository and all that, but they might not fit your technology. So would you then add them as you need them?
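Roelant’s point that every delivery uses the same pattern can be shown with a single template where only the column list changes. This is a minimal sketch, assuming generic `CREATE OR REPLACE VIEW` syntax and hypothetical object names; the narrow mart and the wide machine-learning feature table from Shane’s example both come from the same source.

```python
from typing import List


def delivery_view(name: str, source: str, columns: List[str]) -> str:
    """One delivery pattern for every output: only the selected columns differ."""
    if not columns:
        raise ValueError("a delivery needs at least one column")
    select_list = ",\n  ".join(columns)
    return f"CREATE OR REPLACE VIEW {name} AS\nSELECT\n  {select_list}\nFROM {source};"


# The same hydrated, point-in-time source feeds a narrow mart and a wide feature table.
source = "consume_order_events"
print(delivery_view("customer_mart", source, ["customer_id", "customer_city"]))
print(delivery_view("ml_customer_features", source,
                    ["customer_id", "age_bucket", "usage_bucket", "income_bucket"]))
```

Because the template is shared, the feature table costs little more than choosing its columns once the underlying pattern exists.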

I would think so. It would be good to have a library of patterns that we can all collaborate on, that is somewhat centrally available, where we can work together as a community to improve them. That’s a bit of an ideal-world dream, but in smaller groups it would certainly be possible. But if your solution pattern doesn’t exist for the specific technology you’re working with, yeah, you would have to add it.

And in that case, I would only add it when I need to add it.

Shane: But that idea of a pattern library we can all share and reuse already exists in the software domain. Software engineers have that already. They’ve done the work to make it happen and got the value out of it. In the data domain we don’t, right? We implement a new piece of technology or a new vendor and go, oh, SQL Server, I’m gonna do Snowflake now.

And we reinvent all the patterns again. Then it’s, oh, we’re gonna go Databricks, let me reinvent all the patterns again from scratch because I’m changing the technology stack. That’s just crazy.

Roelant: That’s just waste. Yeah. So much to learn from our friends in software development.

Shane: Yeah. And I still see large platform builds. I still see projects where it’s six months to a year before the platform’s available for the team to actually build something of value. That has changed a little bit, but not a lot, in my view.

Roelant: Not a lot. Hopefully a library like that, to use and contribute to, will help move things in that direction.

We’ve talked about this before, right? And those plans are still there.

Shane: I gotta say, at least you’ve been following this dream for a while. I can see that to write this book there are at least three big challenges that come to mind. One is building out this mental framework of design patterns and what they are, solution patterns and the code that implements them, and how you describe them and link them: the meta-model for that model.

That’s the first problem. The second one is taking your 25 years of experience, and Dirk’s been in the industry about as long. Yep. So taking 25 years of experience from two people and distilling it down into those patterns, picking them out of your brains and writing them down, so you get a library of all the valuable patterns you’ve ever implemented.

And then the third one is figuring out how the hell you tell that complex story in a book.

Roelant: A hundred percent. It’s taken us almost seven years, right? So that’s something. And as you point out, Data Engine Thinking is co-written by Dirk and myself, and we’ve both worked very, very hard on it.

The interesting thing is that there has never been a case where we didn’t agree on something, right? It’s like a convergent evolution kind of thing. You work towards something, and people get there, not just us but other people as well; you slowly move towards the approach that makes sense.

So it’s been very harmonious, which is probably the right word to use. We never had any sort of disagreement on how things should be done. There were a couple of cases where we didn’t know something yet, right? A couple of areas that we ran into and said, hey, actually I never thought about that, or I wonder what would happen if that happens.

Then we’d explore that, start coding and write a couple of examples. For instance, cases where timelines or time periods, like start date and end date, were missing or didn’t follow an expected pattern. Or what the consequences are if the source system, the operational system, doesn’t have that time validity.

And really explaining what happens then. Oh yeah, that’s interesting. But never that we weren’t on the same page, so I think that’s pretty special.

Shane: And I also think the fact that you’re creating code and applying it against data scenarios, to prove that both the solution pattern and the design pattern have legs, that they’re real things you can describe, probably helped validate the book as you went. Here’s the design pattern, here’s what we think the solution pattern is, let’s create some code, and if it survives and does what the pattern says it should do, then we know it’s a real thing. Absolutely.

’Cause there seem to be a few books that have come out over the last couple of years where I read them and go, that’s great theory, but where’s the meat and potatoes? How do I do that? Because that seems great, that seems like nirvana, and we’ve been trying to get there for the 35 years I’ve been in data, but we’ve never made it.

So just tell me how to do it, for once. Yep. Yeah. And this book seems to be taking us to that state, right, where there is an example. Certainly the...

Roelant: So in June, I reckon. Yep. We’ve got the Global Data Summit coming up in Iceland, where we’ll present the book and everything, so that’s when the physical copy should be available, and it should be orderable online as well. So that’s gonna happen.

Yeah. But events,

Shane: Yeah, I was hoping to be in Iceland this year, but for a lot of personal reasons it’s not gonna happen. Then I was hoping to be at Helsinki Data Week, and they moved it to when I wasn’t gonna be in the UK, so I missed that one as well. I’m happy that 2025 seems to be the year of the books.

There’s a hell of a lot of really good data books coming out, so I’m hoping 2026 will be the year of good conferences for me. Yeah, see you there. One interesting question Joe Reis asked on his podcast lately, and I’m gonna steal it, thanks, Joe, is: how did you write the book? What was the process you used to write this book?

Can you just talk me through how the two of you wrote it together?

Roelant: I’m not sure we actually followed a process. We’ve both been doing classroom training for a long time. Our focus areas are slightly different, but it’s similar stuff, and the idea of having a storyline that supports the, quote-unquote, materials, the theoretical parts, we agreed on that really early, back in the day.

We thought, ah, maybe we don’t wanna make it like a data notebook or anything like that, right? There are some components of that, but I started writing the main theoretical chapters and Dirk started writing the main storyline chapters, and then we would peer review each other’s work and revise it. Then we swapped roles a little bit, saying, now I’m gonna update that storyline with the theoretical explanation I have in mind and tweak it a little, and then he’d do the same: review, change some of the explanations, or add what he wants to say. So it’s like that, right? We each write a chapter, storyline versus theory, and then we switch roles and do it again.

Shane: So effectively you storyboarded the chapter, wrote in isolation, and then swapped to iterate and align. It would be interesting to see which chapters had close alignment after the first iteration and which ones deviated more than the others.

Roelant: Yeah. Yeah. I think the biggest work we’ve done is on the definition of the architecture and the different options: the big boxes we talked about earlier. That took us the most time to really nail. The other one is the different ways of looking at temporality in data. Those are the really big things.

Shane: Yeah. Seems simple, right? Historization. It seems simple until you engage with the first bit of data. One of the things I found, and it was only after I did the retro on publishing my book that I realized how valuable it was, was creating a course for the content in the book before writing the book, and then actually training people. By creating the content, you are actually drafting the book. You’re just not writing the words: you’re drafting images and talking it through, and then writing the hands-on exercises people do to reinforce it.

Then, as you’re writing the book, you’re flicking back and forth between iterating the course and the book, because you’re learning different ways of articulating things that seem better, or you’re getting feedback on the course that you need to bring in. So that gives you a safe testing ground to learn and do at the same time.

Is that what you found?

Roelant: A hundred percent. Yep. So at some point I started recording the trainings for that purpose. And then after the training sessions, I was updating the notes in the slide decks to find the right words. And that all made it into the book. And at the end, I just opened the training again and started removing slides from the deck once I was happy that the content was sufficiently covered in the book, until there were none left.

Shane: Oh, I hadn’t thought about doing that actually. Yeah. Picking up the course material and deleting it once you know the book’s covered it, to prove you’ve got the coverage and aren’t skipping stuff. Yeah. What I actually found was that I ended up writing more stuff in the book that I haven’t put back in the course yet.

Yes. Because then I’ve gotta extend the course, make it longer, and I’m like, I’m not sure I want to make it any longer. Exactly. Yeah. Yeah. So it’s a trade-off decision. Yeah. Alright. If people want to get hold of you, what’s the best way for them to find you?

Roelant: Yeah, find me on roelantvos.com. That’s my website with the blog, which I have neglected slightly because I was so busy writing the book, but that’s where you can find me.

And also dataenginethinking.com, which is the book website. It has some examples, some sample chapters and some of the personas. So definitely find us there.

Shane: Excellent. And in June it’ll be out on Amazon, no doubt.

Roelant: For sure. Yeah. And we’re also exploring some other platforms, including our own website.

But yeah, definitely Amazon too to start with.

Shane: Excellent. Alright. I look forward to buying that one, and finally getting a design pattern template that I can use repeatedly, so I can stop hating the ones I use currently. So thank you for that. That’s gonna be the best value that I’ve had for a long time.

Roelant: I genuinely hope so.

Shane: Excellent. Hey look, thanks for coming on the podcast and I hope everybody has a magical day.


Stakeholder - “That’s not what I wanted!”

Data Team - “But that’s what you asked for!”

Struggling to gather data requirements and constantly hearing the conversation above?

Want to learn how to capture data and information requirements in a repeatable way, so stakeholders love them and data teams can build from them, by using the Information Product Canvas?

Have I got the book for you!

Start your journey to a new Agile Data Way of Working.