Define Once Reuse Often (DORO) and my friend Disco

It’s amazing what you find valuable the second time around and end up reusing.

We are experimenting with how we can improve ADI in the subdomain of Data Modeling.

As we worked with an Agile Data Network partner this week to help them onboard a new Customer, we decided to use an ADI-first approach for all the data work in the AgileData App and Platform and see what would happen.

And of course part of all data work is data modeling.

It doesn’t matter whether you consciously model data or not: as soon as you transform or store data, you are modeling it.

We prefer to consciously model data instead of letting it happen unconsciously.

So we got ADI to look at the source system data that had been collected into History (data we had never modeled before) and had her take us through the data modeling process.

Our Agile Data Network partner had already worked with the customer to understand their required Information Products and so had a good understanding of the Core Business Concepts, Core Business Processes, Facts, Measures and Metrics that would potentially meet their organisational needs.

This also stopped us from letting ADI define a source-system-specific data model that would break at the first encounter with a Stakeholder’s changing requirements.

ADI did OK, but we always know we can do better, so it was time for a McSpikey.

Agile Data Disco

Last year we did a raft of work for a Customer where we reverse-engineered hundreds of their Cognos Report definitions to help document their legacy data platform before they moved to a greenfield Modern Data Stack. This work removed the need for a team of Business / Data Analysts to spend months documenting the legacy system.

We ended up building this in public and semi-productising it, calling it Agile Data Disco.

You can read about that journey here:

We saw a potential market in solving the data problem of understanding what a legacy data platform actually contains.

https://agiledata.team/data-problems/use-case/legacy-data-platform-discovery/

You can see an interactive demo of the final Agile Data Disco product we built to help us do that Fractional Data Work here:

https://agiledata.cloud/disco/#demo

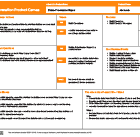

One of the things we did as part of Disco was some interesting prompt engineering to take a single input (say a blob of SQL, a log from SQL execution or the definition of a report) and from it generate a series of useful, populated Pattern Templates as the output.

The outputs we generate are:

Information Product Canvas

Event Model

Conceptual Model

Physical Model

Reporting Model

Business Glossary

Data Dictionary

Metric Definitions

Bus Matrix

Source Mapping
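The Disco prompts themselves aren’t shown here, but the shape of the “one input, many outputs” fan-out can be sketched. This is a minimal, hypothetical Python sketch, assuming each Pattern Template is populated by its own prompt over the same single input. `PATTERN_TEMPLATES`, `build_template_prompts` and the prompt wording are illustrative assumptions, not Disco’s actual prompt or reinforcement logic, and the step that sends each prompt to an LLM is deliberately left out.

```python
# Hypothetical sketch: fan one source artefact out into one prompt per Pattern Template.
# The template names come from the list above; the function name and prompt
# wording are assumptions for illustration, not Disco's real prompts.

PATTERN_TEMPLATES = [
    "Information Product Canvas",
    "Event Model",
    "Conceptual Model",
    "Physical Model",
    "Reporting Model",
    "Business Glossary",
    "Data Dictionary",
    "Metric Definitions",
    "Bus Matrix",
    "Source Mapping",
]


def build_template_prompts(source_text: str, source_kind: str) -> dict[str, str]:
    """Return one prompt per Pattern Template for a single input artefact."""
    prompts = {}
    for template in PATTERN_TEMPLATES:
        prompts[template] = (
            f"You are given a {source_kind}.\n"
            f"Populate a {template} using only what the {source_kind} contains.\n"
            f"Mark anything you have to infer as an assumption.\n\n"
            f"{source_kind.upper()}:\n{source_text}"
        )
    return prompts


if __name__ == "__main__":
    sql = "SELECT customer_id, SUM(amount) AS total_sales FROM orders GROUP BY customer_id"
    prompts = build_template_prompts(sql, "SQL query")
    print(len(prompts))  # prints 10
    print(prompts["Bus Matrix"].splitlines()[1])
```

Keeping one prompt per template means each output can be reviewed, re-run or reinforced on its own, without regenerating the whole set.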

We also did another McSpikey with Disco where I uploaded an image of a completed Information Product Canvas and had Disco generate all of those objects from that one image.

You can read about that one or see the Interactive Demo for it here:

Can you guess where we went with this?

Yup, we took the core parts of Disco and did a McSpikey to see how much of the Disco prompt and reinforcement logic we could reuse to improve ADI.

Results of the McSpikey still to come

I still need to take the time to write up the results of the McSpikey properly, but the TL;DR is:

Reusing the Disco prompt and reinforcement logic delivered massive value in terms of “time to value”.

We ended up experimenting with breaking out an ADI sub-agent, ADI the Agile Data Modeler, which brought some real improvements to ADI’s responses.

A word from our sponsor

One of the things we were asked in the early days of Disco was whether, rather than just documenting what was in the legacy Data Platform, we could automagically migrate it to a new data platform.

When we thought about it, that looked like a very complex problem, one that would take a few years of development to make feasible. We would end up with a many-to-many problem before it was viable: we would need to read from many tools and technologies and also write to many tools and technologies. Until you had critical mass at both the read and write ends, the product wouldn’t be viable.

We also saw data platform modernisation as a great catalyst for organisations to rethink the way their data teams worked, and to rearchitect a lot more than just their database, ETL tool and BI tool. So we weren’t fans of supporting a better “like-for-like” modernisation pattern.

Getting permission to replace your data stack is often the easiest business case to get signed off that also lets you change the way your data team works.

It’s also a way to finally pay back those years of technical debt (by rebuilding it all again).

But we also had in the back of our minds the idea that if we could take a Customer’s legacy data platform and automagically migrate it to the AgileData Platform with minimal human effort, that would be very valuable. It would remove one of the key points of friction in working with potential Fractional Data Service customers who had already invested a shit ton of money in a data platform (legacy or modern).

Because our AgileData Platform is based on our very opinionated Ways of Working, Data Engineering and DataOps patterns, this wouldn’t be a “like-for-like” migration but an automated rebuild from new.

As we experiment with ADI the Agile Data Modeler, we are wondering whether that Agent has some value on its own: upload your current data model and get back a bunch of candidate data models to review.

This wouldn’t just be an LLM going text-to-text; we would extend the reinforcement model we used for Disco and bring our opinionated Business, Concept and Physical data modeling patterns into it as well.

If I have to place a bet (and I do), I am going to carry on with the Context Plane bet.

All the experimentation and development we do in that space is also immediately available in the AgileData App and Platform, so another form of DORO.

But if you think there is value in ADI the Agile Data Modeler on her own, reach out and let’s have a chat. I would be keen to understand the use case you have in mind.

An incoherent stream of Context

You can find all the previous articles with my train of thought listed in this thread:

https://agiledata.substack.com/t/context-plane

We are building the Context Plane while flying it, so we are always looking for early adopters to help us decide the final destination.

If you want a virtual chat, grab a slot here:

https://contextplane.ai/contact-us/#bookemdanno