Well to be more accurate no Data Analyst or Information Consumer cares about them, but your data colleagues do.

The Data Lores

There are a lot of data myths that have become data folk lore and therefore are treated as true without being questioned or tested.

One of my favourites is Data Analysts prefer dimensional models. I call bollocks to that.

A lot of Data Analysts I know just want one big table that has the data they need in it, without the need to go and do endless joins to get access to that data. AS LONG as that one big table has all the data they need and performs well when queried.

And don’t get me wrong, a Data Analyst will prefer querying a dimensional model over querying the relational model of the source system, when that source system model is horrendously complex.

SCD Type 2

The other data myth is that Data Analysts and Information Consumers need SCD Type 2 data on a regular basis.

And now a copy and paste from my AskAI friend ChatGPT 4o

SCD2 stands for Slowly Changing Dimension Type 2. It is a data warehousing technique used to track historical changes in dimension data.

What It Does

SCD2 allows you to:

Preserve past values when an attribute changes (e.g., a customer’s address).

Insert a new row for each change, keeping the old record and marking it as no longer current.

Maintain history of changes over time.

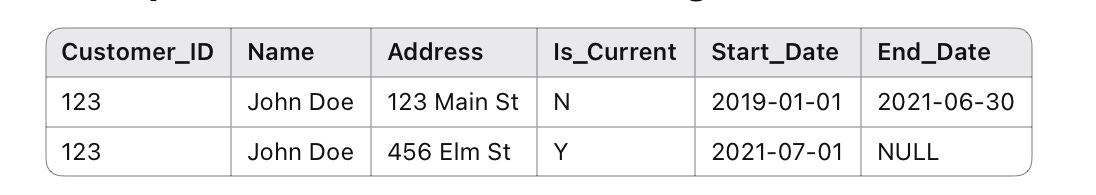

Example: Customer Address Change

In this case:

A new row is added when the address changes.

Is_Current or End_Date is used to indicate which version is active.

When to Use It

Use SCD2 when:

You need a complete history of changes.

It’s important to reconstruct past states (e.g., for auditing, reporting over time).

And back to the human

Again for 80% of their use cases I am going to call bollocks to that as well.

They just want and need the current state of the data. They need to see the data “as at now”

The other 20% of their time, as an exception, they need to be able to see the data “as at a specific point in time”. And this is what SCD2 is a good pattern for.

“What is customer #122345 address right now”

vs

“What was customer #122345 address as at 01/01/2020”

SCD2 is all about us not them

But as data professionals we know that the effort to retrospectively determine and store those historical changes is way more effort than automating that data work at the beginning.

And we know that at some stage we will need to deal with that 20% of the time need for historical point in time use case.

So knowing we need to that data work at some stage, we use the valuable SCD2 pattern (or any of the other patterns we can apply to determine and store historical change data) to do this work early rather than late.

And I have no problem with this, IMHO this is a good set of patterns and an efficient Way to Work.

But then we expose this historical data as the default data in the Consume layer.

And this forces the Data Analysts and the Information Consumers to do more work to use that data.

We force them to apply a filter to find records with “current_flag = yes”. Or we force them to filter the data for “end_date is Null” or “end_date = “9999-12-31”, or we force them to create a window function to find the latest active record.

We do all this because it’s easier for us as data professionals.

There is a better pattern

Give the Data Analysts two options.

Give them a consumable table with only the current records.

Give them another consumable table with all the historical records.

Let them choose which one they use, when.

You can also get fancy and only create one physical table with the historical records and then create a view with the current filters applied. As long as the view performs well enough then that is as good a pattern as any.

Think about it from the lens of the person using the data, not from the lens of you as the data professional creating the data.

It’s one of the many steps you need too do to bring “Product Thinking” to your Way of Working.

I could be wrong

It has been known to happen.

Your Organisation might have a unique context where Data Analysts and your Information Consumers might need that historical data a lot more often than 20% of the time.

Well use the data to see if thats true.

You’re logging all your users queries right?

So do some data analysis on those logs and see how often a current state filter is being applied to your data and how often it isn’t.

Then apply the patterns that are best for both you as a data professional and your Information Consumers.

They after all are your customers, they are the people consuming the Information Products you are creating.

Make their life as easy as you are making yours.

«oo»

Stakeholder - “Thats not what I wanted!”

Data Team - “But thats what you asked for!”

Struggling to gather data requirements and constantly hearing the conversation above?

Want to learn how to capture data and information requirements in a repeatable way so stakeholders love them and data teams can build from them, by using the Information Product Canvas.

I’ve been a data analyst consumer in a large retail organization, and the EDW team provided the current state table, with the historical one suffixed with _HIST. It was pretty straightforward to use & understand, and once I started using dbt and actually running data tests, I helped them discover and debug some issues

This is not a new idea at all. It is at least 30 years old. Maintaining a separate current state snapshot for the high proportion of analysts who only care about current state is a pattern consistent with minimising processing on constrained resources and even more so now when some cloud platforms charge for the entire set of rows in the table not the subset extracted. What has changed is the rise of Data Science driven feature engineering interested in variable windows applied to that history. A similar approach to creating a current snapshot can be applied to maintaining the outputs of feature engineering. If multiple Data Scientists require the same feature, why would you require them all to re-run that process.