We are working on something new at AgileData, follow us as we build it in public #AgileDataDisco

My doesn’t 30 days fly by when your having fun #AgileDataDisco

30 days ago Nigel Vining and I decided to do a 30 day experiment.

We wanted to extend our McSpikey to see if using a LLM to do discovery on a legacy data warehouse was a feasible, viable and valuable product idea.

And we decided to do it in public, posting daily about our journey.

Time is up!

And the answer is ……. yes.

We confirmed it was feasible to build, we confirmed it was viable to build with just the two of us.

And we have just got our first paying customer and a verbal yes from a second, so I think we can say it’s valuable.

There is still a lot to do, but I will revert to my usual posting process of writing whenever something comes to the top of my mind. No doubt some of that will be about AgileData Disco. So keep following along if you are interested.

And we have sponsored a stand at Big Data London in September, so if you want to see the product, pop along to stand Y760 and say hi.

Those that know me well, know I have a thing for t-shirts.

And given we have a new product, I thought it was only fitting that we got a new ADI for this product.

I give you …..

AgileData Disco!

2024/07/05

Product Market Fit is wow

#AgileDataDisco

As in wow that is frickin cool, not as in Way or Working.

For AgileData we have a simple sales message. It will cost you at least $600k per annum in salaries for a data team. We (and our AgileData Network Partners) will deliver that as a Fractional Data Team for $60k a year.

You will also need to pay for a bunch of data technology, data collection, data storage, transformation tool, catalog etc, and then all the ongoing storage and compute costs to run that technology. We provide all that in the $60k (yes you read that right, that includes the storage and the compute costs, we pay for it not you)

You will be amazed at the lack of wow we get from this sales message.

I have been socialising the AgileData Disco prototype and outputs a lot over the last 30 days.

I am constantly hearing people say wow.

And then they do one of two things.

They say, the prototype didn't automagically create it, did it? You did some manual turking to produce that. To which I demo uploading a query log from a BI tool, hitting generate and a few minutes later showing them the output, seeing is believing.

Or they stop and think, and then they come back with a use case they could apply it to right now.

I think that wow is what Product Market Fit looks like.

Next let’s close the 30 days experiment out #AgileDataDisco

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/07/04

Where is my moat?

#AgileDataDisco

TL;DR

There is no moat!

You know when you buy a car. You think oh that's a little different, and then after you buy it you notice hundreds of them on the road.

This is different, everybody is building an AI product, you don’t even need to look around to find them.

It’s so bad I wouldn’t be surprised if when I visit my 74 year old mother next I find she is building an AI product on her new laptop.

Every data vendor is embedding AI into their current products.

Every data consultancy I talk to is using bench time to build an AI powered consulting product.

VC founded AI startups are emerging from “stealth” on a daily basis.

The big LLM providers are releasing capabilities that eat these start-up ideas daily and then provide them as part of their fixed monthly fee or pay per token offering.

The cost of access to these LLM capabilities is so low there is little if any barrier to entry.

In fact you can use a LLM to help you build your LLM based AI product, reducing the barrier to entry even more.

I talk to lots of people and I am finding AI products being built for so many different use cases over and above data.

Our AgileData Disco experiment is based on a language, patterns and pattern templates I have been iterating on for over a decade.

But that is no moat.

I reckon I can use somebody's LLM powered product for a little while and then reverse engineer the majority of their prompts and RAG patterns to have a clone quickly created.

We are using a lot of the AgileData capabilities we have already already built for AgileData Disco, things like private tenancy’s, file upload, Google Gemini API’s integration etc.

But lots of other data vendors have these things.

Our AgileData Network is growing, but it’s not big enough to deliver access to a market that nobody else has, it’s not big enough to give us (and them) a flywheel motion (yet).

There is no moat.

Not for us, and not for most other companies building something using GenAI and LLM’s.

And I am ok with that.

I see enough potential value in what we are doing, to keep investing our time in experimenting with AgileData Disco.

I see ways we can help customers reduce the complexity of their data in a simply magical way.

I can see how it will help us achieve our vision of working with people we respect, who can work from anywhere in the world (we think of it as working from any beach), who get paid well for their expertise and experience, to support the lifestyle they want.

Next my second to last post in the #AgileDataDiscover series.

My doesn’t 30 days fast when you are having fun!

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/07/03

Let’s think about the pricing options

#AgileDataDisco

Here is when the chicken and the egg pattern hits again, without defining the GTM channel its going to be difficult to define a pricing strategy.

Time to brain dump via writing to collate the jumble of thoughts in my head.

A key Product Management pattern for pricing is to focus on the value delivered to the customer not the cost it takes to deliver it.

The problem I have with this is we end up with that ridiculous behaviour I outlined where I would get “custom” pricing for a bottle of milk in a supermarket.

While being profitable is important, we are boostrapping and so need to be profitable as we don’t have VC funding to spend money while bleeding money, Nigel Vining and I want to build a sustainable business we can run for 20 years not a “growth engine” we can flick quickly and move on to the next shiny thing.

Our core values and our vision leads us to a pricing model that means we make good money, but fair money.

We will probably leave money on the table with this approach but I am ok with that.

With that in mind lets list the options we could use to charge for AgileData Disco.

Pay per token used

Pay for a set of tokens in advance

Pay per input uploaded

Pay per input used

Pay per output created

Pay each time its run to produce an output

Pay a fixed monthly fee

I tested these with the leader of a data team asking what their preferences was for paying for the outcomes AgileData Disco delivers, they responded with:

1. Pay per output

2. Pay for a bunch of tokens in advance

3. Pay per token used

4. Pay each time it is run to produce an output

One problem is I am not doing robust research by asking that, I gave a predefined list of options and asked for an answer, rather than explored the problem and value space.

I am clear research skills is a small T for me. But we decided that part of this experiment is a Way of Working that means it's only Nigel or me working on this, so we use the T Skills we have.

If I link this answer back to the GTM channels I thought of in the last post.

#1 Pay per output & #4 Pay each time it is run to produce an output.

Equal some risk to us, we set the price and we wear the overs and unders. We can partially mitigate this by limiting the size of the Inputs, we know the size of the Prompts and the likely Output size so we can estimate the potential cost per Output or cost per Run.

#2 Pay for a bunch of tokens in advance & #3 Pay per token used.

Much easier and less risky for us, we already provide a guesstimate of the number of tokens to be used in the prototype , so we can just times this by a unit rate and wallah.

#1 & #4 seem to align in my head with our current Channel led GTM and #2 & #3 seem to align with a PLG GTM. But I think I am just making shite up with those mappings.

So no closer to a decision.

Next let’s think about the dreaded moat #AgileDataDisco

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/07/02

Next let’s review our GTM channel options for

#AgileDataDiscover

I was going to call this post “lets pick our GTM channel option” but I am not at the stage where I have enough certainty on which option to pick, so I am going to hold off on this decision yet again.

I would love to hear from any Product Leaders who have any suggestions on the patterns they use to make this call.

One of the benefits of building in public and these posts, is the writing forces me to clarify my thoughts. It helps me take the jumble and try and make some sense of it. So let's get that jumble written down.

When I think about the channel options I think about three patterns.

First, we are great at building, ok at selling, crap at marketing.

So I naturally levitate towards Product Led Growth and Growth Marketing, it seems like the nirvana option.

This would require us to make #AgileDataDisco a no touch SaaS product.

We would auto provision, which is ok, we have a pattern for that already. Sign-up is ok, we use Google Identity Manager for this already.

Onboarding would be a lot less complex than the full AgileData capability, we would have a simple flow of uploading a file (query log etc), pick your output types (Information Product Canvas, Conceptual Model, Data Dictionary etc) and then hit generate and wallah!

We would need to build out the subscription management, but we would use a SaaS subscription management solution for that and integrate it, rather than build our own.

That would still leave the marketing problem (unless we hit the magic viral nirvana from listing on Product Hunt which is unlikely). As we are bootstrapping we don’t have the dolleros to spend big on ads and outbound email spam (which we wouldnt do anyway)

Second, we are already building out an AgileData Network of Consulting partners around the world.

We have learnt that providing them with a Swiss Army knife selection of things they can offer their customers, over and above the Fractional Data Service has value. For example the ability to offer the AgileData Courses to their customers.

We could add AgileData Disco to this toolkit so they would be the only people who can use it.

When I talk to new potential partners one of the most common questions (after how much) is who finds the new customers.

This GTM pattern means that effort still sits with them.

Which takes me onto the third pattern.

We offer Agiledata Disco using a freemium pattern.

Same work to be done as the PLG pattern, but we limit how much of the product a customer can use and if they want to carry on, we pass them over to an AgileData Network partner.

This approach would be good for us and the partner, not sure how a customer would feel about it. I need to test that.

Of course it doesn’t solve our fiscal marketing constraint.

So I am no closer to a decision on this one.

Next let’s think about the pricing options #AgileDataDisco

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/07/01

There must be patterns I can use to help us decide our GTM for

#AgileDataDiscover

I spent a short amount of time searching Google and browsing the Reforge templates, but in the end I reverted back to the help of the LLM, this time ChatGpt.

It’s interesting how much easier I find the ubiquitous ChatGPT app which can I access on both my iPhone, IPad and MacBook compared to the browser based interface of Google Gemini when I want to use an AskAI capability for something.

On the subject of GTM, after a bit of prompting ChatGPT replied with:

>o<

Top 7 Steps to Define a SaaS GTM Strategy

1. Identify Target Market and Segments

Conduct market research and create detailed buyer personas.

2. Define Unique Value Proposition (UVP)

Clearly articulate what makes your product unique and develop compelling messaging.

3. Choose the Right Channels

Select and utilise the most effective channels for reaching your target audience.

4. Develop a Pricing Strategy

Offer tiered pricing and consider a freemium model or free trial.

5. Create a Demand Generation Plan

Produce valuable content, optimise for SEO and SEM, and engage through email and social media marketing.

6. Implement Customer Onboarding and Success Programs

Develop a seamless onboarding process and provide robust customer support.

7. Measure, Analyse, and Iterate

Track key metrics, collect feedback, and continuously improve your product and strategy.

>o<

Target market is any organisation that has a legacy or current data platform and no adequate documentation for it, which is a bit broad. Will need to refine this market a lot more as we get deeper into validation using the MVP.

For persona’s I have identified three main data personas that I think the AgileData Disco product will help, a Head of Data, a Data Governance Manager and a Data Consultant. All of these of course have various different roles and titles depending on the organisation they work for.

Funnily enough only the Data Governance Manager is a new persona for us, so there is a risk that I have brought in a bunch of bias for these, time will tell.

For the Unique Value Proposition current draft is:

>oo>

For the Head of Data (or Data Governance Manager or Data Consultant)

Who needs to discover, document and understand their current Data Platform so they can rebuild it or augment it.

The AgileData Disco is a Product

Which automagically discovers and documents a data platform, producing actionable outputs in less than one hour.

Unlike the current manual task that takes multiple people multiple months.

The AgileData Disco Product will:

- Accept multiple input files

- Output multiple pattern templates that are actionable

- Not require a direct connection to the data platform

- Provide outputs that can be stored offline

>oo>

Next let’s pick the GTM channel option for #AgileDataDisco

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/29

GTM, Houston we have a problem

#AgileDataDiscover

While I have worked in the Data Domain for over 3 decades I am fairly new to the Product Domain. There are a bunch of product patterns I have not found or used.

I feel like defining a Go to Market approach is one of those.

I have a myriad of things buzzing around in my head.

Do we go SLG, PLG, CLG, ELG? Are there other LG’s I haven’t found. Are these LG’s strategies, patterns or tactics (and do I need to care about the distinction?)

How do tactics like Freemium fit into it, I am assuming you can only apply a Freemium tactic when you are using a PLG motion, is this true? I have done free consulting in the past as a loss leader, isn’t that freemium?

Is Freemium a tactic?

I think I know why they call PLG a motion, it’s all about the flywheel, do I need to care about the definitions?

I have strong opinions on what I prefer and what I don’t prefer, I have strong opinions on how AgileData will behave and how it won’t behave.

There are patterns that although they are known to be effective, I am adamant AgileData won’t use them.

For example the whole GTM SLG pattern using SDR’s and AE’s, making pricing opaque, so they can make a price up to extract the most value out of the customer.

I dislike that pattern.

I posted this on another LinkedIn thread the other day that was discussing software vendors pricing tactics.

>oo>

I went into the Supermarket the other day to buy some bread and milk #SnarkyMcSnarky

I perused the aisles and found a bottle of milk I liked, I went to grab it but one of the supermarket staff told me to stop.

They took me to a counter where I was asked why I wanted to buy milk, when I was likely to buy the milk, how many people at home were going to drink the milk, how much everybody in the house earned, who’s name was on the credit card I was going to use and had they signed off on the credit card expenditure.

After I had answered those questions I asked how much the bottle of milk was.

I was told to take a seat and wait.

A second person eventually came along with the bottle of milk I wanted.

They brought out a glass, poured some milk into it, swished it under my nose, they took a drink of it and told me how good the milk tasted.

They asked would I like to buy some milk.

I said yes I would, how much is the bottle of milk.

They told me to sit down again and wait.

Eventually the second person came out with a sealed envelope. In it was the price of the bottle of milk.

The price was $10,000

I didn’t buy the milk.

This is the process some vendors take a potential customer through.

Does that sound ridiculous to you?

>oo>

While I have strong opinions, can make a decision and can self justify how I made it, this build in public process is as much about the learning as the outcome.

There must be patterns I can use to help me decide our GTM.

Let’s explore patterns that are available.

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/28

Current AgileData Go to Market

#AgileDataDiscover

I was talking to somebody the other day and they made a comment about startup founders who build the best tool in the world that nobody ever knows about.

When you are building a product you have to care as much about how you will sell it as you do about building it.

I much prefer building over selling.

For AgileData we did a bunch of experiments with our Go To Market (GTM) last year to see where we would place our bet for this year.

The experiments were based on the usual GTM patterns:

Sales Led Growth (SLG)

We have a team of people who find and sell direct to our customers.

Product Led Growth (PLG)

We let our customers serve themselves, they sign up, subscribe and use the product with minimal assistance.

Partner/Channel Led Growth (CLG)

We work with other organisations who sell our product to their customers.

EcoSystem Led Growth ( ELG)

We piggyback on a large technology vendors ecosystem/marketplace and customers find and buy our product via that.

Each of these GTM requires some sort of investment:

For SLG we hire a team of Sales Development Representatives (SDR’s) who do outreach and qualify the opportunity’s before handing it to an Account Executive (AE) who closes the deal.

For PLG we need to spend money advertising so people can find the product, we need to ensure the product is so easy to use that they don’t need any human assistance to use it and they get value out of it quickly so they don’t cancel.

For CLG we need to figure out how to find potential partners, convince them there is value for them if they partner with us, upskill them in our product and then wait until they find and onboard customers.

For ELG we need to pick a large vendor, integrate our product with theirs, validate our product so we can be listed on their marketplace, then wait for the deal to rolling in, or not as the case maybe.

All of these GTM pattens need a way to fill the top of the sales funnel, which is another set of patterns.

Most companies use a combination of these GTM patterns and investments, which becomes complex.

We are always focussed on simplicity over complexity.

So we decided that for 2024 we would place a bet on Partner / Channel Led Growth.

The logic and reasons behind placing this bet will require a much longer post, but one of the key reasons comes back to the vision Nigel and I have for AgileData:

“working with people they respect, who can work from anywhere in the world (we think of it as working from any beach), who get paid well for their expertise and experience, to support the lifestyle they want.”

Now we have the context for the current AgileData GTM bet, lets think about the GTM options for #AgileDataDiscover

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/27

Whats happening in the Data & GenAI / LLM space, an #AgileData #Podcast with Joe Reis 🤓

#AgileDataDiscover

The latest AgileData Podcast has hit the airwaves:

https://agiledata.io/podcast/agiledata-podcast/ai-data-agents-with-joe-reis/

As part of the 30 day experiment we are doing I wanted to understand whats going on in the wider Data / GenAI / LLM space.

A great way to understand this is chatting to Joe Reis to hear what he is seeing and hearing.

Then I thought why not record it as a podcast so we can share his insights.

So thats what we did.

Thank you as always to Joe Reis 🤓 for being so generous with his time!

2024/06/26

Opps where did that pesky data come from?

#AgileDataDiscover

We are hyper paranoid about our customers' data, as any data platform provider should be.

We run a private data plane for our customer tenancy’s as part of our agile-tecture design.

So we of course adopted this pattern for the inputs we used in the Discover prototype to keep them secure.

Even though the input files are secured in a private tenancy we made sure that we only used Metadata as inputs into the Prototype not actual data records.

We believe we can get the outputs that are needed without having to pass the LLM actual data and we know from experience the barrier requiring data has on a product’s adoption.

One of the inputs we tested was using query logs from the BI tools querying the data.

Imagine our surprise when we started seeing actual data values in the LLM’s outputs!

Lots of thoughts surging through the brain, until we realised where the LLM had got them from.

In the Consume view the reports were using we had a case statement. The case statement was being used to map some reference data.

It was an old pattern we used before we built the ability to dynamically collect and manage reference data via Google Sheets.

So of course this reference data mapping was in clear text in the query logs and that is where the LLM was getting it from.

We will have to think about a pattern for scanning the LLM output for data values. Not sure how far we should take that.

The platform is secure, we are using Google Gemini within a private tenancy, rather than passing the inputs over the internet to OpenAi’s API like most other products so we know any data values are secure.

But should we surface them in the output or not.

We will need to get some feedback when we test the MVP for this one.

Feel free to post a comment if you have an opinion.

Next let’s think about our ideal go to market #AgileDataDiscover

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/25

Whats happening in the Data & GenAI / LLM space, an #AgileData #Podcast with Joe Reis 🤓

#AgileDataDiscover

The latest AgileData Podcast has hit the airwaves:

https://agiledata.io/podcast/agiledata-podcast/ai-data-agents-with-joe-reis/

As part of the 30 day experiment we are doing I wanted to understand whats going on in the wider Data / GenAI / LLM space.

A great way to to understand this is chatting to Joe Reis to hear what he is seeing and hearing.

Then I thought why not record it as a podcast so we can share his insights.

So thats what we did.

Thank you as always to Joe Reis 🤓 for being so generous with his time!

2024/06/24

Let’s look at the new patterns we need to move from Prototype to MVP

#AgileDataDiscover

We have been able to use a lot of the patterns we have built into the AgileData Platform and App as part of the Discover prototype.

To move it from Prototype to MVP and add the initial list of features we need, a number of new patterns need to be implemented under the covers, including:

1. Logging of prompt tokens so we can keep track of how many are being used each time we send a prompt off to the LLM

2. Logging of responses from the LLM so we don’t need to make unnecessary requests, we can just show the latest response if its is still applicable

3. Uploading multiple input files and including them in the prompt sent to the LLM, this turns out to have the most complexity as the documentation is very light, and is currently skewed towards processing multiple image and video files

4. Lightly modelling the most extensible pattern to hold configuration data, ie bite size prompts that can be combined to produce different outcomes

5. Parsing and storing the raw markdown responses into separate buckets so they can be surfaced separately in the app.

Most of this activity happens in our API layer as thats where the AgileData magic happens. We add new endpoints when required or augment existing endpoints to deliver additional functionality for the web application.

In this case we re-use our existing file upload pattern but send discovery files to a separate bucket where we can access them from the new interfaces.

We re-use our existing LLM wrapper, but extend it slightly to provide the option to choose which model is called . We use different models depending on their strengths and weaknesses, some are better at ‘texty’ stuff, while others are better at ‘codey’ stuff.

So a few bits of behind the scenes plumbing we need to do to make the product scalable. It’s tempting to bypass some of these for the MVP, but we already know after spending some time using the prototype, that without them we would end up in a world of manual effort hurt.

Scale the machine, don’t scale the team!

Next we will talk about a little surprise we encountered with the prototype.

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/23

Let’s review the feedback so far and the use cases that have started to emerge

#AgileDataDiscover

After talking through the product idea with a few people and showing them the prototype the feedback has been positive.

The uses cases that have come out of the conversations look something like:

Understanding a legacy data platform

An internal data team or a data consultant(cy) wants to understand the current data platform and the human effort to do so is large and time consuming.

This might be supporting a green fields data platform, or making a change in the current data platform.

All the people who have built it have left and there is no upto date documentation.

An interesting variation is the senior leaders in the organisation have left and the new leaders have no idea what was built or why.

In a number of conversations I was asked if we could produce data lineage back to source systems.

The typical approach to do this would be to parse the ETL code or execution logs for the code. I can potentially see how the LLM might be able to create the lineage if we gave it a bunch of the inputs, but I am worried about the level of hallucination it will give back.

Data Governance

This is effectively the same pattern of documenting the data platform, but with slightly different use cases.

A lot of Data Governance teams have been reduced in size and the technical BA team members have been laid off, meaning the Data Governance Managers no longer have people available to manually document the data.

Or the Data Governance Managers have relied on the technical BA skills in the data teams, those teams have been downsized and can no longer do this work for them.

A lot have tried to do the work using a Data Catalog and that work has failed to deliver any results.

An interesting pattern emerged in this space.

Can we upload regulations or policies and determine if the data platform is complying with them? I am going to have to experiment with this one, as I can’t think of a pattern we can use to deliver this use case yet.

Automated Data Migration

As with all things AI there are a myriad of startups experimenting in this space.

As part of the conversations I have stumbled across a bunch doing similar things outside of the data domain. And talked to people who know people who are doing similar things in the data domain.

A use case that came up a couple of times was automating Data Migrations from one data platform / technology to another data platform / technology.

Overall there seems to be a bunch of use cases that people have said will have value to them, let’s carry on and invest some more time and effort into it.

Next let’s look at the new patterns we need to move from Prototype to MVP.

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/22

One response to rule them all, not!

#AgileDataDiscover

As I started showing people the prototype one bit of feedback I consistently got was that having all the outputs in one screen was confusing.

There are a few other problems with the single output model we have initially built into the prototype.

The number of output tokens the LLM will generate is limited, which means the LLM reduces the number of words used as it moves through the output types.

We want the definition of the Data Dictionary to be as verbose as the Information Product, even when the LLM produces it as the last part of the output.

We want to be able to regenerate just one of the output types in isolation, not all the output types. A single output response type makes this difficult and costly.

We want to make it easy to copy and paste one of the output types to another medium, right now I have to copy the entire output and then remove the bits I don’t want.

The single output pattern we have built into the prototype won't allow these things to happen easily.

And also scrolling down a long list of output text to find what you are looking for is just a bit of a pain in the arse.

So we need to break the various output types into their own unique objects.

Luckily its an easy enough change to experiment with (well Nigel made it look easy enough ;-).

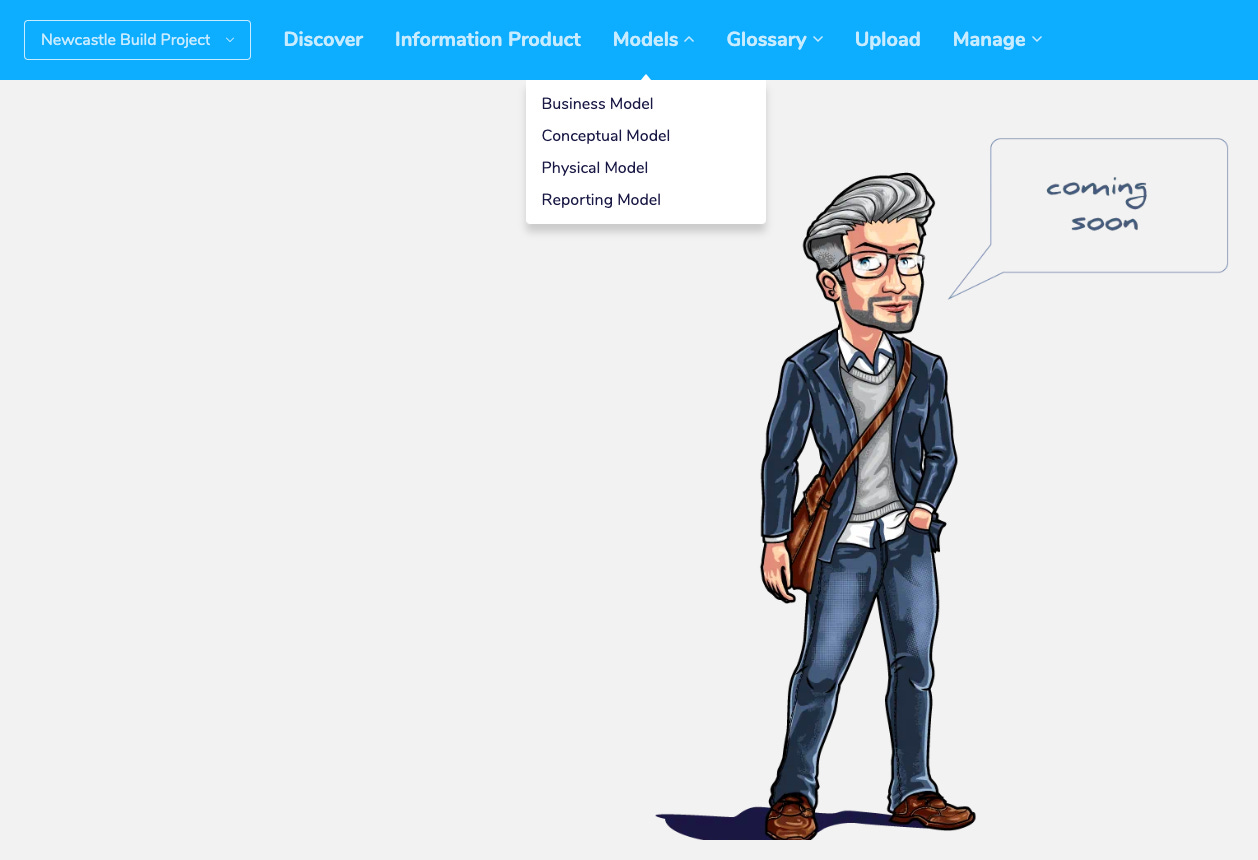

We have already decided to break the Discover menu for the prototype out into its own menu for now, so it’s “just” a case of adding new menu options that only display the response for that output type.

We have split the outputs into the following screens / menus / output types:

Information Product

Models

Business Model

Conceptual Model

Physical Model

Reporting Model

Glossaries

Business Glossary

Data Dictionary

Metrics

Facts

Measures

Metrics

Matrix

Bus Matrix

Event Matrix

Concept Matrix

One of the interesting benefits of this change is it makes it much clearer to explain to people what we are producing and what they can be used for.

I have spent the last decade finding and iterating pattern templates that have value in the data domain. That knowledge and content has been instrumental in defining these output types.

And that clarity has resulted in getting much clearer feedback on what output types we are missing.

Next let’s review the feedback we have had so far from surfacing the prototype.

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/21

Let's sort out that menu problem, for now anyway #AgileDataDiscover

It’s not a big thing, and I know I shouldn’t be sweating the small stuff but I also know that if I don’t it's going to really needle me until I scratch this itch.

And this is where I would normally hand the problem off to our UI designer to use their experience and expertise to solve the problem by using their dark design arts. But with our experiment in our new Way of Working it’s either Nigel or me, or both of us.

A problem shared and all that, so a Slack huddle with Nigel it is.

When talking through the problem Nigel mentioned what I had done to the header area in our marketing website. I have iterated it to use each of our core colours depending on what part of the AgileData product we are talking about, Network, Wow, Platform or App. Why can’t we do that for the App header?

Intriguing thought, we use those core colours heavily in our course material as well to indicate a transaction from one course module to another.

We could spin on this decision for months if we let ourselves, but one of the key things a Product Leader has to do is make decisions quickly if they can, and I can.

So we will introduce a pattern where the App header changes colour when you are in the Discover area.

Now how to get to it?

Well we always talk about Personas, and again we teach data and analytics teams how to apply the persona pattern in the data domain (hell it might even become the second AgileData Guide book ;-)

Let's add the ability to quickly change personas in the app and as a result change the menus that are presented to us so that we can get the job that personas needs to do, done.

And speaking of jobs, on the menu options, job done (for now)

Now let’s move on and sort out the usability of the one big prompt response.

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/20

Protect my top menu at all costs, or not?

#AgileDataDiscover

One of the patterns I have been focussed on since the first day we started building the AgileData product is trying to retain simplicity in the top menu of the AgileData App.

I have been in the software world long enough to remember the days when we had text only screens. I remember when the Graphical User Interface was introduced and we got the little icon instead of text for menus, and then funnily enough we got text as a mouse-over on the icons so we could know what they did. It still makes me giggle when a UI has the diskette icon as the save icon.

I have seen the complexity of menuing systems that come with enterprise products such as SAP and Oracle.

From day one I wanted to try and retain a simple top menu structure, again influenced by Xero. Originally I thought we would need to move the Manage menu to a left menu option at some stage to allow a myriad of options but we haven’t been forced to do that yet. We also haven’t needed to extend the top menu to more than a single level of sub-menu. I love this simplicity.

However we have extended the number of top menu items as we expanded the capabilities of the app. We introduced the Design menu option a while ago, we moved the Collect menu option out from Manage to its own top level position. And we are about to introduce Marketplace as a new top level menu. This will result in 7 menu options at the top and this in my view is the limit.

As part of the introduction of the Markekplace option I am rejigging the order of the menu’s as well.

Until now the order was:

Catalog | Consume | Design | Rules | Collect | Manage

It will become

Marketplace | Catalog | Collect | Design | Rules | Consume | Manage

Previously I had the Catalog and Consume on the left as those were the two menus designed to help Information Consumers.

The introduction of Marketplace is designed to help Information Consumers find the Information Products they need, while the Catalog will continue to help Data Magicians find the Data Assets they need.

As part of this change I have reordered the other menu options to follow the right hand side of the Information Value Stream flow that we teach, i.e Design > Build > Deploy > [Value] > Maintain.

So what to do about the AgileData Discover menu option?

The Discover option takes us into the left hand side of the Information Value Stream flow, i.e Problem > Idea > Discover > Prioritise.

I can predict we may need to break the Discover option out into a series of sub menus, so that means it can not become a sub level menu under Design. Plus Discover is in the left hand side of the Information Value Stream and Design is in the right hand side.

That leaves it becoming a top level menu and that would make 8 and that’s a no.

Ok we need to find a different solution for my menu problem.

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/19

Let’s show a few people the initial prototype and see what feedback we get.

#AgileDataDiscover

I am lucky to have a network of talented data peeps across the world, people I have connected with over the years, people whose opinion I respect.

As part of the No Nonsense Agile Podcast we have a series of awesome guests on the show, and one of those was Teresa Torres. One of the key takeaways I got from that chat was the pattern of continuous discovery, my key takeaway was talk to 3 people a week, and ask them about one thing that has popped up for me that week. Something I have been trying to do for a while now.

I combined those two things and started asking people about their data problems and showing them the prototype to elicit some feedback.

The conversations were enlightening, I can see a few problems we might be able to solve with this thing. Ill explore those in more depth later.

One bit of feedback I got as I started showing people the prototype was that having all the outputs in one screen was confusing, we need to iterate that.

Another thing that frustrated me from day one with the prototype was trying to find the url to demo it. Especially when we have a couple of different demo Tenancy’s. It’s a small thing, but it’s one thing that has caused me frustration and a surprising amount of wasted effort and time in the past, so i'm very conscious of it.

We were always going to have a bunch of decisions to make about where this fits in our current product Information Architecture aka our menuing system.

Let’s deal with that menu problem next.

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/18

Let’s build an initial prototype.

#AgileDataDiscover

We have already done some prototyping as part of the initial McSpikey.

We can provide specific inputs into the LLM, we can give it a specific language to use, we can get the outputs we think have value.

But in the McSpikey we did that manually.

Next step is building out a prototype app that allows customers to do that with or without us.

Here is the first cut of what we built:

https://guides.agiledata.io/demo/clx2hlkru0ibi35xebw371v0f

In this demo example I used a file that contains the Consume query log for my demo AgileData Tenancy.

As part of this step we learnt a lot about the feasibility of building the potential product.

Application Framework:

We need to build, deploy and run a browser based application, this capability is a core part of the AgileData App.

Secure Sign-in:

We need to allow customers to securely sign in, this capability is a core part of the AgileData Platform.

File Upload:

We need to upload input files and store them for use by the LLM, this capability is a core part of the AgileData Platform and App.

Data Security / Privacy:

Even though we wouldn’t be using Customers actual data for the prototype we would be using their logs etc at the MVP stage, we need to make sure whatever we use is stored securely. The AgileData Platform architecture provides a Private data plane for every customer tenancy, we can reuse this for the prototype and deploy a new AgileData Tenancy for each MVP customer in the future.

LLM:

We need to access an LLM via API so we can run it from within the protoype app. AgileData is built on Google Cloud, Google Gemini is the obvious choice of LLM service for this.

We have already used Google Gemini as part of the Customer Trust Rules feature we recently released, we have a lot of the plumbing (engineering) patterns in place for it already.

Prompt Storage:

We need a way to store the prompts we are using so they can be used across all prototype Tenancy’s. The AgileData App stores everything in Google Spanner which is then accessed by the AgileData Platform, so we can use this to store the prompts.

We were presently surprised how many reusable patterns we had built as part of the AgileData, that we could reuse for this protoype and the fact they are all production ready.

Let’s show a few people the initial prototype and see what feedback we get.

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/17

Let’s experiment with our Way of Working while we do this product experiment.

#AgileDataDiscover

In response to my last post on Research led vs Solution led Chris Tabb replied with:

“Do both 🤷♂️

Bet on two horses 🐎

Increase your chance of winning.

Similar to the difference of

👉Logical vs creative”

To which my responses was:

“funnily enough we have been using a hybrid pattern of the two for a while. We didn't end up with the worst of both worlds, but I'm not sure we ended up with the best of both worlds. So it’s time to try something new and see what happens.”

Until now we have been building something to solve a series of data complexities that we have experienced as data professionals over the last 3 decades.

In the beginning the target user for the product was me. If I could do the data work on my own, without having to ask Nigel Vining to write code, then that was the initial goal achieved.

As we achieved that goal we started focussing on three measures of success:

If we could reduce something that takes me 1 hour of effort to 10 minutes of effort, that would be a good thing

If we could reduce the cognition, expertise and experience required to do the data work, that would be a great thing

If we could automate the data work so the machine did it, not a human, that would be a magical thing

As an engineer Nigel Vining doesn’t really care how our product looks and behaves, but I do.

Having worked at Xero briefly in its early days I got to see the benefit of great UX and UI design. So early on we started working with an awesome product designer in Kris Slazinski ☮️

Being a fractional team working in the different timezones, we found the process of great design upfront started to slow us down and we got impatient.

Over the years we had built out a design system with a lot of reusable components and we found we could experiment by cobbling those components together and see if it helped us achieve one of our measures of success.

We started moving more and more towards the MVP pattern for how we built and tested new product features ideas.

So which pattern should we lean into for this product experiment.

Let’s look at the summary from ChatGPT again:

User Research vs. Market Testing:

UX research perfects user experience; MVP tests market demand.Development Time:

UX takes longer; MVP is faster.

Risk Management:

UX aligns with user needs; MVP quickly validates the market.

Iteration Approach:

UX iterates on design; MVP on product-market fit.

Based on our journey todate we naturally lean towards MVP.

We are boostrapping which means we are cash constrained, and we have put a 30 day limit on this experiment so we are also time constrained.

Which naturally leads us to lean into our MVP bias with all the work being done by Nigel and Myself.

Let’s build a prototype to learn what we don’t know and have something to test with potential customers.

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/15

Research led vs solution led

#AgileDataDiscover

Being a co-host on the No Nonsense Agile Podcast I have been lucky to speak to some of the worlds best product people.

I have come up with a hypothesis that there are two different Product Value Streams an organisation can adopt.

One is being research led the other is MVP or solution led.

Research led is where the organisation starts by researching the problems that exist in the world, identify problems that could be solved, ideate how they might solve those problems, and then discover whether those ideas do indeed solve it. Only after all that do they build something.

Solution led is where you start with a problem you know or believe exists, you build a minimal viable product that you think solves that problem, then you test the MVP. You are testing both problem space and the solution space and the value space all in one go.

Given #AgileDataDiscover is based on using an LLM I thought I would ask ChatGPT to given me more details on the differences between research and MVP led.

ChatGPT Prompt:

Whats the difference between a SaaS product company that takes a UX research approach vs a mvp lean startup approach?

Reduce it to 1000 characters

ChatGPT Response:

UX Research Approach

1. Focus on User Experience:

Goal: Excellent user experience.

User-Centric Design: Extensive research on user needs and behaviours.

2. Research Methods:

Qualitative & Quantitative: Interviews, usability testing, surveys, and analytics.

3. Design Process:

Iterative Design: Continuous refinement based on feedback.

Prototyping: Detailed prototypes for validation.

4. Development Cycle:

Longer Initial Development: Extensive research and testing.

High Fidelity: Aligns closely with user needs from the start.

MVP Lean Startup Approach

1. Focus on Speed and Learning:

Goal: Quickly validate business ideas.

Build-Measure-Learn: Rapid development, market measurement, and learning.

2. Development Methods:

Rapid Prototyping: Functional versions to attract early adopters.

Lean Testing: Experiments and metrics for viability assessment.

3. Product Features:

Minimum Feature Set: Core functionalities for quick market entry.

Incremental Improvements: Based on feedback and market response.

4. Development Cycle:

Shorter Initial Development: Quick launch.

Pivot or Persevere: Adjust based on feedback.

Key Differences

User Research vs. Market Testing: UX research perfects user experience; MVP tests market demand.

Development Time: UX takes longer; MVP is faster.

Risk Management: UX aligns with user needs; MVP quickly validates the market.

Iteration Approach: UX iterates on design; MVP on product-market fit.

So which approach are we going to take for #AgileDataDiscover

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/14

Should we experiment with our Way of Working while we do this product experiment.

#AgileDataDiscover

When I coach data and analytics teams, I coach them to reduce uncertainty as much as possible, make things simple and don’t boil the ocean.

This is going to be a case of do as I say not do as I do.

We have a massive amount of uncertainty in what this product is or will be, so the last thing we should be doing is introducing additional uncertainty by changing the way we work while experimenting with the product.

When we designed our product development Way of Working a few years ago we had to design it based on a fractional development team that worked in different timezones. That led us down to a value stream flow of work that was asynchronous and chunked down into UI Design, Backend Engineering and UI Build steps.

We were careful to not create a waterfall process, a process based on large requirements upfront, no discovery and a high cost of change.

But given the need to work asynchronously we naturally adopted a flow based way of working. And the initial flow was UI Design, then Backend build and then UI build.

Overtime we realised this linear flow wasn’t working for us. We were over investing in UI Design upfront, which we then discovered wasn’t feasible to build in the backend or the UI.

We ended up with multiple value stream flows depending on the level of uncertainty in what we were planning to build.

Sometimes we will do a very light UI design, build a MVP backend service, then build the UI, use it for a while to see if it had value and identify what we want to iterate,

Sometimes we will do a light UI design, then stubb a backend service, then loop back what we have learnt into the UI design process.

Sometimes we will build the backend service to reduce some uncertainty in what data structures we need and how we can model it, and then move onto the UI design process, then into UI build.

Sometimes we will build a backend service and cobble together a UI with our current components and no UI design work, to help us reduce some uncertainty on the problem we actually want to solve.

The key to all of this is Nigel and I were the key users, we were the customer for our product.

We knew the data problems we wanted to solve, we had lived with them for over 3 decades.

We were focussed on reducing the time it took us to do data work, reducing the cognition it took us to do it, or automating it completely so the work was done without us. We could measure how well we were delivering these.

For this product we are building it for somebody else, we have a high level of uncertainty, we want to do a high level of experimentation, so we might as well experiment with changing the way we work.

Let’s aggressively iterate the way we work while we do this.

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/13

If this new capability becomes both viable and feasible, where does it fit with our current product strategy.

#AgileDataDiscover

We have a few simple choices:

Treat it as a completely separate product, give it a new name, a separate pricing model and separate Go To Market strategy.

Treat it as a separate module in our current product, give it a new main menu name, add a separate pricing model (unbundling the current pricing strategy). Retain the current GTM strategy for this module or create a new one.

Treat it as a bunch of features in our current product, make it part of the current pricing, make it a feature in our current GTM patterns.

There are a few other drivers I haven’t thought about as well. I immediately start diving down a rabbit hole into a complex multidimensional decision model, and want to reach out to Google Sheets to create a pivot table to manage it.

Sure sign that ugly complexity has appeared, so we need to move back to simplicity.

Lets ignore the Pricing and Go To Market options, we can deal with those later.

Still leaves us with a choice between a separate product, new module or a set of features.

As I have mentioned, we are technologists at heart, we have a small team, don’t plan to ever have a massive team, and as a result of these two things we are great at building, we are ok at selling, but we really struggle at marketing.

Introducing a whole new product would mean we would need to split our limited time and skills across two products.

For that reason alone the separate product option is out, for now.

This is not a decision that is set in stone, this is just a decision made quickly, so we can move onto the many decisions we need to make next. We can always change this decision as we learn more, but the cost and consequences of that change will be greater as we progress further into this process.

Also there is a massive amount of bias embedded in this decision.

I don’t want to have to design and build yet another website, which I would need to do if it was a separate product.

I have seen dual product strategies before, Tableau & Tableau DataPrep, Wherescape Red & Wherescape 3D, and I was never a fan of those strategies.

There are probably a bunch of other unconscious bias reasons why I have discounted this option.

That leaves a decision between a separate module, or just a bunch of features.

For some reason I am reluctant to make this call at the moment, I feel like I need some more certainty before we make the decision.

And that’s ok, there is so much uncertainty in everything we are doing for #AgileDataDiscover right now, we can afford to delay some decisions.

So before we make the decision on seperate module or a bunch of features, I want to explore where does building this fit in our current AgileData Way of Working?

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/12

Next we need to see if we should adjust our current product strategy, or is this just product business as usual.

#AgileDataDiscover

We are lucky at AgileData that we are purely a product company (even though we bundle services as part of our Fractional Data Team offering) and so our business strategy and our product strategy are closely aligned if not one and the same.

We made a conscious decision when we started out to reduce the complexity of our business as well as providing a product that reduces the complexity of managing data.

This aligns with Nigel and my personal goals of building a profitable and sustainable business, which we can work within from anywhere in the world, and which we can be part of for the next 20 years.

Part of our business strategy is to bootstrap our company and keep our team, processes and costs ridiculously lean.

As a result we have always gone for simplicity over complexity, and as a result have a single Product Strategy, and a single pricing model.

We know that most SaaS product companies choose to unbundle their products so they can “upsell” their customers to the next “tier” or by adding access to an “advanced” set of features. To these companies revenue growth within their current Customer base is as important to their reporting metrics as new customer acquisition metrics.

Our view has always been that if the product capability makes the data work less complex, why wouldn’t we make it accessible to our partners, after all that's what we are promising them.

We don”t charge extra for more users consuming the data, we don’t charge more to use the Catalog features etc.

Each time we decide to experiment with something new, we need to review our current business and product patterns and see if we want to change them.

So yes, it’s a good time to review our product strategy and see where this might fit within it, or if we need to iterate it.

Next up is where does it fit in our current AgileData product strategy?

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/11

Next we need to explore the initial viability of the product idea a little bit more.

#AgileDataDiscover

I am lucky I have a network of people I’ve met over the 3 decades I’ve been working in the data domain. They span a breadth of backgrounds, skills, roles, organisations and locations.

Even more valuable is they are very giving of their time, something I am very conscious of and try not to waste.

We really want to explore the viability of the migration to the AgileData platform use case, but we have a hypothesis that Data Governance / Enablement might be the most valuable use case.

There is a growing trend for Data Governance teams to move towards being Data Enablement teams, a trend that was reinforced by an excellent presentation at the Wellington Data Governance Meetup I attended recently where somebody was playing back the key themes from the DGIQ conference in Washington DC last year.

The next step is to see if we can get some quick market validation on the value of the product supporting Data Enablement.

I reached out to a couple of people I know in the Data Governance space to “pick their brains”.

I know I should have taken a more structured product research approach to this rather than just reaching out to people I know.

We are a small team, we don’t have a researcher in the team, I don’t have those research skills, I make do with what I have and what I know. Then I live with an imposter syndrome most founders live with everyday, when I do something that is not a strong T-skill.

Here is what I think I heard:

Data Governance Managers are losing their BA team members as a result of the fiscal downturn, or they are losing access to those skills in other teams as a result of downsizing. They need to do the discovery work themselves.

How can they create this info as a team of one?

Data Governance Managers who get a new role in a new Organisation, are faced with a standing start. If the outputs they need do not already exist, they are blocked until they can get them created.

How can they quickly document what already exists?

How can they create insights into what exists, without directly accessing their source systems or internal data networks?

Starting a conversation with a blank piece of paper is always harder than starting with some known state.

How can they generate insights into the Org and its data, as research for themselves, before starting a conversation with stakeholders?

Data Policies and Procedures often exist but there is a lack of visibility if they are being complied with.

How can they compare what is actually happening with what should be happening?

These seem like problems we can help with.

We have quickly validated the feasibility of the product idea and we think we have quickly validated there may be a market and demand for the product.

Next up is where does it fit in our current AgileData product strategy?

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/10

Next we want to explore the initial feasibility of the product idea a little bit more.

#AgileDataDiscover

As technologists at our core Nigel Vining and I always err towards understanding the feasibility of building something, before we work on the viability of building it.

There is joy in building something that works, compared to getting a constant “no”, or even worse a shoulder shrug, to what you think is a great idea.

To stop us boiling the ocean and spending too much time validating the feasibility of the wrong thing, we have a process where we do internal research spikes, which we call McSpikey’s, to time box the feasibility research and force us to quickly swap back to the viability.

As part of the Greenfields Data Warehouse Rebuild research spike we did for a customer, we tacked on an internal McSpikey we had been thinking about for a while.

For this McSpikey we tested the LLM based on using these inputs:

Screenshots of reports

Exports of report definitions

Query logs from the BI tools

Query logs from the ETL loads

The ETL code

DDL for the data warehouse schemas

DDL for the source system

550 page word document that contained a data warehouse specification

Sets of gherkin templates for transformation/business rules

Sets of data by example templates

Then we created a series of tailored prompts, based on the shared language we have crafted and use when we are teaching via our AgileData Courses, coaching Data and Analytics teams or helping our AgileDataNetwork partners with their Way of Working.

Last we used the LLM to produce these artefacts:

Information Product Canvas

Business Model

Conceptual Model

Physical model

Reporting model

Business Glossary

Data Dictionary

Definitions for Facts

Definitions for Measures

Definitions for Metrics

The outputs the LLM produced were much better than we expected, the key seemed to be the quality of the shared language we used, which we have been crafting and refining for over a decade.

Another interesting discovery was the report screenshots cost us twice as much to process compared to the text based inputs. That's the value of McSpikeys, you always learn something new.

This product idea looks feasible, we should explore the viability of it some more.

Or put it another way, now we think we can build it, will anybody buy it or use it?

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/09

Some background on what led us to the decision to do this 30 day bet on #AgileDataDiscover

#3 Greenfields Data Warehouse Rebuild

One of the things we have found our customers value, is the speed and cost which we can execute Data Research Spikes using AgileData.

We fix the delivery time period to 3 months and fix the cost for the work.

Often customers already have a data team and data platform, but the team is under the pump with higher priority data work and cannot do the research work anytime soon.

The goal of the research work is to reduce uncertainty, or show the art of the possible.

Often a business stakeholder has an idea and wants to know what is feasible, before they decide to invest in it fully.

The Head of Data wants to deliver the research work, but the data team has no spare capacity to do it. The Head of Data prefers the business stakeholder does not independently engage yet another data consulting company with whom the organisation does not already have a relationship and that often treats the work as a black box delivery where they only provide the answer but not the workings, so they engage us.

We have just finished one of these Research Spikes.

The organisation's data team is building a new greenfields data platform to replace their legacy data warehouse.

The legacy data warehouse currently provides over 1,000 separate reports all built in a legacy BI tool. The reports and data warehouse all have variable levels of documentation.

The team knows that a lot of the reports are slight variations on each other, where they have been copied and a filter added, or a different time period hard coded.

But the team is stuck with manually reviewing and comparing each report to see what it does, if it can be consolidated or decommissioned.

The research spike we did was to answer this question:

“Can we use a LLM to compare exported report definitions to reduce the manual comparison work required?”

We received a subset of the report defintions as XML. We received a cluster where the data team had already reviewed them and they were slight variations on each other. We received a second cluster that was based on a seperate set of core business processes.

We then used Google Gemini to compare the report definitions.

The answer to the research question was yes, the LLM approach could reduce the manual work required but could not automate it fully.

When we asked the LLM to merge some of the XML report definitions it politely responded with:

“I can't do that. The reports are complex XML documents that define the data source, layout, and other properties of a report. Simply combining three separate report XMLs will result in an invalid structure.”

There must be a better way to automate this data discovery work.

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/08

Some background on what led us to the decision to do this 30 day bet on #AgileDataDiscover

#2 From Data Governance to Data Enablement

As we are bootstrapping AgileData, every now and again I do a side hustle consulting gig to refill the AgileData funding / runway bucket.

I did a side hustle earlier this year that involved a lot of collaboration with an enterprise organisation’s Data Governance team, well they were called a team by name, but in reality it was a team of one. That one person was frickin awesome, but that is a different story for a different time.

The organisation was migrating from a legacy Data Warehouse to a “Modern” Data Platform.

The Data Governance lead was attempting to enable this to happen as quickly as possible, while putting in place the data Principles, Policies and Patterns that were lacking when the legacy Data Warehouse was created.

As you can imagine being a team of one meant that they could not actually do a lot of the work themselves, and by having no team members they had to ask for other data teams to help with the work. Those data teams were head down, bum up trying to rebuild everything on the new platform so the old one could be decommissioned. They didn’t have a lot of time to put into the Governance data work, and they treated it as not being their work to be done, but work the Data Governance team should do.

We decided to flip the model and move from being a Data Governance team of one trying to get the work done, to being a Data Enabler of one, helping the other data teams collaborate together to share and leverage the data Principles, Policies and Patterns they had each created independently.

We also focussed on helping to unblock those teams when they were stuck with something outside their control and also started a series of capabilities to increase the data fluency across the entire organisation, again to improve the ability for the data teams to have conversations with their stakeholders.

One area that was a real blocker for the data teams was the ability to document the current state of the Data Warehouse, especially based on a pattern of Information Products, identifying what was already available, what was being used, what was adding business value, what could be decommissioned and the effort / feasibility to rebuild those that had ongoing value.

As you would expect, the organisation tried to implement a data catalog solution, which had failed to produce these useful outputs.

The typical pattern of getting five business analysts to spend six months reverse engineering the legacy platform and engaging with business stakeholders to discover this information wasn’t a viable option.

There must be a better way to automate this data discovery work.

Follow #AgileDataDiscover if you want to follow along as we build in public.

2024/06/07

Some background on what led us to the decision to do this 30 day bet on #AgileDataDiscover

#1 Legacy Data Platform Migration to AgileData

One of the blockers our #AgileDataNetwork partners hit when talking to organisations about becoming their Fractional Data Team, is the theory of sunk costs.

The organisation may have already invested in a data platform and maybe a permanent data team to build that platform and deliver data assets. Or they may have already paid a data consultancy to build a new modern data platform for them, and then continue to pay the consultancy for the ongoing data work.

Even when our partners offer to reduce the organisations annual data costs from $600k a year to $60k, the theory of sunk costs still seems to remain.

One of the barriers is the cost / time to rebuild the current data assets and Information Products in the AgileData Platform.

So we have always been keen on finding a way to remove or markedly reduce the time and that cost for that migration data work.

The way we think about it is this:

How do we automate that migration so it is automagic, so that it removes all human effort to do that data work.

We know that 100% automation is probably not achievable but it’s the vision we wanted to strive for.

In reality we would be happy with 80% of it being automated and human effort for the last 20%.

The obvious answer is we just migrate the organisation’s code and we run it on our platform.

The problem with this pattern is we lose all the secret sauce of what we have built in AgileData that allows our partners to reduce an organisation's data cost from $600k to $60k.

So we need to automate the process of discovering the core data patterns in the organisation's current data platform, map those to the core data patterns we have built into AgileData, then automagically generate the AgileData config to apply those patterns.

There must be a way to automate this data work.

2024/06/06

We are working on something new at AgileData, follow us as we build it in public

As part of our constant experimentation we have stumbled on an interesting use case in the data domain.

It's a data use case that has got Nigel Vining and myself so excited that we have decided to dedicate the next 30 days to experimenting with it.

It might turn out to be nothing valuable.

It might turn out to be a set of features in AgileData, or a new module.

It might turn out to be a completely new product.

We have no idea, but we are interested enough in it to bet 30 days effort to find out the answer.

As part of this we are also going to completely change the way we do our product build, for this idea we are going to change the way we work.

As part of this process we are also going to post daily as we decide and execute each step of the product process.

If you want to follow along as we build in public, checkout this post on a regular basis, we will add each days update to it so you can watch as we build and learn.