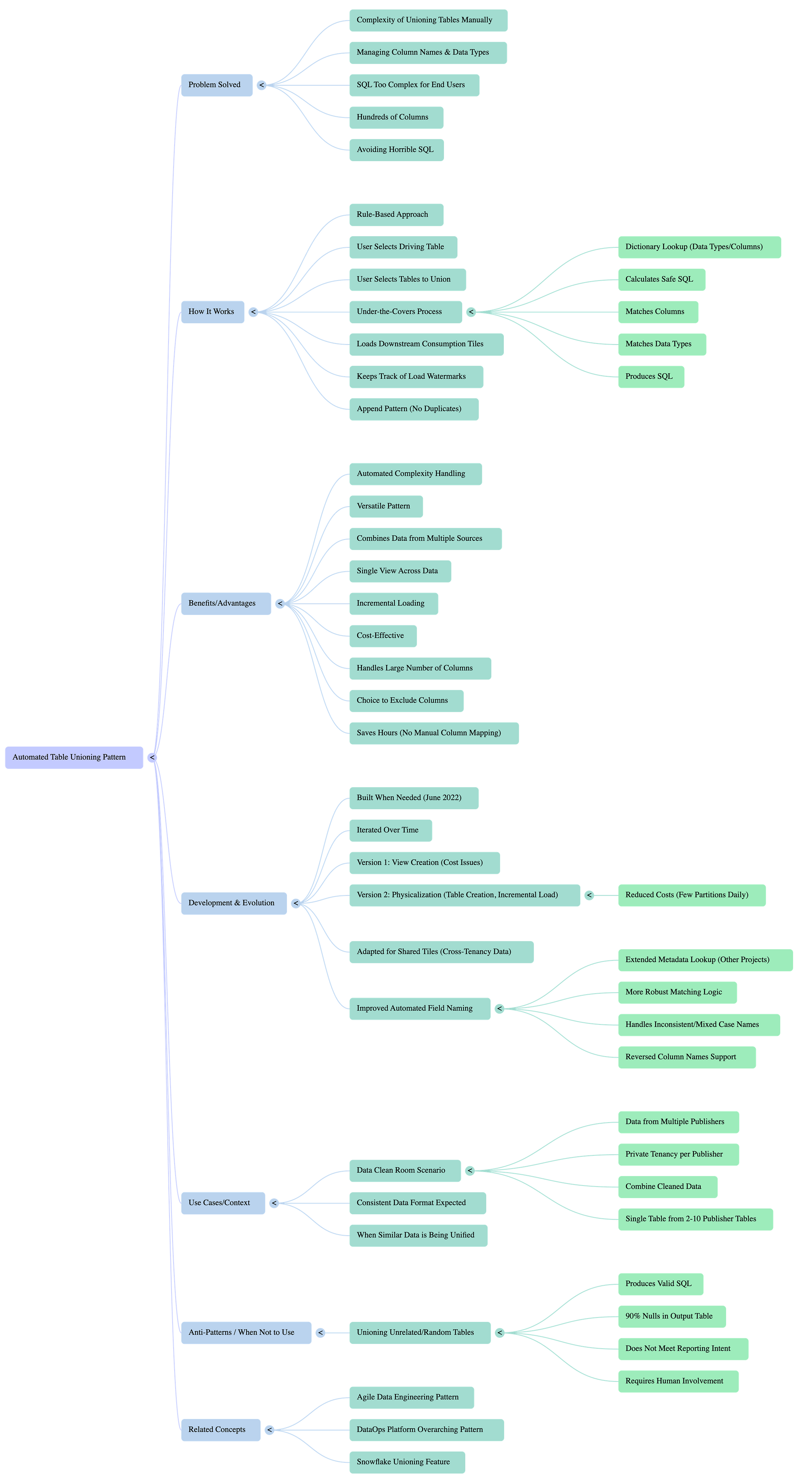

Union two or more tables together automatically, AgileData Engineering Pattern #2

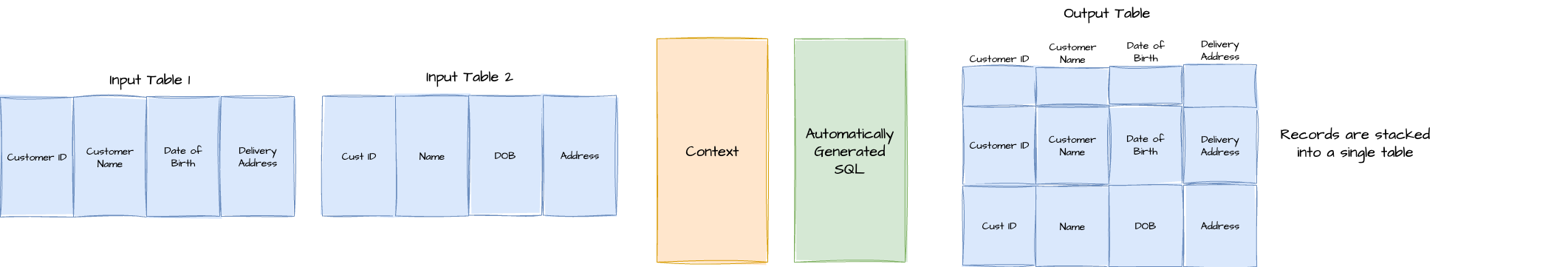

The Automated Table Unioning pattern automatically combines two or more tables by intelligently looking up column names and data types to generate safe SQL under the covers

Automated Table Unioning

Quicklinks

Agile Data Engineering Pattern

An AgileData Engineering Pattern is a repeatable, proven approach for solving a common data engineering challenge in a simple, consistent, and scalable way, designed to reduce rework, speed up delivery, and embed quality by default.

Pattern Description

The Automated Table Unioning pattern automatically combines two or more tables by intelligently looking up column names and data types to generate safe SQL under the covers. It supports disparate data sources, such as those from multiple publishers in a data clean room, and creates a view or incrementally loads the unified data into a physical table while tracking load watermarks to prevent duplicates.

Pattern Context Diagram

Pattern Template

Pattern Name

Automated Table Unioning (or Union Tables Pattern)

The Problem It Solves

You know that moment when you need to combine data from multiple sources into one cohesive view, but the thought of writing complex SQL for unioning gives you a headache? Especially when dealing with tables that have hundreds of columns and potentially differing column names or data types, manually handling the complexity is a huge mission. This pattern solves the problem of needing to "stack together" multiple tables automatically, without requiring users to write "horrible SQL". It streamlines the process of consolidating data from various origins into a single, unified view that end customers can easily consume.

When to Use It

Use this pattern when:

You need to combine two or more tables to create a single, unified dataset.

The complexity of managing column names and data types during the unioning process is too high for manual SQL.

You are receiving data from multiple disparate sources (e.g., different publishers in a data clean room) that needs to be consolidated for a single customer view.

The data coming from these different sources is "roughly similar" in its underlying structure or intent.

You want to avoid writing extensive, repetitive, and potentially error-prone SQL for union operations.

Input tables have a large number of columns (e.g., over a hundred) making manual column mapping or selection impractical.

How It Works

Trigger:

The pattern is initiated when:

The need to combine multiple tables from various sources into a single, comprehensive table or view.

Inputs:

Two or more tables that contain related or similar data.

These tables might originate from different "tenancies" or "projects".

Steps:

User Selection: The user selects a "driving table" and then specifies other tables to union with it.

Metadata Lookup: Under the covers, the system performs a comprehensive dictionary lookup of the data types and column names across all selected tables. This lookup can extend to tables in other projects if shared tables are used, ensuring the most up-to-date metadata is acquired.

Safe SQL Generation: The system then intelligently works out the "safe SQL" required to union the tables, ensuring that column names and data types are correctly matched and aligned.

Robust Column Matching: The logic for matching columns is robust, handling inconsistencies like mixed case or reversed column names, making it "a whole lot smarter" than a simplistic string match.

SQL Execution & Output: The generated SQL is produced and executed, loading data into downstream "consumption tables".

Physicalisation & Incremental Loading: While the first version created a view, the pattern was iterated to "physicalisation," where it creates a new physical table. This table is then incrementally loaded daily, keeping track of "load watermarks" for each source table and safely appending new data without inserting duplicates.

Column Exclusion Choice: The user maintains the choice to exclude specific columns that they do not wish to bring through to the final unioned table.

Outputs:

A single, combined, and incrementally loaded table that stacks data from multiple tables, ready for consumption.

Why It Works

This pattern works like a smart, automated data assembler. Instead of you manually figuring out how to fit different pieces (tables) together, it automatically assesses their shapes (column names) and materials (data types), then precisely connects them. It's akin to having a super-efficient robot manage all the complex plumbing behind the scenes, ensuring data lines up perfectly without any human intervention in the intricate SQL details.

It "just works," providing a "magical" experience by automating a traditionally time-consuming and error-prone task. By automatically handling schema alignment and ensuring safe, duplicate-free incremental loads, it significantly reduces manual effort and improves data consistency, ultimately saving "hours" of development time. The iteration to physicalisation also makes it highly cost-effective for large volumes of data.

Real-World Example

Imagine running a data clean room where data arrives from five different publishers, each in their own private data tenancy. Each publisher's data might be in a separate table, and after cleaning and anonymising sensitive information, you need to combine all these 5 or even 10 tables into a single, unified table for your end customer to report off. Instead of writing a massive, complex SQL query to union these tables—especially if each table has 100 or more columns—the Automated Table Unioning pattern allows you to simply say, "pick this table and union it," and the system handles all the underlying complexity automatically. The result is one easily consumable table for the customer, without the need for manual SQL coding or tedious column mapping

Anti-Patterns or Gotchas

Unioning Unrelated Data: The primary anti-pattern is using this feature to combine tables that have no inherent relationship or similar data intent. While the pattern will technically run and produce valid SQL, if you "grab three random tables and union the hell out of it," the resulting table will likely be "90% null cells" because the columns won't match, and it won't meet any meaningful reporting or analysis purpose. It will do "the job, but probably not the job that you wanted to do".

Lack of Human Oversight: Relying purely on automation without a human in the loop to understand the underlying data relationships and intent can lead to meaningless outputs.

Tips for Adoption

Build When Needed: This pattern was developed out of a direct need when existing methods were too difficult or required automation. Adopt it strategically when a clear problem of complex unioning arises.

Iterate and Evolve: Be prepared to iterate on the pattern based on performance, cost, and changes in other related patterns. For example, the pattern evolved from creating views to physicalising tables for cost efficiency, and its column matching logic was enhanced to accommodate changes in automated field naming conventions or shared data practices

Understand Data Relationship: Ensure the data you are about to union is genuinely "similar" and has a logical relationship to meet your reporting intent.

Leverage Exclusion Choices: Utilise the ability to selectively exclude columns you don't need, which helps manage the width of the final table, especially with inputs having many columns.

Related Patterns

Data Ops Platform: The Automated Table Unioning pattern is an overarching feature within the broader data operations platform.

Shared Tiles: The pattern had to be iterated to work with "shared tiles," where data is safely shared across different tenancies or projects.

Automated Field Naming Convention: Changes to this convention necessitated updates to the unioning pattern's column matching logic.

Append Pattern: The incremental loading aspect of the union pattern leverages an "append pattern" to safely add new data without inserting duplicates.

Press Release Template

Capability Name

Automated Table Unioning

Headline

New Automated Table Unioning Feature Simplifies Data Consolidation for Users, Eliminating Complex SQL

Introduction

We're thrilled to announce the launch of our new Automated Table Unioning capability, a powerful feature that allows you to automatically stack data from two or more tables into a single, unified table. This is designed for anyone who needs to effortlessly stack data from multiple similar tables, such as those from multiple publishers in a data clean room, without having to write intricate or "horrible SQL". It delivers a "magical" experience by making data integration both easy and efficient

Problem

"As a data analyst, I constantly needed to bring together data from different sources that could have over a hundred columns and inconsistent naming conventions. I hated writing pages of really horrible SQL just to union tables, especially when dealing with data from multiple publishers in our data clean room that needed to be stacked together into one single table. It was a huge mission that wasted hours of my time trying to manually map columns

Solution

The Automated Table Unioning feature streamlines this process by allowing you to simply select a "driving table" and then specify other tables to union with it.

Under the covers, the system performs a comprehensive dictionary lookup of data types and column names across all selected tables, automatically generating the "safe SQL" required for the union.

It robustly handles inconsistencies like mixed case or reversed column names, making the matching "a whole lot smarter".

You also have the choice to exclude specific columns you don't wish to bring through to the final unioned table.

This capability also incrementally loads the final output into a physical table, keeping track of "load watermarks" for each source table and safely appending new data without inserting duplicates, ensuring both cost-effectiveness and up-to-date information detected, generally taking care of 99% of common issues without manual intervention.

Data Platform Product Manager

"This capability is a game-changer for our data platform, significantly enhancing maintainability and trust by automating complex data integration tasks that previously required substantial manual effort. It has proven to be incredibly cost-effective at scale and highly adaptable to evolving data patterns and shared data practices across tenancies."

Data Platform User

"This feature is truly magical! I can just pick my tables and say 'union this,' and it just works, saving me hours of tedious SQL writing and column mapping. I get one clean, combined table exactly how I need it, without the headache, allowing me to focus on analysis rather than data preparation”

Get Started

The Automated Table Unioning capability is available today within the AgileData platform. Simply select your desired tables and apply the union rule step to effortlessly stack your data. Talk to your data platform product manager for more details and to access this powerful feature for your data.

AgileData App / Platform Example

Context Set via Change Rule UI

AgileData Sudo Code Generated

GIVEN new or updated records in the consume.adt_products input tile

UNION the consume.dbt_products tile

UNION the consume.xyz_products tile

AND a business effective date of TIMESTAMP(effective_date)

THEN populate the all_products consume tile

USING the replace patternAgileData Podcast Episode

AgileData Podcast Mind Map

AgileData Podcast Episode Transcript

Shane: Welcome to the Agile Data Podcast. I'm Shane Gibson.

Nigel: And I'm Nigel Vining.

Shane: Hey, Nigel. We're onto our second agile data engineering Pattern so today we're gonna talk about union two or more tables together automatically. Or automatically. Take me away with what it is and why I cared.

Nigel: Cool, thanks Shane. This one was topical this week because Snowflake did an announcement around, basically this very feature, unioning tables together and taking care of the complexity of the covers, which I thought was funny because it's something that crossed our paths going back about four years now, we needed the ability to union two tables together, or more, and have the complexity of this taken care of under the covers. Now, the complexity is that when you union two tables together, you need to take into account the column names, and the data types. To basically line all the data up correctly, and usually this is handled in the SQL, but if you have a end user like Shane, working out that SQL's a step too far, it's quite a mission sometimes, considering that some of the tables may have, you know, 100 columns in them and have some different data types.

So we come up with this pattern called basically union tables. and it's a rule. So let's Shane pick a driving table and then effectively pick another table and say union this. Pick another table if he wants and say union this. Under the covers we do a basically a big dictionary lookup of the data types and the columns in those tables.

And then we work out what the safe SQL is to union them together. Basically, make sure the columns match up and make sure the data types match up. And then we produce the SQL and it runs and it does all the normal stuff that loads out downstream consumption tiles. So that's it in a nutshell.

Shane: And, I just, I was just looking at this we actually built it 14th of June, 2022. We released it. So the interesting thing for me is we built that feature way back then because we needed it. It comes back to this, one of the overarching patterns of the data ops platform is build it when you need it.

When something is too hard or needs to be automated, then we build it, we use it. If we don't need it, we don't build it until we need it. So for me, what this allowed me to do was grab multiple tables, tiles in our language and to slam them together. And then I could come up with one view or magically off that and just use it.

And the reason we needed to do that was we were running data clean room. So we'd have data coming in from multiple publishers. Each one going into their own private tenancy. And then once we cleaned all the data and hidden the stuff that we needed to hide from our customer we then needed to slam all those tables together.

So we'd bring in, 2, 5, 10 tables, one from each publisher, and I needed to combine them to be a single table. And I didn't want to write a whole lot of really horrible sequel to do that. So this Pattern effectively allowed me to say, pick this table, and union it.

And then all the complexity is done under the covers.

Nigel: Yeah, exactly right. In the end it turned out to be quite a versatile pattern for that Because yes, we have data arriving from different rooms. As you said. And then effectively you just want to put it all together because the end customer just wants one view across all that data.

So I've already got a consistent format. So. Basically, as long as all the data comes up from the cleanrooms, roughly similar, the union pattern takes care of it and safely puts them together and incrementally loads that table every day because the second part of the pattern, the covers, is it's keeping track of the Effectively the load watermarks for each of those tables in the consume and when new data turns up basically a big append pattern but done in a safe way where we check we never inserting duplicates.

Yeah, it just works. It's it's actually a quite a magical one.

Shane: I was just reading our release notes. We actually iterated it to do that. So the first version of that patent that we did, we actually created a view. Data was stored in the original tables, and we created a view across the top as we got to a large volume of data.

Where BigQuery still ran it really fast, but the cost went up. Then we moved to physicalization, right? We changed it. That actually creates a table at the end and you incrementally load that. So again, we iterated the Pattern as we found something that didn't quite work. Off version one.

Nigel: Yeah, yeah, that's that's exactly right, the cost went up and so we the engineering a little bit and the cost came way down now and now that's quite a cheap pad to run because we only incrementally add a few partitions into that table each day, which is really effective. Okay.

Shane: the second thing we did is as we changed some of our other patterns, we had to iterate that union Pattern. So for example, when we started using shared tiles, so instead of data moving across tenancies, we shared data between tenancies in a safe way. , You had to iterate that Pattern,

And then the other one was we had a automated field naming convention for our consumed tiles. And we decided that actually the readability of those columns, those field names, was not best.

So we flipped it. And actually changed the way we dynamically generated those field names. So again, when we did that, you had to add another set of logic to the union rule so that it could actually pick up both field formatting

Nigel: yes, so there are two things that went on. As you see, the first one was we were unioning in tables from other projects, so we needed to extend the metadata lookup wider into the project that that table was coming from. So we had the most up -to -date list of Column names and data types, so we would pull them straight off the source project and then the second one is.

We changed some of our naming for the tiles going in so which made our the first version was very simplistic match was basically if the end of the string matches then it was the same column name so we made that a lot more robust we rejected that and we basically made sure that the name the names could be a little bit inconsistent with mixed case we Reverse the column names and it would still find the correct match because effectively we made a whole lot smarter.

And that's the version now we've been running probably about 18 months nearly, I believe. And that's still got legs, so we'll just keep running that as is.

Shane: And the other thing you mentioned is some of the input tables we use to union have over a hundred columns because in our consume layer. We effectively use one big table as much as we can. So I'm literally grabbing five tables, each of a hundred columns, saying to a union, it's working out where those columns are the same rack and stacks that data.

And then when the columns are different, it treats that as a separate column. Then I can choose which columns I don't wanna bring through. So I may say don't do these ones because effectively I don't want, a table with 300 columns when I'm racking and stacking it. But again, I get that choice when I use that rule.

So that's really powerful for me. I don't have to actually go through and do a whole lot of colon mapping. The columns are the same. It picks it up. , But I can actually then exclude the ones that I'm like, yeah, I don't need that this time. This one probably saves me hours actually, because I just don't need to care.

\It just does what I want.

So that's the Pattern that we use. When wouldn't you use this Automated Unioning Pattern.?

I think for me one of the ones would be, I know that the data that I'm about to union is similar. Because I know where it comes from. I know what we've done to it. I think if you grab two random tables that had no relationship of the data and try to union them without any. Human being involved to look at it.

So just grab three random tables and union the hell out of it. It'll run, it'll create a consumed table that's actually the union and all of it. But I'm not sure it'll meet the intent of what I would actually need to report off. So I think for me, that's probably an anti-patent, that it just does it without a human being involved in that loop.

Nigel: Yeah, it definitely allows you to do exactly that scenario, put three things that aren't related together, it'll definitely union them, it'll run, it'll produce valid SQL, 90 percent of that produced table will be, No, because they don't match.

Shane: think that's the only one I can think of. The table versus view that was performance. It's not really an, it's just something we need to do to that. And then the name of the columns for helping with the automated matching, they're just sub patterns of the patent itself.

So that's the main one is the ability to use it to slam and rack and stack tables that actually aren't related at all and have had no shared data. It'll run it'll do the job, but probably not the job that you wanted to do. Alright, I think we got that one done. I hope everybody has a simply magical day.