Dimensional Data Modeling Patterns with Johnny Winter

AgileData Podcast #73

Join Shane Gibson as he chats with Johnny Winter about the core patterns that make up Dimensional (Star Schema) Modeling.

Listen

Listen on all good podcast hosts or over at:

https://podcast.agiledata.io/e/dimensional-data-modeling-patterns-with-johnny-winter-episode-73/

Subscribe: Apple Podcast | Spotify | Google Podcast | Amazon Audible | TuneIn | iHeartRadio | PlayerFM | Listen Notes | Podchaser | Deezer | Podcast Addict |

Tired of vague data requests and endless requirement meetings? The Information Product Canvas helps you get clarity in 30 minutes or less?

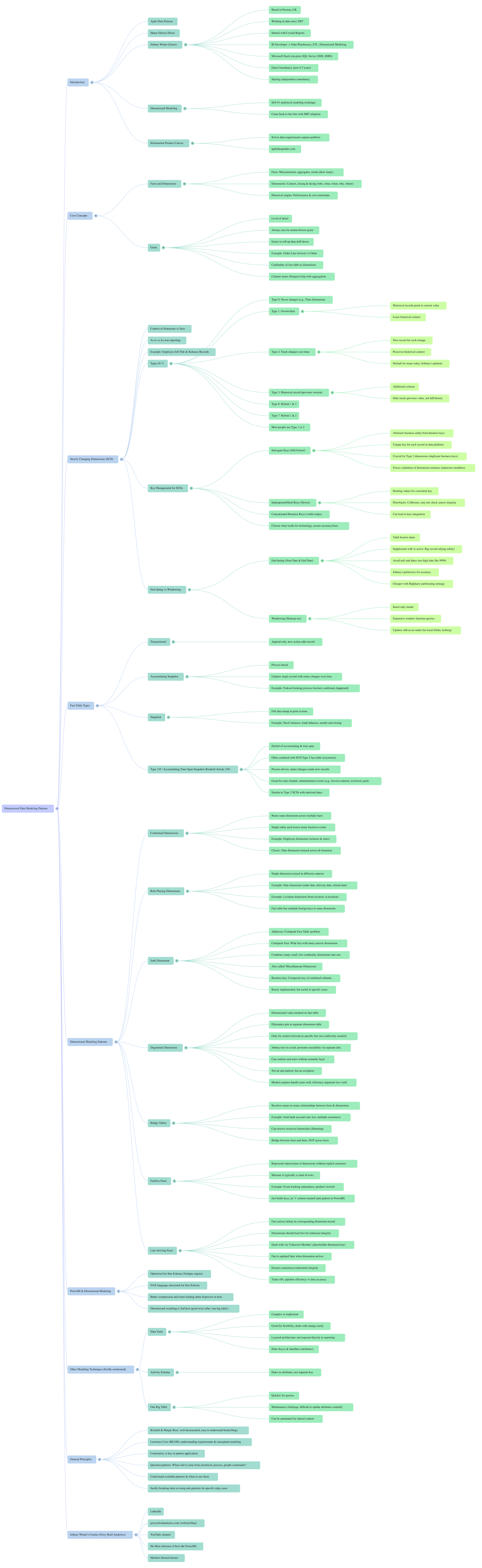

Google NotebookLM Mindmap

Google NoteBookLM Briefing

Briefing Document: Dimensional Data Modelling Patterns

Overview: This podcast episode of "Agile Data" features Shane Gibson and Johnny Winter discussing the enduring relevance and practical applications of dimensional data modelling. Johnny Winter, a seasoned data consultant with a background spanning from Crystal Reports to modern data stacks, provides a comprehensive breakdown of core dimensional modelling concepts, common patterns, and nuances often overlooked. The discussion highlights why dimensional modelling remains the "number one analytical modelling technique in the world," even with the advent of new technologies and approaches like data vaults and activity schemas.

Key Themes & Important Ideas:

1. The Enduring Relevance of Dimensional Modelling:

Despite its age (Johnny started his career when it was prevalent), dimensional modelling is "pretty much still the number one analytical modeling technique in the world."

Its popularity has seen a resurgence with tools like DBT, indicating its continued applicability in modern data stacks.

The widespread availability of resources, particularly Ralph Kimball's books and blog posts, has made it highly accessible and understandable: "Kimble and Margie Ross they wrote a hell of a lot They write lots of books that were easy to read and understand."

2. Core Concepts: Facts and Dimensions:

Dimensional modelling organises data into two primary categories: facts and dimensions.

Facts are the "measurements, the things you actually potentially want to aggregate or trend," often representing "the event." Johnny uses the "how many" from Lawrence Core's 7 W's as a fact. Examples include order value, payment value, or sick days.

Dimensions provide "the context that you apply to those," enabling "slicing and dicing of the data." These relate to the "who, what, when, why, where" of an event. Examples include customer, supplier, employee, or store.

Historically, this separation was driven by "constraints" (performance and cost) in databases, but it still promotes "reuse reasons" today.

3. Grain of Facts:

Grain refers to the "level of detail" in a fact table.

Johnny advocates for modelling at the "atomic grain" or "lowest grain anyway." He states, "You can always roll up grain easily in a query... but it's very difficult to do the other way around."

While historical constraints led to "aggregated facts" for performance, modern "column store databases and now storage formats like paret" make aggregating granular data much easier and reduce storage footprint.

The default should be the lowest possible grain (e.g., "order line" rather than "order") unless there's a specific, justified reason for aggregation (e.g., performance, ease of use).

4. Slowly Changing Dimensions (SCDs):

SCDs address how changes in dimension attributes are managed over time, allowing for "asis and as was type reporting."

SCD Type 0: The attribute "never ever changes." (e.g., a time dimension with 24 hours in a day).

SCD Type 1: The attribute gets "overwritten." Historical records are updated to reflect the current value, meaning "all of my historical records will now point to that value." This can obscure historical analysis.

SCD Type 2: "Allows you to track changes over time." A new record is created for each change, preserving historical context. This typically involves "valid from valid to date or a start date and end date" and a "surrogate key" to uniquely identify each version of the business entity. Johnny notes this is generally preferred now, though some clients still opt for Type 1 for "historical reporting perspective."

SCD Type 3: "History recording except for rather than with a type two where you get an extra record when the value changes you get an additional column." It only tracks the previous version.

SCD Type 6 & 7: Hybrids, often involving "durable keys" for more complex scenarios, but "most people do ones or twos."

Key Management: The importance of surrogate keys is highlighted, especially for Type 2 dimensions, as they provide a unique identifier for each record in the data warehouse, abstracting from the business key which might not be unique across historical records. While "hash keys" or "concatenated business keys" are possible with modern tech, Johnny prefers surrogate keys as they "forces you to go back and look at your dimensions and make sure that your values exist."

End-dating strategies: The choice between leaving an end date as NULL or using a 99999 value, and the concept of "windowing" (which emerged from Hadoop's insert-only preference) versus direct end-dating, are implementation-level patterns that require careful consideration based on technology and cost (e.g., BigQuery's cost optimisation for end-dating). Consistency in these patterns across a data platform is crucial.

5. Fact Table Types:

Transactional Fact Table: "Pretty much append only," recording a single event as it happens.

Accumulating Snapshot Fact Table: Used for processes with multiple defined steps. A single record is updated as the process progresses (e.g., tracking a podcast booking process from invitation to confirmation).

Snapshot Fact Table: A "full data dump every single day," capturing data at a specific point in time (e.g., stock balances, month-end closing balances).

Type 145 Fact Table (Accumulating Time Span Snapshot): Less commonly mentioned, this "process driven type fact table whereby the status of something changes and you get a new record every single time." It's like a Type 2 SCD applied to a fact table, excellent for tracking states in a process like a sales funnel. Johnny calls the common misnomer "SCD Type 2 Fact Table" an "oxymoron."

6. Dimensional Design Patterns (Conformed, Role-Playing, Junk, Degenerate):

Conformed Dimensions: "Reuse the same dimension across multiple facts." This promotes consistency and reusability across different business domains (e.g., a single Date dimension used for sales, finance, and HR reporting). This is a hallmark of dimensional modelling that differs from other approaches like Data Vault.

Role-Playing Dimensions: A "single dimension that can be reused for multiple different things in different contexts." The classic example is the Date dimension serving as Order Date, Delivery Date, Refund Date via different foreign keys in the fact table. Another example is a Location dimension serving as both From Location and To Location.

Junk Dimension: Addresses the "centipede fact table" problem (a wide fact table with many narrow, low-cardinality dimensions). A junk dimension combines "all those little bitty low cardality type things" into "one fat dimension that combines all possible given combinations of them." It's a "miscellaneous dimension" that simplifies queries, though it's "not a particularly common pattern."

Degenerate Dimension: A "dimensional value" or context "only ever relevant in the context of a given fact," so it's "retained it on your facts table instead." This avoids joining to a separate dimension. While it can reduce joins, Johnny "try and avoid where I can" as requirements can change, leading to a need for that attribute in other contexts, making reuse difficult and potentially complicating user queries if there's no semantic layer. Modern analytical engines are also "optimizing their engines for that BI workload for that kind of star schema type shape and it it deals with them absolutely fine," so the performance argument for degenerates is often outdated.

7. Anti-Patterns & Justification:

Single "Thing is a Thing" Fact Table: Having one huge fact table for "every event all at the lowest grain" with highly abstract dimensions (e.g., a "people" dim for customers/employees/suppliers) is an anti-pattern. Dimensional modelling aims to reflect "business language," so dimensions should represent understandable business concepts. Separate dims for Customer, Employee, Supplier are generally preferred.

Joining Across Facts Directly: This is generally discouraged due to "fan trap and chasm trap" issues and differing grains. Instead, "drill across" functionality relies on "conformed dimensionality" and aggregating queries at the dimension level.

Factless Facts: A fact table that "doesn't have any of those things [measures to aggregate]," but "basically just stores the intersection of the various dimensions and ultimately end up counting rows on it to get the facts." It still has a 'fact' (the count of rows). Johnny prefers to just store the keys, not an extra column of '1's, as that's "bloat that you don't need."

8. Advanced Topics & Nuances:

Bridge Tables: Primarily used to "resolve many to many type relationships" between facts and dimensions. The example given is a joint bank account where one transaction (fact) relates to two customers (dimension), requiring a bridge table to resolve this. They can also help with "recursive hierarchies." Shane clarifies, "Bridge tables are a bridge between the facts and the dims not a bridge across facts."

Late Arriving Facts: When a fact arrives before its corresponding dimension record has been loaded. This is handled by assigning an "unknown member" (a default surrogate key like -1 or 99999) in the fact table, and then "rolling windows" or later updates to assign the correct dimension key once it's available. This ensures "referential integrity" even if it means pipelines run "slower and a bit more expensive."

Impact on BI Tools (e.g., PowerBI): PowerBI's Vertipac engine (compression engine) and DAX language are "structured to work with" star schemas, making it "far more efficient" than a single large table for analytical workloads, especially for compression and querying.

Layered Data Architectures: Dimensional models often sit as a "core reporting layer" on top of other modelling techniques like Data Vault (which is typically not exposed directly to analysts due to its complexity for querying). This provides "flexibility" and "context" while making data "easy for the end user."

The Importance of Context and Trade-offs: The choice of pattern always depends on context. "The nuance is in absolutely understanding what patterns are available and which ones to use when." Sometimes, knowingly implementing an "anti-pattern" might be justified for a specific edge case if it "makes sense in the right context and you can justify it."

The Consultant's Mindset: Johnny and Shane discuss the need for a "dimensional checklist" when starting with a new client, to quickly understand their existing patterns and ensure consistency.

Conclusion: This episode serves as an excellent deep dive into dimensional modelling, re-emphasising its foundational role in data analytics. Johnny Winter expertly navigates complex concepts, providing practical examples and personal insights into common challenges and best practices. The discussion underscores that while technology evolves, the core principles of dimensional modelling remain highly effective for building robust, performant, and user-friendly analytical data platforms.

Tired of vague data requests and endless requirement meetings? The Information Product Canvas helps you get clarity in 30 minutes or less?

Transcript

Shane: Welcome to the Agile Data Podcast. I'm Shane Gibson.

Johnny: And I'm Johnny Winter.

Shane: Hey Johnny. Thanks for coming on today. We are gonna talk about the patterns of dimensionally modeling. It's an interesting one for me because I started my career in data when we did lots of three and F, and then we went on to dimensionally modeling.

I'm that old. And then I moved on. I used a bunch of other patterns and I was sitting back the other day thinking, holy shit. I've had a bunch of podcasts talk about modeling patterns, but actually nobody on to describe dimensional modeling, which pretty much is still the number one analytical modeling technique in the world.

Before we rip into that, why don't you give the audience a bit of background about yourself?

Johnny: Yeah, absolutely. So. I guess some people listening, actually, even as you did that intro, all that was going on in my head was the intro music. 'cause that's like a ripping tune and if I ever do my own podcast, like the standard's been set in terms of, uh, intro music.

But yeah, me, I'm Johnny. I, I'm based in Preston, in Lhi in the uk. I have been working in data since I always say 2007, somewhere at my house. I've got a certificate where I went on a Crystal Reports course, so that's where I cut my teeth as a data professional. So basically a report writer, analyst type role using good old business objects, crystal reports, that's where I, my sql then effectively graduated, which think is quite a common path from that kind of sort of analyst type role into more the sort of full BI developer as we called it back in the day.

Moved on to building, developing data warehouses, the ETL and all the dimensional modeling that came with that. Historically that was good old Microsoft stack, so on-premise, SQL server integration services, those kinds of things. As I still did like the reporting services element of it as well. So I did the full sort of end-to-end.

It was before we got trendy and had data engineers versus analysts as it were. I used to do absolutely everything and then, yeah, graduated more into cloudy stuff from a tech perspective. In my last four or five years, I've been working in data consultancy for a couple of different consultancy firms, and I'm a consultant today looking to start my own independent consultancy imminently.

Shane: Wow. Welcome to the chaos that is doing a business, running a business and trying to grow a business. Yeah, looking forward to it. How? How to take a 40 hour job and make it hundred 20 a week.

Johnny: Yeah. So simultaneously terrified and excited. What is it? Find a job you love and you never have to work another day in your life.

I'm pretty sure that it works the other way as well. Find a job you love and you'll never have another day off in your life.

Shane: Yeah, I think the key I say to people is always make sure you have annual leave. The problem with consulting is there's gonna be lumps and bumps. There's gonna be times where you're not working due to no choice of your own, and because of that you tend not to book holidays and then you burn out.

So yeah, just get somebody else to help you run the company, your partner or whatever, and say, this is annual leave. You've gotta take it every year. 'cause otherwise you won't and you're regret it. Crystal reports though, the real question there to age people is was that crystal reports before business objects or after business objects?

Johnny: So in terms of my exposure to it, I started using it just after it got acquired. So I think its Seagate before that. So when I started using it, the company I worked for at the time, there was this Seagate footprint everywhere. Like quite a lot of the system accounts that we'd used for accessing databases.

'cause we didn't do things properly with like you would do today with service principles and whatnot. So a lot of the accounts that we'd accessed, things were like Seagate, everything was labeled Seagate. But yeah, they had just been acquired by business objects at that point in time. I think by the time I stopped using it was just as they got acquired by SAP as well.

But yeah, it was just the customer report side of it. I never really touched like the university side of it, the semantic layer, which is weird 'cause I'm like a bit of a semantic layer geek now. I guess my sort of public persona in the kind of data community, a lot of it has been built around Power bi, which is a semantic model, is like I'm a big fan and one of the reasons that I'm a big dimensional modeling fan as well because it's one of the things that Power BI is absolutely optimized for.

But yeah, never really got to grips with universes. It was just a pure Crystal Reports guy, and at the time it was Crystal Reports always on our LTP databases as well. When I learnt my trade, I didn't know what a date warehouse was. I didn't know what a dimensional model was.

Shane: I think it's back in the days we used to do ods, which were really just near real time replicas or overnight replicas of the source system.

And then you were munging all those horrible tables together and there was no analytical layer as such. It was just bunch of bloody horrible queries, which is where business objects in the universe is actually had massive value. And then from memory, it took them ages to get crystal reports to run against the semantic layer.

It reminds me of SQL Server reporting Services, SSRS, where again, that was direct query against the relational databases. It never really had the semantic layer that you got when Power BI kind of took hold.

Johnny: Yeah. Funnily enough, Krista reports to SQL Server reporting services was exactly the sort of step that I took as I left the role I was doing using Krista reports.

We were actually in the process of migrating to S server reporting services instead. So I had a bit of a foot in both camps to an extent. Definitely from a analysis service perspective, you could connect SSRS reports to analysis services semantic layer really easily. But the crystal reports that I had exposure to is exactly what you described overnight, ODS, just a database replication once a night and then you'd write your reports.

And I got forever frustrated about the fact I'd have to write the same convoluted business logic over and over again for lots and lots of different, similar concepts, similar reports for stakeholders, oh, we need to do this thing, and I'd do it and be quite pleased with it. And it was like somebody else wants something a little bit different, perhaps a variation on it.

And I'd have to. Reuse some of that logic all over again and it sent me down a bit of a, a rabbit hole in terms of trying to research that there must be a better way. And that is when I picked up my first copy of the Date Warehouse toolkit and learned about what a date Warehouse was. And ultimately I actually left that role because the project to implement our first data warehouse spent two years getting off the ground and eventually I ran outta patients.

'cause they'd not even started it by the time I actually ended up resigning because I was like, no, I'm fed up with this. I'm gonna go work somewhere that's actually doing the things I want to

do. Forget the days where we used to spend six months to a year doing requirements and then six months to a year buying hardware and waiting to rack and stack it and get the database installed before we could even start.

Shane: The world has certainly moved in a good way.

Johnny: Yeah.

This is working in defense industry as well. So they were very risk averse and the amount of red tape we had to go through for any kind of IT type projects was just horrific. Anyway, so I get the impression speaking to former colleagues that even with the way the world's gone these days, it's still like that they're still mostly on-prem based and getting anything up and running.

Still very slow. So I'm

glad I got out what I did. Let's go into that idea of data warehousing, dimensional modeling, star schemas, all those good words. Just kinda want to go through and just discuss the patterns. 'cause what we're seeing is quite interesting with the adoption of DBT as a tool. Dimensional modeling seems to have come back to the four.

For those people that are modeling or consciously modeling. Dimensional modeling seems to have come back as again, the number one modeling technique that is used in those kind of modern data stacks. So let's start off at the beginning. Dimensional modeling, uh, has this concept of facts and dims. Talk me through those.

Yeah, that is not far off the standard sort of textbook answer. If you were to describe what is dimensional modeling, organizing your data into two categories of table facts or dimensions, the facts tend to be the measurements, the things you actually potentially want to aggregate or trend. The dimensions are effectively the context that you apply to those.

So I always talk about the slicing and dicing of the data. I think like when you first asked whether or not I'd like to be a podcast guest and talk about dimensional modeling, we talked about the fact that I was going to potentially do a bit of a blog series about dimensional modeling and that perhaps we could then wrap that up as me being a podcast guest.

And I think I wrote part one. And part two still in draft, and I've just not gone round to it. And I was like, yeah, okay, Shane, let's just do the podcast because the blogs are going slow. But I've still been putting quite a lot of thought into what the content of those blogs is gonna be. And one of those is absolutely the sort of Oh, dimensional modeling.

Yeah. It's just facts and dimensions. And then you dive into it and it really isn't, it's actually lots more nuanced than that. So I guess the first layer of the onion is very much the, yeah. Facts, the things that you wanna be able to measure the event almost is the way that I describe it, especially in the sort of Lawrence core business event, E type context.

And then, yeah, the dimensions being, when I think of my seven W's from a Lawrence Corona Beam perspective, my how manys being my facts, and then my other W's, my who, what rental, why wear how. We know those might be the dimensions that are gonna sit around it.

Shane: Yeah. Think about it as dims of things. We want to look at the things we can see, customer, supplier, employee store, those kind of things.

And then the facts are things we wanna count, order value, payment value, those kind of things. Yeah, absolutely. And what we're doing is effectively we are breaking the data out into those types of tables primarily. In the early days it was around constraints. So our databases were constrained in such a way for performance and cost that we couldn't just load all the data effectively into a data like inquiry at willy-nilly.

We actually had to model it in certain ways for performance reasons as well as reuse reasons. And that's where it came from. And so yeah, we've got this idea of dim being a thing and the fact being a measure of those things. And then the next thing that often we need to talk about is grain. Yeah. So as soon as we talk about a fact, we will typically wanna have a conversation about grain.

So do you just want to explain how you think about grain of the facts? In many ways

Johnny: being brutal about it. I don't tend to overthink about my grain of my facts too much and like historically, as you say, from almost like a technology constraints perspective. You potentially end up with aggregated grains and things like that.

But I've always just gone in at trying to always model this sort of atomic grain at the lowest grain anyway. So grain for me is always about level of detail, and when you build a fat table, you've got to figure out what level of detail you're gonna get to. So I'm always aiming to get to the very, very lowest grain of detail I can.

You can always roll up grain easily in a query. You can always do an aggregation on top of a very granular fats table, but it's very difficult to do the way round. So for me, that grain, it's trying to get to the lowest detail of information available for me to an extent in terms of a dimensional model as well, I'm looking at the sort of the cardinality of the facts table to its dimensions as well.

So I want my dimensions for each unit of measurement that's in my facts table should only relate to one value in my dimensions. In an ideal world. We used to

Shane: talk about transactional facts and aggregated facts. The aggregated facts back then was a constraint based model. We couldn't hold all the transactions and then query them, and with an aggregated query fast enough, we needed to materialize it or physicalize the aggregations for a performance reason.

Whereas now, not so much we've got the fire power to be lazy and bring it all through, but we get less work up front. More value down the stream.

Johnny: Yeah, so I think column store from a going down a sort of what I call a techie gobbins perspective, the advent of column stores really help with that column, store databases, and now storage formats like Par k being common based as well does make aggregating data really easy and it helps reduce the storage footprint as well.

So you're getting the best of both worlds with it.

Shane: But if you look at a fact, you're still gonna say, is the grain of that fact an order or an order line, aren't you?

Johnny: Yeah, absolutely. I'd still always strive to just go as low as I can. So in that scenario, I'd always, we should build a order line. 'cause we can always roll up to order.

But yeah, absolutely.

Shane: So treat your facts as the lowest grain possible for now. And then if you have to aggregate or change the grain for another fact table, then you're doing it for specific reasons. It's not anti-patent, but you're applying that aggregated Pattern for a specific reason. Performance, ease of use.

Yeah, something like that. Rather than using it as As the default. Yeah, absolutely. And then the next core word is slowly changing dimensions. Type one, two, what is it? I can't remember. Seven. Yeah, there's all these numbers

Johnny: seven's as far as I've gotten to in terms of understanding them. In fact, I mean I could say that there is no way that I could reel off what all seven are.

'cause they're not. Just not used that much. It's zero index as well. I think sometimes type people forget there's a type zero to start with two. So yeah, dimensions effectively the context of your dimension with regard to your facts and talking about, the phrase I've been using most recently is as is and as was type reporting.

And the one that I like to talk about is like a sickness record for argument's sake. So if you're somebody in HR and you wanted to understand which job titles potentially, or which job roles potentially cause the most illness, and we had a fact table that recorded a transaction every time somebody the other day off sick and then a dimension for the employee.

And one of the attributes of the employee was what their job title is so that you can then slice it and dice it and say, ah, we can see that data consultants have had a hundred sick days this year. Whereas agile coaches have only had five sick days, so maybe we need to give a bit of wellness training to our data consultants.

What you've got to take into account is the fact that someone's job title can change over time. So I'm a data consultant now, but I'm gonna be a business owner. In a couple of months time. You were a data consultant and now you're. Agile data coach ish. So yeah, type zero is the, basically, it never ever changes.

The best example I always have that is the time there will always be 24 hours in a day. There will always be 60 minutes in an hour. There'll always be 60 seconds in a minute. So if you've got a time dimension, once you've defined it, you're never gonna have to change it. Type one is that it gets overwritten.

Me, for example, as a, currently as a data consultant, if my job title changes to business owner, the record just gets overwritten. But what that means is that all of my historical records will now point to that value. So my historical sick record would now have all of my sickness loaded as a business owner.

So it might cover up the fact that data consultant was the thing that was causing the stress. Type two basically allows you to track changes over time. So every time there's a change to the record, it creates a new record with it so that you can basically then say that, ah, all those absences that happened last year.

The job title is data consultant, all the absences next year, not that I'm ever gonna have any, the business owner absences. And then you get a, essentially a more accurate reflection of that particular analysis, but you've always got to bear in mind that isn't necessarily what the users always want.

Sometimes the users want to know what the current is. We speaking with a client the other day and they were talking mergers and acquisitions, and so they were talking about the fact that if a particular entity changed its name, they would always want the historical records to be recorded against the current name of the entity because from a historical reporting perspective, they'd want it to be overwritten.

So you gotta understand it from that perspective. Type three is history recording, except for, rather than with a type two where you get an extra record, when the value changes, you get an additional column and it basically only ever tracks So you the the previous version of it. So you don't get the full history, but you get the previous.

The good fun I've been getting into recently is type sixes and sevens. So type six is a hybrid one and three, and type seven is a hybrid one and two, which is then you get into the realms of the fact that you can do both and starting to use things like durable keys. I'm going properly down the rabbit hole at that point,

Shane: and I think it'd be fair to say that most people do ones and twos.

Very rarely do you go into the other numbers unless there's a really specific use case and then it's well documented how to do it. You just need to know they exist. It's very rare that you are gonna bake that in. And again, I think in the early days we used to have to make a call between ones and twos because again, we had a constraint on how much data we could store.

So we would cherry pick which dimensions. We moved to a type two because it involved more data, more complexity. Whereas now I'm guessing everybody is. Type two in by default and then may surface a type one view. When you query the data, you always just see as it now, and if you want to, you can query another view, which is as at a certain date if you choose it.

Or is that not true? Are people still defaulting to type one by default and then type two by exception,

Johnny: my experience has been more type one by default, type two by exception. Yeah, there's so many nuanced arguments in terms of the way to implement it these days, like if even getting into the realms of persisted keys and things like that.

'cause I'm like an old school surrogate key guy and I believe that's still really important from a relational integrity perspective. You get quite a lot of people who. Prefer the sort of indem key. So basically the scenario where the importance of surrogate key is this idea that it's almost like abstracting the business entity away from the business keys.

The fact that you're basically just having something, a key that identifies a particular record that's unique to your data platform. When you get into the realms of things like type two dimensions, it's really important to have a surrogate key because you're gonna get duplicate business keys or natural keys in there.

'cause every time the record changes, the business key's not gonna change. But you need a new unique key in your data warehouse. Some people are funds of in Keys these days, there's this idea of hashing values to produce a key that's gonna be pretty consistent. There are some drawbacks with that. The hashes aren't always perfect.

You can end up with clashes. I was always coached on the just the incrementing key type approach, and there's loads of people who argue against that these days and what. I've, my experience so far is that people have gotten a bit lazy by using the Indem key value because they can create them on the fly and they're like, oh great, we're gonna have perfect relational integrity because it always, when we use this hash, it's gonna make all the records match, but they're still not checking that they've got that integrity between their data sources.

So you can still end up with missing records and mismatches anyway. So I tend to use the old school surrogate key 'cause it at least forces you to go back and look at your dimensions and make sure that your values exist and that you've got things like unknown members and things like that. I feel like I'm going down like another rabbit hole of just said lots and lots of phrases and.

I take for granted that I know what they mean.

Shane: But the good thing about Dimensional modeling, and one of the reasons I think it is still popular, so popular is Kimball and Margie Ross, they wrote a hell of a lot. They write lots of books that were easy to read and understand. They wrote lots of blog posts, which became books.

Some of those old tips and tricks, we should probably archive them so we don't lose them. If those sites ever go down, they give us really good examples of, if you have this problem, this is how you deal with it. I'm a big data vault fan and we haven't had that gift in data vault land. If you read any of the data vault books, they're pretty dire.

They don't explain things well, in my view, 'cause this is my personal opinion, even though I use the modeling technique all the time, all the tips and tricks, there's very few of them that are usable. And lots of people have tried. So yeah, I think part of it is if you wanna understand what a surrogate key is, get the books or go read the blog posts and it will explain them in infinite detail in a way you can understand.

I think one of the key things you said was the surrogate key Pattern. This Pattern of saying, I'm gonna look up unique business key and then I'm actually gonna store another unique key and maybe an incremental inte key that's just incremented up by one to say in the data warehouse and the data platform that is now the identifier for this customer, this employee, this supplier.

We definitely use different technical patterns. Now can business key, I think is a viable Pattern with the technology we have today. Hash keys. Yes, we have collisions. That is a problem we need to worry about. How often do they really happen? Would we even know? We wouldn't know. There is technical implementations, the ability to wrap.

Trust patterns around it to say, if I've got a key in my Salesforce and I've got a key in my operational system in my Salesforce, it's. Business key one is Johnny, my operational system business key one is Shane. I have to actually do some logic to say that I can't just slam those keys and put them in the same place.

I have to make sure that they're unique. Otherwise I'm gonna do a whole lot of bad things, whether we surrogate, whether we hash, whether we can cabinet business keys, who cares? Pick one that works for you for the technology you're using and then make sure it's accurate, it's trustworthy, it, it's got all that rigor around it.

'cause those are the patterns that actually really count. So then let's go back to the SCD two. So I've got Shane the consultant and I've gone on for my lovely two weeks holiday because I'm working for a consulting company and I actually get leave and then I become Shane, the, the company owner, and I go and take my one day weekend that I get forced to take by somebody else for the year.

We have a bunch of techniques on how we know that record has changed in dating versus windowing. So do you wanna talk through those?

Johnny: Yeah. So in terms of the way I've always done it, the terms of all the implementations I've seen, it's always about having that valid from valid two date or a start date and end date that can be supplemented with it and, and is active flag potentially.

So you always know what the current one is. I've seen different people do them different ways. I've, I personally, for me, having a definitive start date and end date happen works really nicely. One of the patterns that I hate seeing is people who join on the years active indicator. 'cause if you happen to be loading a historic record, if it's a backdated payment, that needs to go back to when you are working as a consultant.

But we. Load it based on the active flood being business owner. For me, that's an inaccuracy. So for me, I would always sort of time bound it between my active windows, and I always hate leaving a end date as null as well, because then you're into the realms of casting that as some high date, just to be able to figure out your date ranges.

I guess it's one of the interesting things about the Pattern is even the patterns themselves have patterns within them. There's that Pattern at a conceptual level, and then there's even other patterns at a implementation level that can differ as well. But yeah, type two. You basically got an active from date and active two dates, what period of time a particular record was

Shane: valid for.

And this is the key is that there's the logical modeling or the conceptual modeling of Dimensional. I've got a bunch of dims, I've got a bunch of facts, and then there's the physical implementation. How am I doing my end dating strategy? So as you said, I was gonna ask you, are you, have you start end dates and if it's a current active record, is you end date an or do you pick a a 9, 9, 9, 9?

Yeah, that's always interesting. And then we got this idea of windowing, which kind of came outta the Hadoop stage. So when we moved from relational databases to Hadoop, one of the problems was actually changing. A record was incredibly expensive. Yeah. So if we landed a record and then we wanted to end date, it actually, it wasn't performance.

So we moved to this upset model, this idea that we wanna be insert only if we can. And so that forced us down the idea of using a Pattern of windowing. We don't ever have the start date. And then whenever we run the query, we'd go back and say, run a window function, and tell me between this period, what is the effectively active record.

And that was an expensive query. So we just gotta make choices.

Johnny: I've seen window in, it wasn't even in a Hadoop implementation, it was just in a relational database where somebody hadn't done an end date. They'd just say same rationale, but for the technology, probably the wrong choice. And even for that, it was an expensive query, which is why I prefer not to do it.

But again, I, I've missed that area to an extent. I went from relational databases and then just leapfrog straight to lake houses and open table formats. And so with your Deltas and your icebergs, that kind of updating historic records. I don't wanna go down the rabbit hole of explaining how it happens, but functionally you can, even though sort of the way under the hood it works is insert only,

Shane: yeah, it is still an update of records in those technologies.

It's still a technical anti-patent for the technologies. It's just that they've worked out ways to make it work and you shouldn't care. You may get a query go from two seconds to 2.1 seconds unless you really care, which you don't at that level. So you just gotta choose a Pattern that works for you. You can do start only dates and windowing and then create views on top, and the view can give you a, an end date on the fly.

There's lots of choices. To make it easy for the end user versus performance, you just gotta pick one. The interesting for us is with our product, the end. Because it's way cheaper. With the petitioning strategy that we use with BigQuery, it's way cheaper for us to end date the records than it is to have a windowing function.

So when we looked at it and cost was key for us, we said, it's a trade off decision we're gonna make. We're gonna go touch that record because it saves us money over everything we do if we use that Pattern. So let's pick one. And then the other key is when you walk into a new site. You now got a whole lot of questions you need to ask yourself.

Are they dimensionally modeling? Great. Okay. What's the typical grain of the facts? Is it transactional? Yeah. Are there any aggregate facts? No. Okay. What's their DIM strategy? It's primarily SCD type one. Okay. How do they make a decision when they need a type two? Okay. When they do a type two, what's their end dating strategy?

Are they relying on active flags? Are they start in dating? How do they fill out the inundated field? Is it a null? Is it a specific date? Are they windowing? Where's the query for the window? Is it left to the user? Is it in Power bi? There's all these patterns within patterns that are known, but as soon as you walk into a place you've never seen before, you have to ask all those questions.

'cause you need to know, you need to follow those patterns. Heaven forbid you have a dimension where one dimension is inundated and another is windowed. Yeah, you, you have to say, what the fuck, what? There may be a reason, but I'm gonna ask really hard questions about why are we using a different end dating strategy for DIMS in the same data platform?

There's gotta be a reason for that. Apart from, oh, a new developer came on, that's why they did it. That would absolutely

Johnny: fry my OCD. Just in terms of consistency. I can, I can get quite opinionated on what my preferred patterns are, and I don't mind being challenged on those, and even the patterns I prefer have some trade offs, and if those trade offs aren't the right trade offs for a client, then it's, okay, great.

If you're wanting to optimize for something. Different toward the kind of default that I try to optimize for. That's fine. But yeah, consistency's gotta be key. It's great though, that little spiel you just went on in terms of all those things you've gotta think of. And in my head I was like, just faxing dimensions dimensional modeling, just faxing dimensions.

Shane: Well actually, I'll tell you what, you've just got me thinking again, so you know how we're working on that data layer checklist. Yeah. I'm wondering if there's like a dimensional checklist when you walk in as a consultant to a site you haven't seen before. You just have a little checklist where you go through and you say, here's the patterns.

It could be, let me just tick some boxes so that I'm thinking about it. The checklist is just helping me think to ask those questions. And I'm like, yeah, I've gotta reset because I've come off a gig for another customer that's got a slightly different Pattern and now I've gotta reset my brain. And as a consultant, that flipping between organizations, that change of slight variation in Pattern sometimes.

It gives you a problem because you haven't reset your brain to the Greenfield.

Johnny: Yeah, I've struggled with it recently with a client.

There's a couple of things that they've done. First of all, are you familiar with this? If you were to look it up on the internet dimensional modeling and fact tables, the common answer say there are three types of facts table.

You've got transactional, you've got accumulating snapshot, and then you've got snapshot. Transactional is almost as it is. It's just pretty much a pen. Only as a new action happens, you keep adding to it. Accumulating snapshot tends to be more sort of process based. If we took booking this podcast as an example, I think you probably asked me in about March.

So you'd create a record that said Shane invited guests for podcasts 1st of March, and then the ball was in my court in terms of committing to a date, and I sat in my hands for a long time, and I think last week was when I was like, let's do it. So that would've been mid-July. So we'd update our record to say, date invited.

Date confirmed and then the date it happened, argument's sake. So 21st of July as it is now. So that's one record, but we've updated it three times. So, uh, accumulating snapshot has been updated based on dates. A clean snapshot, you just take a full date dump every single day. So things like stock balances are normally quite good for that.

Bank balances, maybe things like that. Finance teams are quite keen on the month end closing balances and things like that. So you take a snapshot of the data at point in time. So you've got those three types, but actually there is a fourth type that just doesn't get mentioned very often and I think it's 'cause Kimball only thought about it as a bit of an afterthought.

People refer to it as a type 1 4 5 fax table. So have you heard of this Type 1, 4, 5?

Shane: No, I haven't actually, but I'm like, wow, what a great name you already for. You already blown you had to give a thing a stupid name. Yeah,

Johnny: yeah. So the reason that, to be fair, Kimball didn't call it a type one four five, but it's just data models have, have gone with that because all of the supplementary information on the Kimball Group.

All of the blogs are numbered. So article number 1 4 5, if you were to look it up, basically refers to this fourth type of fact table, which is a accumulating time span snapshot. So we've basically taken two outta the three types of facts table and mashed them together somehow. The other thing that people call 'em, which really grinds my gears 'cause it's complete oxymoron, is they'll call it an an SCD type two factor table.

And it's okay. SCD is dimension tables and this is a fact table, so it can't be a type two, but the reason people get confused with it, 'cause it works the same way, it's like a process driven type fact table whereby the status of something changes and you get a new record every single time, but it's a change in status.

They're really good for processes. Things like a sales funnel or something like that. If you've got a particular opportunity that might be you get a lead and it's a lead between the 1st of January and the 1st of February, and then the 1st of February it becomes a qualified lead and then after two or three weeks of talking, it then becomes a proposal.

And then maybe it becomes a sale. So you've basically got the same record that goes through four different statuses. And then rather than capture that as making it wide and putting extra columns in to represent each stage of the process, you basically create new records and you have a start date and an end date.

A associated with a record, like a type two, slowly changing dimension.

Shane: That's what I call an administration event change. Invoice entered, invoiced, reviewed, invoiced approved, invoiced, paid. 'cause normally it would be a bunch of columns. And now I've got a whole lot of SQL Magic I've gotta do if I want to go and grab those columns and make them rows for whichever bi tool I'm using.

So what you are saying is effectively we get a new row for that dimension key. When there's a state change, we have a date for that state change. What about event slamming? So let's say I've got a, an event of something ordered. I've got an event of a payment, I've got an event of a delivery, and I've got an event of a refund.

In my understanding with standard dimensional modeling, I'd have four fact tables. I'd have an audit table, a payment table, a delivery table, and a refund table. Is that true?

Johnny: Oh, it's classic consultant answer at this point. And you definitely, it depends. My personal preference, when I design, and this is not so much a Kimball thing, but more of a Lawrence core and beam type thing, I always tend to conceptually model them as separate events and then having modeled them as separate events, if they're conformed dimensionality.

There's another great soundbite for you, conformed dimensionality. It's on my list. Yeah. If their conformed dimensionality is identical or so close to identical that it's not gonna make a difference. And if their grain is also identical, then I may well model them as a single table. But it depends.

Shane: Okay.

But if I had a fact table, that thing is a thing. One fact table and in my whole warehouse, 'cause one fact table, that's every event, all at the lowest grain. That's an anti-patent with, with dimensional modeling, we don't have fact tables that are thing is a thing. So let's say I've got a fact table with three keys.

Thing one thing two thing three. Yep. And thing one goes back to a dimension. And that dimension is a bunch of keys and then types. So that dimension holds employees. Suppliers, customers. And there's a typing on it. And then thing two is the event. So it goes back to another dim, and that DIM holds all the records and they're typed by order, payment, refunds, delivery, packing, all those kind of things.

And then we've got thing three, it's some kind of location dim. And so in, I go back to a dim table and I've got a bunch of keys and it's store every store in the world and then every website and every URL. So I'm modeling at the highest level of extraction where I've effectively got three dim tables and one fact table.

And everything's a thing, is a thing that is an anti-patent for dimensionally modeling. Dimensional modeling is designed to pick up some of the business language. Yes, I should look at a dim and actually understand. That's something I can look at and understand that it holds a bucket of things that are different from a bucket of other things in my organization.

Yeah, I agree. So do we still see dimensions of people, which is customers, suppliers, and employees? Or would you tend to model a customer dim and employee dim and a supplier dim?

Johnny: It always goes back to me to an extent in terms of speaking with my business users and how they reinterpret them for that kind of example, like almost unquestionably they're gonna be separate dimensions.

That's gonna be an employee, supplier and a customer. Yeah. Almost undoubtedly that's three separate concepts. Likely coming from three separate, possibly separate systems, but certainly if they're all in one system, separate tables. Where it gets fun is when you get into the realms of, so example I'm working with at the moment, and we've got this idea of a researcher dimension and a researcher is actually a subset of employees, but it's okay, should we still have an employee table and a researcher table?

We, we typically tackle things like that with role playing dimensions and having an employee dimension that can be filtered and may be obstructed in a view as different types and things like that.

Shane: Yeah, and it's the same with suppliers and customers. Do I have an org dimension that is effectively role playing customer supplier?

It is choices. They're, as long as they fit the dimensional modeling Pattern, you've got a dim, you've got a fact. You're deciding the grain of your fact. You're deciding what form of society changing your dim is. You've decided how you're gonna manage your keys. It's okay. Right? You now just come into some choices about which Pattern works best for you.

Let's go back to a couple of those things you talked about. Let's go through conform dimensions first and then role playing dimensions next, because they're key terms that we'll see in dimensional modeling all the time.

Johnny: Conform dimensions. So this idea that you can reuse the same dimension across multiple facts.

So if we had a sickness data model and we had. Periods of sickness, and we had our employees so we knew which employees had been sick. You could use that employee dimension with your sickness facts and use that as part of a modeled business process. And then you can do analysis on that. If you had a completely different business domain, let's go with sales, and you wanted to be able to analyze sales, and you wanted to be able to see which employees had sold the most of a particular product, you wouldn't remodel your employee for that sales domain.

You would use that conformed dimension. So this idea that this single entity can be used across many business events effectively. So the classic ones date. Like a date Dimension is, I think not far off every dimensional model ever. Design always has some kind of date dimension attached to it, and you're likely just gonna reuse that date dimension, be it a finance domain if you were doing financial reporting or if you're doing sales reporting, or if you're doing marketing or anything HR related.

Writing and maintaining a single date table means basically that date dimension's conformed across all of your potential facts.

Shane: And that's one of the things that's, I know if it's unique to dimensional modeling, but it's definitely one of the things that you do in dimensional modeling that you don't tend to do in some of the others.

I'm data vault modeling or I'm activity schema modeling. For me, dates are just attributes of a thing. I don't hold a hub and set for dates and data vault. I don't hold dates as secondary key in activity schema. So in dimensional modeling you will typically see a date D, which holds that is, and it's used across every fact table.

Yeah, so another kind of unique thing, I dunno if it's unique 'cause I haven't actually seen every model in the world, but definitely a Pattern of a dimensional model is a date dim and role playing. Talk me through roleplaying dimensions.

Johnny: Yeah. Roleplaying is the idea of a single dimension that can be reused for multiple different things in different contexts.

Date is actually quite often a role playing dimension because we took into account the example we talked about before, where you might have an order date, a delivery date, a refund date. Typically, you wouldn't create three specific date tables relating to that. You'd have one date table that you can relate to your fax tables via.

A variety of foreign keys and you'd reuse that single dimension in different contexts. Dates a really typical one, but even things like trying to think of good ones. I recently, I did a supply chain type thing where location was really important, but it was always from location and a there, or a shipping from one location to a different location.

But actually they could always be going in either direction. So rather than having a from location and a two location dimension, we just had a location dimension with everything in it. And then we just roleplay to it, depending on the context of whether it was the destination or the origin.

Shane: And so if I look at the pen itself, it's effectively in our effect table.

We have two columns with keys, but both of those keys come from the same dimension. And it's the typing of the dimension. So another one would be, if we ever wanna see customers and suppliers in the same fact, we might have an organization dim and it's typed by customer, supplier. And then we'll see those keys in two different columns in the fact table.

Possibly let's go out through the rest of the weird dimensional thing. So Junk dimension. Talk me through that one. That's a cool name, right? That's better than fact. 1 4 5. Junk Dimension.

Johnny: The junk dimension. At the same time it's a terrible name 'cause it makes it sound really throw away and not particularly valuable.

So going one back from Junk Dimension. Have you ever come across the concept of a Centipede factor table or is that a new one on you? That's a new one on me. Cool. So a send speed facts table is where you end up with a facts table that's got lots of different context around it and the context is very specific and granular.

So you end up with a wide fact table that's got lots and lots of dimensional keys in it. And then the dimensions, it's joining to end up being very narrow dimensions with only a few attributes on them. And you end up with basically to write any given query having to do lots and lots of joins all over the shop.

And they call it a center speed fax table because if you think about an ERD, the fax table ends up being very long and thin with lots of relationships coming off it like little center speed legs. And they're difficult to use because your dimensions are spread across so many different sort of categories.

They're difficult to navigate. You've gotta write these really unwieldy SQL queries where you've got lots and lots of joins and they only tend to be like joins that are one hop. We're not talking snowflake. And so they're not that bad from a performance perspective, but from writing them they become unwieldy and you can cure that.

By, there's a couple of things really. If you've got any sort of commonality between those lots and lots of small dimensions and you can perhaps denormalize them into a single entity that works nicely, but sometimes you just can't, and almost the way around that, the idea of this junk dimension is to take all those little titty, bitty, low cardinality type things and actually just create sort of the, the product of them all into one fat dimension that combines all possible given combinations of them so that you've got this one entity that you can navigate through.

It's almost like a miscellaneous dimension is almost one way that it feels could be a good way. In fact, miscellaneous dimension for me feels like a better description than a junk dimension, but it's almost this kind of just group all these things together because lots and lots of little. Homes for them doesn't make much sense.

So we're just gonna stick them in one big group together and allow people to query and buy that instead.

Shane: And so how does the key for that work? Because now we've got a bunch of things that kind of aren't the same. So we're still gonna surrogate it with an incremental key, but the business key's gonna have no real relationship.

Johnny: The business key ends up being just a composite key of every column, and that's how I've always done them. It's any given combination of a set of different things. I'm trying to think of a good example of it. So I would say in my life, I've only actually implemented junk dimensions. It might only be like twice.

I don't find it a particularly common Pattern, but I'd know how to do it if I needed to do it. And I think I'd recognize the need for it if I saw it as well. And sometimes I struggle to get my head around why I would understand that. I'd see a centipede fact table and be like, there must be a better way to consolidate this and make.

Easier to navigate.

Shane: So it's in your toolkit. It's not an anti-patent. Yeah, yeah. It's a Pattern you use, but you use it very rarely. It's only when you go, ah, actually this is gonna cause me a problem if I do it the normal way. Let me use that alternate Pattern and bring it in. And then degenerate dimension.

Johnny: Yeah. So again, degenerate dimension is like another one that I was gonna be on my blog series in terms of, oh, it's just facts and dimensions, isn't it? So degenerate dimension, different people seem to have different interpretations of them. My interpretation, effectively, it's a dimensional value, it's context that would ordinarily exist, auto dimension, but you just retain it on your facts table instead.

So you basic. Get rid of the need to join out to a separate table to use it. The reason for it being a piece of contextual information that is only ever relevant in the context of a given fact. 'cause at that point, if that given dimension is never ever gonna have any kind of conformity with all the things, then you may as well just eliminate the need for it.

Again, if I give you that description, theoretically you could end up with very wide degenerate dimensions, which I definitely wouldn't recommend. 'cause then you end up with a wide facts table. So if it's only a small dimension where the context of it only applies on a given facts, then yeah, you use 'em as degenerates.

Again, this almost feels like a bit of a personal preference type thing, but it's a Pattern. I really try and avoid where I come. 'cause the typical thing that happens for me is that you go through the requirements gathering and you come across this particular concept and it feels like it's a good fit for degenerate dimension.

And then you go to your customer and your client and you discuss it. Conceptually with the mental, does this thing only ever exist in this context? And they say, yep, absolutely. So you do it as a general degenerate dimension, and then the next requirement comes along and all of a sudden, oh yeah, we need that piece of information relating to this different factor, a different grain as well.

And at that point, people start trying to join facts tables together, which is a discouraged practice as well. I always try and even if it's quite a high cardinal analysis, even if it's gonna be almost one-to-one with a factor table, I will try to avoid degenerate dimensions if I can. Just, just promote that reusability and all the processes

Shane: at a later date.

Again, it's not an andan, but it's a Pattern that's used. Really, it's an exception. You have to justify why using that Pattern. It's a Pattern that I use rarely. It's a Pattern that I see everybody else use all the time, and then I bang my head against the wall. One of the problems with degenerate dimensions is you think about it as you are querying the data.

You're a user coming in and you don't have a semantic layer, so you're hitting straight against the dimensional model as your semantic layer, and now you've got another rule. So the first rule is you join your DIMS d fx. Grab your fact table, you join it to the DIMS you want and you're effectively just creating a de-normalized one big table is what you're gonna get back with those queries.

And then you are saying, oh, but actually you probably need to check the fact table to make sure there's no degenerative dimensions, because if there's an attribute you need, and it's actually in the fact table, not the dim, now you've gotta go and actually do something different to your query. So you are changing their query Pattern from the standard Pattern to a Pattern plus, and now they've got to remember to check whether that attribute's sitting in the fact table or not.

Versus attributes always sit in a dim. And so again, we don't have the ability to template our code as much. I suppose it's only a second Pattern, right? Run this query and it's got degenerates. But why, again, why are we justifying, slamming the attribute on the fact table when attributes go on a dim, maybe back in the days when we had constraints, but we don't intend to have those now.

Johnny: Yeah, I mean, the other sort of fallacy that I hear off spouted is that in the world of spark engines and parallel processing and those kind of things that oh, it's more efficient to keep it on the flat table. And I get the impression that maybe that was true. Four or five years ago. But all of the major players these days are optimizing their engines for that bi workload, for that kind of star schema type shape, and it, it deals with them absolutely fine.

I've had it a couple of times recently, it's, oh yeah, we need to reduce the number of joins, and it's like, why the engine can deal with it now. It's not as big a problem as people think it is.

Shane: I think, again, there's lots of those patterns where we have a preference and we try and justify it for the technology that we built that preference from.

But things have changed. So test it. Just run the experiment. See, simulate the volume of data you think you're gonna have and the types of crew you're gonna run and run them and see which performs better. Okay, so you, you talked about joining across facts. So again, there's this idea of was it a bridge table that allows you to slam.

Facts together or join them. I never quite remember that one. Talk me through. Yeah.

Johnny: I've never used bridge tables to span facts. 'cause ultimately that's just a conformed dimension that's effectively, Kimball will talk about this idea of drill across. And the way to drill across is, again, it's really strange.

I found myself in this position where I've lived and breathed this stuff for so long. I find it sometimes difficult to put into words exactly what's meant by some of it. Kimball puts it into a really great description in terms of this idea of drilling across factor tables and this idea of have you come across things like the fan trap and the chasm trap and all those kind of things like that.

And that fact tables tend to be at different grains, and if you join them together, the cardinality is not gonna match. But if you basically route your queries and your dimensions and then aggregate across facts, it works absolutely fine. It's always gonna depend on how the fact tables have been structured to an extent.

But as a rule, if you basically structure your queries that way, that's how they're designed to work and how they're decided to drill across, and that's what you conform dimensionality does. Bridge table's an interesting one. Bridge tables are more, again, one of the patterns I talked about and when we talked about defining grain, this idea that for every unit of measurement in a fax table for every transaction, it should have a one to one relationship with its dimensions.

It's not always the case. The classic example is bank accounts. So I have a joint bank account with my wife. If a bill goes out. On a direct debit for a mortgage, for example, that's two customers associated with it. It's one bank account, but it's two customers. So you've almost got to have a bridging table that can resolve that so that your fax table only has one transaction and it can basically join through a bridge table and resolve out to those two customers.

So that's where bridge tables mainly get used. So I went down a real rabbit hole, and again, this is for me the fact that I take pride in this sort of amount of experience and knowledge of a master round dimensional modeling, but I'm definitely not encyclopedic. So it's still for me, hang on. I've got a problem.

I dunno how to solve this, but I have got a shelf of books and in those books are patterns. So the one I was trying to solve recently was around hierarchies and the best way to resolve recursive hierarchies. You can just flatten 'em out, which kind of works. If they're not fixed depth, that can get a bit messy, but, but.

Does work, but there's also patterns you can use with bridge tables that will basically help you resolve recursive hierarchies as well. So that's the idea that we're talking about resolving many to many type relationships, and you basically end up that every level of the hierarchy becomes its own record, and then the bridge table resolves it back to the fact.

Shane: Okay, so bridge tables are a bridge between the facts and the dims. Not a bridge across facts is what you just said. That's how I've always used them.

Johnny: I feel like I'm gonna have to get my head in my books and see if I can find any examples of bridge tables between facts.

Shane: I can't even remember what they were.

And then I just, I just think about bridge tables from data Vault and then, so I'm, I'm applying a different Pattern for that name in my head.

Johnny: I can say it's so bizarre. My first foray into data platforms after having been a report analyst was Kimball. And every subsequent organization I've worked in, and I think you alluded to the fact that it's probably the number one applied Pattern in the data industry.

It's all I've ever known. I've got real blind spots for data vault. I can talk about hubs and satellites and vaguely sound like I've, I know a little bit, but I would not have. The foggiest how to start, they've had to do a data vault implementation,

Shane: and it's a different language and it's the same patterns to a degree.

So with data vault, effectively we just take the dimensional key and we make it a hub. So it holds the key only, and then we take the attributes out of a dim and we make those SATs, but we can add more than one SAT table for a hub. So again, it's a very similar Pattern, but it's different and the language is different and the patterns are slightly different.

And so you've gotta reset your brain and then you go to activity schema. And that's, again, it's very similar but very different. And again, like you said, there's uh, the depths and the breadth. You can do dimensional modeling with some of the core patterns. You'll do really well and then eventually you'll hit an edge case where you need some of the more of skill bands you need to know they exist.

But as you said, there's some good books, there's all the Kimball books, there's all the blog posts. And then with Lawrence Coors stuff, half his book is Beam, which is the stuff I use around the who does whats and effectively understanding requirements and concepts and conceptually modeling. And then the other half of his book is how to apply all that to Dimensional modeling.

And I remember in the course there's quite a large part of the course is around ragged hierarchies and yeah, how you model those in dims and I'm, I don't care. I'll just use an OLA queue back then. It takes care of it for me. So yeah. Alright, let's get onto what I think will probably, the last one I can remember, which is Factless facts, which kind of sounds dumb.

It's kinda like factless facts. Yeah, totally.

Johnny: I'm trying to remember who I was talking to this about the other day and we just basically decided that's just a nonsense, not just thing is a factless fact table. The fact table still has facts in it. It's just that there are no values that you're gonna a.

That you like. So the fax basically represents the intersection of all the dimensions and ultimately you end up pretty much counting the rows. That becomes the measure at a given intersection. Quite often when I do basic star scheme examples, I'll fall back on a sales example. It's in online retail for a long time.

And so a typical sales fax table might have sales amount, it might have cost, it might have margin, it might have order quantity. These are all things that you're gonna be able to add up an average, things like that. A factless fact table doesn't have any of those things. It basically just stores the intersection of the various dimensions and ultimately end up counting rows on it to get the facts.

Shane: With a factless fact table, would it just hold the keys or would you have a column with one in every row?

Johnny: I would just have the keys again. So I've seen that Pattern as well when people just put a one in. So from a power BI perspective, that's considered an anti-pattern that's just bloats that you don't need.

It's just a count of the rows. It's you don't need the one 'cause you just do account. Account star or

Shane: account one. So let's go into that for a second. So everybody tells me, not everybody, but most people tell me that Power BI is far more efficient when you use the star schema versus one big table or anything else, but they never gimme the context.

And I'm like, is that when you're not using direct query and you're actually bringing the data back into the Power BI layer? Or is that when you are using DAX or when you're creating a semantic model? Where is the thing that says Power BI works best with a star schema?

Johnny: So I feel like I'm gonna have to shout out a couple of Microsoft Oh Form MVPs now I think.

So there's a Chap Kon kbi. Dutch guy and his catchphrase is that you must star schemer all the things. And he's like a massive advocate of it. And he made stickers and t-shirts and all these things. Star schemer, all the things. And then there's a chap bent, I'm gonna butcher his name, which he'll kill me for if he listens back to this.

So he is Belgian Benny Dre, he is on the power bi cat team and he did a conference session that was taking the mick out of K bit. Basically the session was called Star Schemer, all the things. But why? And it was really fascinating 'cause it actually dug into it and did a load of tests. So some people were like, oh you need to use a star schemer 'cause you'll get better compression out of the, so Power BI is built on the Verta pack engine, but it's basically the compression engine for it.

Oh. You get better compression so you'll have a lower memory footprint and you a load of tests versus one big table where you basically proved, yeah that's not really the case. And it was like, oh start scheme will load quicker. 'cause if you've not got less data redundancy and you did a load of tests and it, that wasn't really the case.

The main thing is that DS is a language. Is structured to work with it. Well, it always strikes me as chicken and egg like in terms of is Dax built to work with star schema? Almost That kind of, what was the way around? I was thinking about it the other day, that effectively, did they make power BI to be optimized for star schema as opposed to people saying that our star schema is what's optimized for Power bi.

The other soundbite I came up with the other day is that Dimensional modeling's, probably the second best Pattern for everything, speed-wise. One big table, a former colleague who did his thesis on it, star schema versus one big table, and I was like, oh yeah, one big table is loads better. Was like, why is it better?

It's quicker. Okay. What about from maintenance and reusability and then when you get into the realms of that, actually, yeah, one big table's good and quick and easy to query, but I've got to have a different, one big table for lots and lots of different things. And then if I need to update a particular attribute, I'm gonna have to update it in lots of different places.

And then again, not being an expert on it, but my impression of data vault is that if flexibility wise, it's really good. It deals with change lots really easily. People say it's complex to implement, I've never done one. And that potentially querying it because there's a lot of joining. Your tables can be quite complex and difficult to navigate from a analyst perspective.

Shane: Yeah, so let's talk about that one. 'cause that's a really interesting one. And it's true. You typically use data vault in a layered data architecture. What Joe Reese calls mixed modeled arts. So we would typically never expose the data vault structures as the core reporting layer. We would dimensional it with one big table it, we would activity schema.

We'd do a whole bunch of things to make it easy because joining lots of tables together as an analyst is an anti-pain, in my view. You're getting 'em to do work that the machine can do for you. And then everybody goes, oh yeah, but everybody's can understand how to query a star schema. And I'm like, yeah, they can if you train them.

Yeah, and they can still get it wrong if I give them one big table, if I give 'em the table with a grain and all the columns in it. As long as I don't give them 2000 columns, it's much easier for them to query. Now. Yes. What happens now is if my only Pattern is one big table, if I write 5,000 DBT models that do all the transformations in code with no segmentation layering, no shared reuse, no shared context, and I'm creating nothing but 10,001 big tables, that's a bad Pattern.

But if my code is effectively my model, the context holds the model, and I'm just hydrating the one big tables at the end, and every time I do a change, those tables are automatically refreshed with those changes. Not a human, then that's a Pattern that works. If I'm data folding and then dimensioning, and then one big tabling.

It's a Pattern. I can automate that and I can hold context and I can get the machine to do all the changes for me when I change that context. So that's my view. And I was always intrigued with power BI dimension. That's the norm. And that's fine because with the norm there's lots of good articles, there's lots of things that have been written.

There's lots of people that can help you if you get stuck. And if you don't follow the norm, it's a little bit trickier, but why is it the norm? And the same with DBT. For people that now consciously model rather than unconsciously model dimensional seems to be the flavor they use the most. Now why is that?

Is it because the information is easily accessible? Is it because that's the things that people are being trained on the most? And our days Dimensional became so popular because Ralph Kimball did a lot of training. You could always go on a dimensional training course. It was easy to get hold of somebody that would teach you that.

Not so much with the other courses, but he doesn't do the training anymore. Yeah, he's been retired like a long time. Yeah. And then Margie Ross took over, but she's retired now. So actually, who's doing the training? 'cause it's not the people who invented the patterns, but maybe that's it. Maybe the training is still more accessible or the books are accessible.

It's intriguing. How is that still the modeling Pattern and it has value. It's a really valuable Pattern. It's not my favorite Pattern. I'll be honest about that, but that's just my opinion. Like you said, you, you don't use a lot of junk dimensions or degenerate dimensions. That's just your choice. That's how you mod.

Yeah, and that's fine. You're making conscious decisions around that, which reminds me, there's what I missed a late arriving facts. Sorry. That was the other one that we probably need to talk about. So

Johnny: the way I always interpreted them was this idea that you are, and, and I guess this is a Pattern that we've not really discussed, is this idea that you'd always load your dimensions first and then load your facts afterwards.

And part of that is to guarantee you've got that sort of relational integrity. And again, this goes back to that Indem key argument and the fact that I prefer to look up my keys after the event. So if you're gonna look up your keys when you create your facts, tables means you've always got to load your dimensions first.