Patterns to define the ROI of a data product with Nick Zervoudis

AgileData Podcast #74

Join Shane Gibson as he chats with Nick Zervoudis about patterns that you can use to quickly and easily define the ROI of your data products, before you build them.

Listen

Listen on all good podcast hosts or over at:

Subscribe: Apple Podcast | Spotify | Google Podcast | Amazon Audible | TuneIn | iHeartRadio | PlayerFM | Listen Notes | Podchaser | Deezer | Podcast Addict |

You can get in touch with Nick via LinkedIn or over at:

Tired of vague data requests and endless requirement meetings? The Information Product Canvas helps you get clarity in 30 minutes or less.

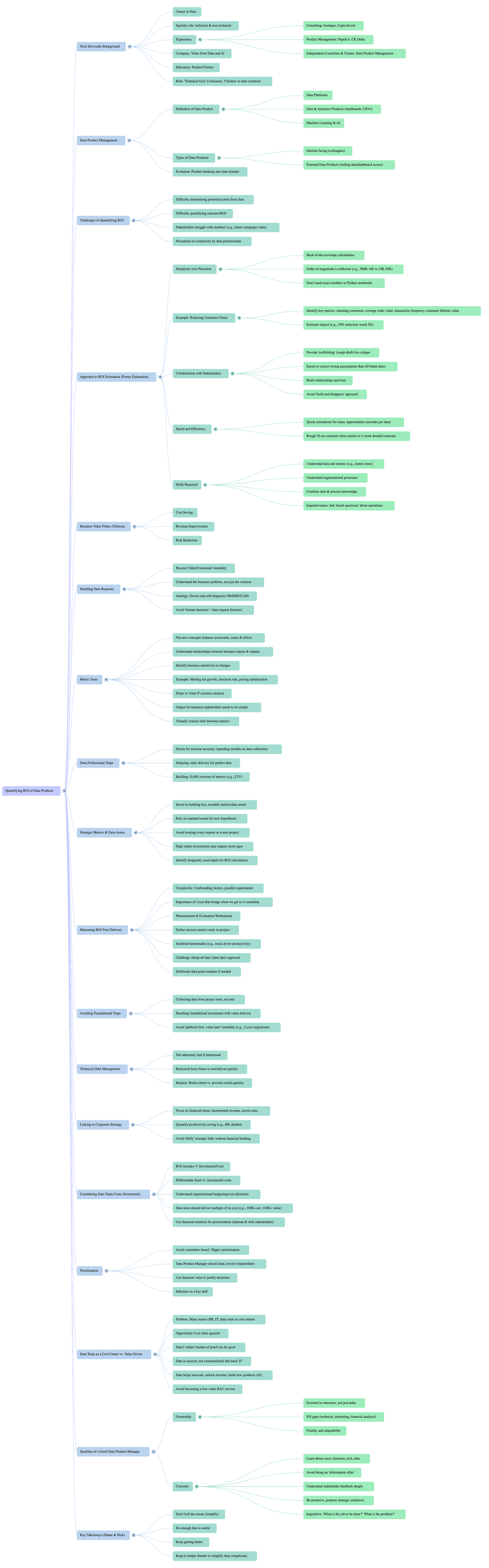

Google NotebookLM Mindmap

Google NotebookLM Briefing

Briefing Document: Quantifying ROI for Data Products

Source: Excerpts from "AgileData 74 - Patterns to define the ROI of a data product with Nick Zervoudis"

Speakers: Shane Gibson (Host), Nick Zervoudis (Guest, Independent Consultant and Trainer, Founder of Value From Data and AI)

1. Introduction: The Challenge of Quantifying Data ROI

The podcast episode highlights a common and significant problem in the data domain: the difficulty in quantifying the Return on Investment (ROI) for data projects and products. Organisations often struggle to move beyond identifying potential actions and outcomes from data to actually assigning a monetary value to those outcomes.

Core Problem: Stakeholders can describe the desired action and outcome (e.g., "reduce customer churn," "increase sales"), but "then you get crickets" when asked to quantify the financial impact.

Nick Zervoudis' Background: Nick has spent his career in data, bridging the gap between technical and non-technical people, initially in consulting (including Capgemini Invent) and then in data product management (PepsiCo, CK Delta). He's now an independent consultant, emphasising "value from data and AI." His experience spans internal and external data products, including data platforms, analytics products (dashboards, CSVs), and machine learning applications.

2. The Shift to Data Product Thinking and Value-Centricity

The speakers note a growing, but still evolving, trend towards applying product management principles to data. This "data as a product" approach is seen as crucial for addressing the ROI challenge.

Product Thinking for Data: "It's interesting that there's this move in the last couple years to bring product management thinking or data as a product that way of working from the product domain into the data domain. And I think it's been great. I think we've seen some real changes..."

Value and Customer Centricity: While some companies have embraced this for 25 years, many are "laggards" slowly adopting a "value centric and customer centric way" of working with data.

Moving Beyond "Feature Factories": Data teams often act as "feature factories" or "data request" fulfillers, building what stakeholders demand without understanding the underlying problem or value. This leads to unused dashboards and wasted effort.

3. Key Strategy: Fermi Estimation for ROI (Back-of-the-Envelope Calculations)

A central theme is the importance of using quick, rough estimations – "Fermi estimations" – rather than striving for perfect precision at the outset.

Fermi Estimation: Named after Enrico Fermi, who made quick, order-of-magnitude estimations (e.g., the TNT equivalent of a nuclear blast). The goal is to get the "order of magnitude right," not exact numbers.

Simplicity is Key: Data professionals often overcomplicate ROI calculations, thinking they need "exact numbers" and "the same rigor as a lot of the other data work." Instead, "a lot of the time all you need is a back of the envelope calculation."

Example: Churn Reduction: If stakeholders want to reduce churn, even rough estimates of customer lifetime value, churn rate, and potential reduction (e.g., 10%) can quickly reveal if the opportunity is worth $500, $5,000, or $500,000.

Prioritisation Tool: These rough estimates allow for quick comparison of many opportunities (e.g., 15-150 ideas) to identify the most valuable ones, or those with the highest value per unit of effort.

"S*** First Draft" Approach: Instead of asking stakeholders for a number on a blank sheet, provide them with a "s***** first draft" of your calculation. This makes it "so much easier for both technical and nontechnical stakeholders to basically critique something or provide me with an input I'm looking for if I give them the scaffolding."
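The prioritisation idea in this section can be sketched in a few lines of Python. This is only an illustration: the `Opportunity` class and its field names are hypothetical, the value ranges loosely echo the figures mentioned in the conversation, and the effort guesses (including the "reporting request" entry) are assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    name: str
    value_low: float    # rough lower bound on annual value, in dollars
    value_high: float   # rough upper bound on annual value, in dollars
    effort_days: float  # rough effort guess, in working days

    @property
    def value_mid(self) -> float:
        # Geometric mean: a sensible midpoint for ranges spanning orders of magnitude.
        return (self.value_low * self.value_high) ** 0.5

    @property
    def value_per_day(self) -> float:
        return self.value_mid / self.effort_days


# Illustrative assumptions only, not real figures.
opportunities = [
    Opportunity("churn model", 500_000, 1_000_000, 250),
    Opportunity("reporting request", 10_000, 20_000, 20),
    Opportunity("pipeline cost optimisation", 500, 500, 1),
]

by_value = sorted(opportunities, key=lambda o: o.value_mid, reverse=True)
by_value_per_day = sorted(opportunities, key=lambda o: o.value_per_day, reverse=True)

for o in by_value_per_day:
    print(f"{o.name}: ~${o.value_mid:,.0f} value, ~${o.value_per_day:,.0f} per day of effort")
```

Because the inputs are rough, the ranking matters more than the individual numbers. If two opportunities land within the same order of magnitude, that is a signal to refine the estimate rather than to trust the ordering.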

4. Collaborative Approach and Stakeholder Engagement

Quantifying ROI and building effective data products is not an isolated task for the data team; it requires deep collaboration with stakeholders.

Internal Consulting: Nick's experience in consulting and acting as an "internal consultant" for business units has taught him the value of asking "dumb questions" and drawing flowcharts with stakeholders to understand processes.

Building Trust: Constant engagement and collaboration build "better relationships and trust." Disappearing for months after initial requirements gathering, only to return with a "homework" product, often leads to building the "wrong thing" or stakeholder resistance.

Bringing Finance Along: Involving finance colleagues in the financial estimation part ensures that "when the business case shows up on their desk they don't go who is this what is this thing," but rather recognise it as something they "worked on together."

5. Understanding the "Why" and the "Trifecta" of Benefits

Data professionals should push beyond simple data requests to understand the underlying business problem and how data can contribute to key financial benefits.

Beyond Data Requests: When a stakeholder requests "weekly sales data," Nick's response is, "no that that's not what we're here. That that's just a solution you have in mind." The data team should act like a doctor, probing symptoms to understand the actual problem.

Focus on Business Outcomes: The goal is to understand "the business problem" and how data can influence "the business outcome."

The "Trifecta" of Value: Most benefits can be categorised into:

Cost Saving: Reducing operational expenses.

Revenue Improvement: Generating more sales or income.

Risk Reduction: Mitigating potential financial or operational risks.

Probing Questions: By asking "what action are you going to take?" and "what outcome do you think you're going to deliver?", data professionals can uncover the true need.

6. Metric Trees: Visualising Business Relationships and Sensitivity

Metric trees are presented as a valuable tool for understanding the interconnectedness of business inputs and outputs, enabling more informed decision-making.

Understanding Relationships: Metric trees help to "understand the relationship between the different inputs in my business and how these translate into outputs."

Business Sensitivity: They reveal "what is my business's sensitivity for those different things." For example, how a 10% increase in mailing list subscribers cascades through click-through rates, conversion rates, and profitability.

Simplicity for Stakeholders: While the underlying calculations might be complex, the visual representation and the "output that a business stakeholder sees has to be super simple so that they can also understand this whole concept of making data-driven decisions."

Avoiding Over-Engineering: Data professionals' tendency to seek extreme accuracy (e.g., "spend 3 months grabbing it, modeling it, getting the actual abandonment rate") can delay value. Metric trees support the "light touches" of the discovery/ideation phase.
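As a rough illustration of the sensitivity idea in this section, the sketch below models a tiny metric tree for the mailing list example. Everything in it is an assumption for illustration: the function names, the baseline numbers, and in particular the `cost_per_send` input, which is not mentioned in the episode and is added only so that different inputs show different sensitivities.

```python
def weekly_profit(subscribers: float, click_through_rate: float,
                  conversion_rate: float, profit_per_order: float,
                  cost_per_send: float) -> float:
    """Leaf inputs roll up the tree: subscribers -> clicks -> orders -> profit."""
    clicks = subscribers * click_through_rate
    orders = clicks * conversion_rate
    sending_cost = subscribers * cost_per_send
    return orders * profit_per_order - sending_cost


def sensitivity(inputs: dict, bump: float = 0.10) -> dict:
    """Change in weekly profit when each input is increased by `bump`, one at a time."""
    base = weekly_profit(**inputs)
    deltas = {}
    for name, value in inputs.items():
        bumped = dict(inputs)
        bumped[name] = value * (1 + bump)
        deltas[name] = weekly_profit(**bumped) - base
    return deltas


# Hypothetical baseline, chosen only to keep the arithmetic easy to follow.
baseline = {
    "subscribers": 100_000,
    "click_through_rate": 0.05,
    "conversion_rate": 0.02,
    "profit_per_order": 30.0,
    "cost_per_send": 0.01,
}

base_profit = weekly_profit(**baseline)
deltas = sensitivity(baseline)
for name, delta in sorted(deltas.items(), key=lambda kv: kv[1], reverse=True):
    print(f"+10% {name}: {delta:+,.0f} per week")
```

With these made-up inputs, a 10% lift in click-through rate is worth more than a 10% lift in subscribers, because extra subscribers also add sending cost. A stakeholder would only need to see the final ranked list, not the tree behind it.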

7. Measuring Success and the Measurement & Evaluation Workstream

Proving ROI requires a deliberate plan for measuring the impact of data products, ideally integrated from the project's start.

Pre-emptive Measurement: "It's so much easier to actually figure out the ROI of something if we've done this exercise that goes, what is the business outcome we're going to be influencing here?"

Dedicated Workstream: For significant projects, Nick would "insist that there needs to be a measurement and evaluation workstream as part of the project."

Defining Success Metrics: This workstream defines "what are the metrics for success." If the necessary data isn't readily available (e.g., in a metrics tree), "we need to set up some kind of measurement for this new thing."

Beyond Usage Metrics: Simply measuring dashboard usage (e.g., "opened and run") is often insufficient. Qualitative feedback (interviews, surveys) is "so much richer" than viewing time or open rates.

Linking to Action: True value comes from enabling "better decisions" and influencing specific actions. Dashboards should be integrated into workflows (e.g., "every Monday morning I open this dashboard... and I make one two three actions off the back of it").

Deliberate Data Collection: The "big data data lake approach" often fails because crucial data points are missing. Being deliberate about the business problem helps identify necessary data points, and if they don't exist, "we need to create those data points. It's not a nice to have, it's a must-have condition."

8. Balancing Foundational Work with Value Delivery

The discussion touches on the age-old tension between building robust data foundations and delivering immediate business value.

Avoid "Platform First" Pitfalls: "We spend two years doing a big migration promising the business that after the big migration we'll finally be able to deliver value and what do you know two years later co gets fired new co comes in first thing they do they want to rebuild the platform..." This is a common and detrimental cycle.

Bundle Value with Foundations: It's crucial to "bundle any kind of let's call it technical debt or platform investment or foundational investment together with something that's going to deliver value to the business and deliver in minimum increments of value."

Intentional Technical Debt: Technical debt isn't inherently bad; it's "borrowed from our future selves... in order to usually test something." There's "no point building something super robust and scalable if we don't know it's worth scaling in the first place."

9. Quantifying the "I" (Investment) and Prioritisation

Understanding the cost side of ROI is equally important, particularly for internal prioritisation.

Beyond Value: ROI requires both value and investment. The "I part is basically the cost." This includes incremental costs (additional hours, contractors, compute) rather than sunk fixed costs.

Internal Accountability: Data teams should know their operating costs and aim to "be delivering more than that, like a multiple." (e.g., "If we're costing 100K a week, then any given week, we should be delivering at least 110 if not 200K back to the business").

Using Financial Language for Prioritisation: When prioritising, use "financial numbers" to justify decisions to stakeholders. For example, "your thing is going to cost the business 200K, but based on our projections, it's only going to make us an extra 100K."

Data Product Manager's Role: While committees often prioritise based on "who has got the biggest voice," a data product manager should ideally make the final decision based on value, involving stakeholders in the process.
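The arithmetic behind this section is simple enough to write down. The sketch below uses the common definition of ROI as net gain divided by cost; the function names are hypothetical, and the figures are the ones quoted in the examples above.

```python
def roi(value: float, cost: float) -> float:
    """Return on investment as a ratio: (value - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (value - cost) / cost


def value_multiple(value: float, cost: float) -> float:
    """How many dollars of value come back per dollar of cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return value / cost


# The stakeholder request from the example: costs 200K, expected to return 100K.
request_roi = roi(value=100_000, cost=200_000)

# A team costing 100K a week, delivering 110K or 200K back to the business.
minimum_multiple = value_multiple(110_000, 100_000)
target_multiple = value_multiple(200_000, 100_000)

print(f"Request ROI: {request_roi:.0%}")
print(f"Team multiple: {minimum_multiple:.1f}x to {target_multiple:.1f}x")
```

A negative ROI, as in the 200K versus 100K request, is the kind of plain financial statement that makes a prioritisation decision easier to explain to a stakeholder.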

10. Data as a Value Driver, Not Just a Cost Centre

The speakers challenge the notion of data (and even other shared services like HR/IT) solely as cost centres.

Opportunity Cost: Treating departments as cost centres can make "a lot of things become invisible to the business," particularly opportunity costs (e.g., the cost of using inefficient old software).

Innovating and Unlocking Value: Data is a "more nascent profession" that helps "innovate," "improve the quality of decisions," "unlock new streams of revenue," and "build new products," especially with the rise of AI.

Avoiding Commoditisation: Nick doesn't want the data team to act like a cost centre because "then we're just going to default to doing bare minimum low value adding tasks that can be commoditized."

11. Qualities of a Good Data Product Manager

The episode concludes by identifying key aptitudes for successful data product managers.

Ownership: The most critical quality. Being "invested in the outcomes you're trying to enable," not just completing tasks. Good PMs "fill in the gaps" across technical, marketing, or financial analysis areas.

Curiosity: A "sense of curiosity to learn more about your users, about your business, about the technical underpinnings of your product, about what the data actually shows and means." This prevents becoming a mere "information sifter" and enables proactive, strategic impact.

Problem-First Mindset: "Be inquisitive around what the problem is understand the problem itself before you worry about the solution." This aligns with "product thinking" and "jobs to be done" frameworks.

Key Takeaways for Action:

Embrace Fermi Estimations: Don't strive for perfect accuracy upfront. Use quick, "back-of-the-envelope" calculations to get an order of magnitude for ROI, especially in the discovery and ideation phases.

Collaborate Extensively: Involve stakeholders (including finance) from the start. Share "s***** first drafts" of calculations and co-create understanding of processes and value.

Focus on Business Outcomes: Always ask "why" and link data requests to specific actions, measurable outcomes, and the "trifecta" of cost saving, revenue improvement, or risk reduction.

Implement Measurement & Evaluation: For any significant data product, build a measurement and evaluation workstream into the project plan, defining success metrics and how they will be tracked.

Balance Foundations with Value: Bundle foundational data work with initiatives that deliver tangible, incremental business value, avoiding lengthy "platform-first" projects.

Quantify Investment: Understand and communicate the cost of data initiatives alongside their potential value to inform prioritisation decisions.

Cultivate Ownership & Curiosity: For data professionals, especially those in product roles, these aptitudes are crucial for understanding complex problems and driving impactful solutions.

Transcript

Shane: Welcome to the Agile Data Podcast. I'm Shane Gibson.

Nick: And I'm Nick Zervoudis.

Shane: Hey, Nick. Thanks for coming on the show. Today we are going to talk about the thing that I never actually see, which is quantifying return on investment from data teams. Before we rip into that though, why don't you give the audience a bit of background about yourself.

Nick: Yeah. And it's great to be here. So I'm Nick. I've worked my whole career in data, but always in that kind of squishy role that's somewhere between the purely technical people and the people that haven't looked at an equation since about 25 years ago. At first, that was in consulting. First for a boutique consultancy, specialized in data science.

Then for Capgemini Invent. Then I made the leap to product management. I worked for PepsiCo and then for CK Delta, a subsidiary of a large conglomerate owning a lot of infrastructure businesses around the world, and now I'm on my own. I made the leap to be an independent consultant and trainer, specialized around data product management, because I see so many organizations still make the same really basic mistakes around how we should get value out of our data investments, which is also why I've named my company Value From Data and AI.

That's the short story about me. I'm someone that studied politics, but somehow has been in data his whole career. And even though I never considered myself technical, I've now gotten to the point where I'm often introduced at least by the business stakeholders as, oh, Nick's the technical guy.

He understands the data side. Except when I then talk to the data scientists. I don't think they think I'm a clueless person that doesn't understand anything they do, but that's how it feels compared to them. So here we are.

Shane: Yeah, I'm a big fan of Hitchhiker's Guide to the Galaxy, so I talk about people like that as Babel fish. Depending on which part of the organization you're talking to, you're interpreting for the other part that's not in the room normally. When you were in product management for those companies, was it physical products or digital products?

What part of the product management were you in?

Nick: Always data products. And here when I say data product I'm mish-mashing a few different types. You've got data platforms that are enabling other use cases. They're not necessarily delivering value end-to-end themselves. Data and analytics products, things like a dashboard or shipping out CSVs to end customers.

All the way to using machine learning and LLMs. And that's been the types of products I've managed my whole career, including when I was in consulting and was an undercover data PM and we didn't call it data PM, 'cause that title didn't exist and we didn't even know that product management was a thing.

And then the other thing that's worth calling out is that I've also dabbled in both internal facing products where your customers are colleagues of yours in different departments and external data products where we're literally selling data sets or selling dashboard access to customers that are interested in buying that data.

Shane: I think it's interesting that there's this move in the last couple of years to bring product management thinking, or data as a product, that way of working from the product domain into the data domain. And I think it's been great. I think we've seen some real changes to the way we work with data and the processes we use and everything we do. Still quite lacking. There's still a lot to learn and a lot to bring to the table.

And one of the things that I wanted to talk about is this idea of return on investment. Because when I'm working with organizations, it's hard enough to get them to the stage where they'll determine the potential action that will be taken from the data or information that's gonna be delivered, and what the outcome to the organization might be from that action. But it's very rare, if ever, that I see them make the next jump to actually quantify the return on investment for that outcome.

They may say, I need some data. I wanna understand all the customers that haven't bought something in the last six months. And you say, great. If I answer that question with data and information, what are you gonna do? Ah, we know that they're likely to leave, so we're gonna go and do a churn offer, send out some emails, give them a discount. Okay, if that churn campaign is successful, what's the outcome? Oh, we'll retain some customers, we'll increase our margin, get some more sales. And you go, great. If you had to quantify that as a number, what would it be? And then you get crickets.

Maybe it's because they don't actually have the data to input to help them make that decision and understand what that would be. But it just seems to stop there. So how have you dealt with that, this idea of return on investment? How do you actually approach it?

Nick: Yeah, it's a great question and I wanna answer it by going on a bit of a tangent first because I wanna comment on even what you said that, oh, in the last couple years, companies are waking up to the need for product thinking. And it's true, like a lot more companies are doing it. And then there's other companies or other data teams that have been doing it for the last 25 years.

So I feel like there's a very skewed distribution. In terms of which companies are doing things in that, value centric and customer centric way versus all the laggards in the same way that if you look at how many people are using maybe LLMs in production today, it's a very small number of companies around the world.

If you looked at it two years ago, it was an even smaller number. And we're in that stage where the laggards and even the people in the middle of the adoption curve are slowly getting into it. And I think that's relevant because for me, a lot of these, let's say, best practices, and now how do we figure out the ROI, it's not something that's super complicated or cutting edge or, oh, you need to have all your ducks in a row, all your data in a beautiful metrics tree before you can start asking those questions.

Because let's jump into your example, right? I thought you'd give me a harder case to start with, right? From what you've said, it sounds pretty straightforward.

Shane: Let's start with the simple ones.

If we see how you can apply the patterns to a simple scenario, then it gives you the sense of the pattern. And then from there we can go into the horrible edge cases where we know it's a little bit harder. But again, I still find people struggle with the simple cases.

Nick: So here's why. And I'm not just saying it to be smug or anything, but here's why I think the example you gave me is straightforward: the link between the data request, let's say, and the business outcome that the customer is looking to achieve is pretty direct. We wanna reduce our customer churn. That means that even roughly, I'm sure even if their BI is not very good and there's some data quality issues, they'll have some idea of, okay, how many customers are churning today? What is our average order value? What is our transaction frequency?

And therefore, based off those numbers, I know my customer lifetime value roughly. I know my number of churn customers roughly, and I can go, okay, what if I can reduce that by 10%? What is that worth? Is that worth $500? Is it $5,000? Is it $500,000? For me, that's the starting point.
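A back-of-the-envelope version of this churn calculation might look like the sketch below. The function names and every input number are hypothetical placeholders, and lifetime value is approximated as revenue only (order value times frequency times an assumed customer lifetime), ignoring margin and discounting.

```python
import math


def churn_opportunity(customers: float, annual_churn_rate: float,
                      avg_order_value: float, orders_per_year: float,
                      lifetime_years: float, relative_churn_reduction: float) -> float:
    """Rough annual value of retaining a share of the customers who would otherwise churn."""
    lifetime_value = avg_order_value * orders_per_year * lifetime_years
    churned_customers = customers * annual_churn_rate
    retained_customers = churned_customers * relative_churn_reduction
    return retained_customers * lifetime_value


def order_of_magnitude(amount: float) -> int:
    """Largest power of ten not exceeding the amount, e.g. 200,000 -> 100,000."""
    return 10 ** math.floor(math.log10(amount))


# Placeholder inputs: a first draft for stakeholders to correct.
estimate = churn_opportunity(
    customers=20_000,
    annual_churn_rate=0.25,
    avg_order_value=50.0,
    orders_per_year=4,
    lifetime_years=2,
    relative_churn_reduction=0.10,
)

print(f"Estimated value: ~${estimate:,.0f}")
print(f"Order of magnitude: ${order_of_magnitude(estimate):,}")
```

The point of writing it down is less the result than the visible assumptions: each argument is something a stakeholder can look at and say "no, that's not right."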

And I think the mistake we make in terms of these kind of ROI and value estimations as data professionals is we think it has to be something that we're gonna calculate with our Python notebook, that we need the exact numbers, that it needs to be precise, and it needs to have the same rigor as a lot of the other data work that we do, when actually a lot of the time all you need is a back of the envelope calculation.

I like using the term Fermi estimation, named after Enrico Fermi, who allegedly, just before the Trinity nuclear test, whipped out on a piece of paper his estimation of the TNT equivalent of the blast that they were about to witness. And he got it. I think he estimated 10,000 and it was 24,000. I've probably missed the exact numbers. The point is he got the order of magnitude right. He was just off by a factor of two. And for these ROI estimations, it's the same thing. I'm not looking to understand, is this gonna make us 600K or 650K or 700K?

It's more, okay, this opportunity, roughly speaking, is in the 500 K to 1 million, and this other one is in the 10 K to 20 K. And this optimization, one of my engineers wants to do, to bring down costs is gonna save us $500 a year. And so if I look at those three examples, it becomes very easy for me to go, okay, roughly speaking, which of these is more valuable?

Or maybe more valuable per unit of effort we're gonna spend. 'Cause maybe the optimization will take one day and the churn modeling thing will take, I don't know, one year. And so that is a very good starting point. And then the other thing I wanted to comment on, 'cause you say, oh, I ask my stakeholders, how do I go about doing this, or give us a number, and they're like, oh, I don't know. Do you know what makes it so much easier for them to give you a number? If you make that back of the envelope calculation super basic, you sketch it out on a slide, on an Excel sheet, whatever, and then you show it to them. You're sharing your screen or you show the piece of paper and then they go, oh no, that's not right because this is not our lifetime value.

Or, oh no, that's not right because whatever other assumption you've made that's wrong. I found that it's so much easier for both technical and non-technical stakeholders to basically critique something or provide me with an input I'm looking for. If I give them the scaffolding, I'm like, here's the shitty first draft.

Now you tell me what's wrong. Instead of, Hey, here's a blank sheet of paper, please fill it in. Even if the blank sheet of paper is like a template for them to fill in, it's still harder to get an answer from them compared to, here's my wrong assumptions. Now, correct them.

So much easier if I go, hey, here's the rough Fermi estimation I've done, where I've assumed your lifetime value is this order value times this order frequency, and that your churn rate is this much and that your growth is gonna be like that.

And then it becomes really easy for them to start giving me an answer. Because you said, oh, I asked them how do we quantify that, and they don't know. I think a lot of the time it's that when we ask that kind of question, especially of a non-data-savvy stakeholder, they might think that we're asking for something much bigger: oh, these data guys, they're here to do smart analytics and statistics stuff with their fancy PhDs and computer science degrees and whatnot. It's no, actually, some of these models are super simple. And for me, what I love about this approach is that it also means that it's so easy to do really quickly for a large number of opportunities, right?

I considered, let's say we've got 15 ideas or 15 requests or even 150. And if you can spend just a few seconds for each one to figure out, okay, roughly speaking, this is gonna save three hours a week from this employee who on average gets paid $50 an hour. So the value of this automation is this much, versus this is a cost saving that'll save this much.
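The automation example above reduces to one multiplication. In the sketch below the function name is hypothetical, and the 48 working weeks per year is an assumption rather than a figure from the conversation; the hours saved and hourly cost are the ones Nick mentions.

```python
def annual_time_saving_value(hours_saved_per_week: float, hourly_cost: float,
                             working_weeks_per_year: int = 48) -> float:
    """Rough yearly value of freeing up an employee's time."""
    return hours_saved_per_week * hourly_cost * working_weeks_per_year


# Three hours a week saved for an employee paid about $50 an hour.
automation_value = annual_time_saving_value(hours_saved_per_week=3, hourly_cost=50)

print(f"Automation is worth roughly ${automation_value:,.0f} a year")
```

Changing the number of working weeks moves the answer by a few percent, which does not matter at this stage: the opportunity is worth thousands of dollars a year, not hundreds or hundreds of thousands.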

And sometimes you might be missing one of the variables, right? Like in the churn model, it's okay, will this reduce churn rate by 5%, 10%, 1%? And you can just put a number that feels reasonable. And what I found is that usually that rough, 30-second estimation is almost exactly the same as the two-week version of that estimation, where we build the prototype churn model and we estimate what it's gonna be when it's in production and we test a bunch of different data sets.

And then all those times this happened in that order, I felt a bit silly when my manager may have been like, look, just plug in 10% uplift. And I was like, no, but where did you get that number from? What if it's not 10%? And then I come back two weeks later, 'cause I was insistent that this is not a case where we can just do a Fermi estimation and come back.

And actually it was pretty much the same number. I was like, damn, okay. Not necessarily wasted two weeks, but wasted two weeks.

Shane: I agree with that. I mean, one of the things I say is, when we work on the canvases, we always get asked how long it's gonna take to deliver that product. And yeah, typically we are doing this discovery really early. So any detailed estimation is waste because, let's face it, humans are really bad at estimating anyway. But even if we weren't, we're at the discovery stage, we're still ideating. Like you said, there could be a hundred ideas going, and spending all that time estimating how long each one of those is gonna take is waste at this level. We haven't prioritized that. We haven't said this is the top five, so let's not worry about it.

Let's just do a quick t-shirt sizing, pull a number out your bum, the number will actually be quite good 'cause we're good at guessing, and then move on. And we can do more detailed estimates if we have to at a later stage. So I'm with you on that. Do it light, do it quick and use it where the value is. But to do that is quite a skill. So if I think about it, you have to understand how data works, how metrics effectively work, and potentially metrics trees like you mentioned. And we have to understand the organizational processes. And we have to be able to combine both of those to be able to quickly articulate how lifetime value works or how a churn number will work and what the impact of that is.

And that is a skill, and it's not a skill that I see a lot. What's your thoughts? Do you see it a lot?

Nick: I think for sure being comfortable doing it. It takes some practice, like anything else, and it's gonna feel harder, even at least just mentally. But for me, it comes naturally because I've spent most of my career either in consulting or in data teams where we acted like internal consultants for our different business units.

So I'm very used to not really knowing very much about a domain, and asking a lot of dumb questions to my stakeholders about, Hey, how does this operation actually work? I draw the flow chart as we go. Maybe I'm even sharing my screen as I'm drawing out a process that a stakeholder's describing and they go, oh no, you forgot about this.

Or no, I forgot to mention about that step. Or actually, this part is more complicated. 'cause when A happens, we do B, and when C happens, we do D or whatever else. So the point I'm trying to make here is this is not an exercise that a data professional needs to do on their own . It is a collaborative exercise we need to do with our stakeholders for a couple of reasons.

Number one, because like I alluded to, we don't have the full picture. Even worse, we might think we have it based on the explanation we were given. And actually it turns out our stakeholders didn't mention a whole bunch of other details that were really important. But also, secondly, for me, it's about building better relationships and trust with our stakeholders. I've seen this happen so many times, in data or in tech more generally, where the tech team comes, they have some kind of discovery workshop and then they disappear for six months and then they show up with the thing they've built and they go, here you go. Please test it for us.

And one, what happens then is you often end up with having built the wrong thing. Because again, the picture you got during that initial requirements gathering exercise was incomplete. But also too, let's say you actually nailed it, right? You built exactly what was needed. You estimated the value potential perfectly.

Then that stakeholder turns around and goes, who are you? Who are you to tell me that I should be using this dashboard now? I've been doing this job the way I've been doing it with Excel for the last 20 years. So it's also about bringing our stakeholders with us on the journey, and that's just as true about the financial estimation parts. Maybe we need to bring our finance colleagues along the journey, or the client's finance colleagues, so that when the business case shows up on their desks, they don't go, who is this? What is this thing that these consultants or that the data team wants to do again?

They go, oh yeah, this is the thing that Shane and I worked on together when I gave them the numbers from the budget to plug into the business case. And then I learned what a, I don't know, random forest algorithm is, 'cause I was curious. And it becomes so much easier to work together. And the same is true on the user facing side.

You know, it's a slightly different topic, but for me it's actually conceptually the same thing. It's so much better to build together with our customers, be they external or internal, than to do something on our own and then show up and say, here's my homework. And then you find out that the homework is wrong or that they just don't like the font you've used, 'cause that's not the font they're used to.

Shane: I agree. I think that constant feedback helps us iterate and, figure out where we've heard wrong or where they forgot to tell us something. Or as you said, if you wait six months, something's gonna change anyway. That may be the most burning problem six months ago, but there's a real big chance that when we go back with the answer, six months later, they've moved on.

They've fixed it with Excel, and there's something else far more important, so it's no longer top of mind. So that idea of constant engagement and collaboration has so much value. And one of the things I think you were just talking about is this, back to old school, almost business process mapping, this idea of nodes and links and saying to the organization, how does the process work? Tell me something happens and then what's next? And let me draw a circle and say, this is the thing happening, and here's a line to the next thing happening. And from there we can start identifying those measures that can form that return on investment. And one of the things I think you talked about was looking at it from cost saving, revenue improvement. And there's always a risk one as well. That's the trifecta that you can use: are we gonna save money? Are we gonna make money? Are we gonna reduce risk?

That's the three that they always tend to come back to in my view. So I think, yeah, combining all those patterns together is really valuable.

Nick: A hundred percent. And for me, exactly that trifecta is the starting point of what is the benefit we're trying to influence at the end of the day, because when someone says, hey, I need a data set showing weekly sales, I'm like, no, that's not what we're here for. That's just a solution you have in mind.

You're asking the doctor to prescribe you specific medication, and then just like the doctor goes, okay, I understand, you went on WebMD and you've self-diagnosed that you have this, but let's just check your symptoms to be safe. For me it's the same thing, where we go, okay, let's understand the business problem.

And this is, for me, a super simple thing, but one that so many data professionals get wrong. They see any request that comes from stakeholders as an order, a command. And a lot of the time, one, it absolutely is not like that. And the person making the request is clueless about what they need and they're coming to you for help, but maybe they've also not learned the right way to do that, to say, hey, our sales are down.

I need to figure out why. I have a hypothesis that maybe it's because one of the regions is underperforming. That's why my request on the Jira ticket said, give me a sales breakdown by region. But then when they ask that, you can start asking more probing questions to figure out, okay, what is this hypothesis exactly?

How can we test it? Maybe actually there's an element of statistical know-how that this test is gonna require that a dashboard with a line chart is not gonna solve for the stakeholder. Maybe we can test alternative hypotheses in parallel, which, if you know how to write code and use different models, is maybe trivial, versus you make the dashboard, it spits out the line chart, the stakeholder looks at it, doesn't see an obvious pattern, or thinks this is gonna be too hard to make sense of, and they give up on it.

And you've ended up with dashboard number 952 that no one in the business is ever using.

Shane: There's a couple of things embedded in there. So one is, if we look at product teams and the software industry or software domain, often they talk about feature factories, where somebody comes and demands a feature and doesn't tell you what it's gonna be used for. Our equivalent is a data request. Here's a data request, give me the data. Don't ask me what I want it for. I still blame Jira for both, 'cause basically both of those problems are managed in stupid Jira ticketing systems. I think the other thing you mentioned is a doctor saying you've gone and Googled WebMD or whatever. I think we're hitting that new world, right? Where actually our stakeholders are gonna LLM the answer and come to us with a data request that's based on an AI bot telling them what the answer is. So it's gonna get worse before it gets better. But one thing you mentioned was this idea of metric trees and, I'm old, people mention now that I bring up ghosts of data past, and I remember a while ago we were doing balanced scorecards, cause and effect.

This idea of saying, if we have a bunch of things we measure and we understand the business processes, then effectively we can see some causation or relationships between those metrics, and where we see that those causations or those relationships have some value, we can infer some things and help us make better decisions. What's your view on that? Are metric trees another good thing, but just a reincarnation of things we've done before, or is it something different?

Nick: I guess 'cause one, I've not been around this game for as long, and two, I wouldn't call myself someone that's gone super deep into metrics trees and understands them deeply. So I'm probably gonna give you a very lay person's answer. But it's that, yeah, a lot of the core concepts are not new, 'cause for me, fundamentally it's just about saying, I need to understand the relationship between the different inputs in my business and how these translate into outputs, and to basically figure out what is my business's sensitivity to those different things. So for example, let's say I've got three potential initiatives I'm considering.

One is I want to grow my mailing list. The other is I wanna improve my checkout rate, and the other is I wanna optimize my pricing to make more profit per sale. If you just tell me those three things, unless if I have a deeply intuitive metrics tree in my head, I'm not, as the business owner, gonna automatically know which one of these is gonna make the biggest difference for my business. Whereas if I have a, forget about the fancy terms, if I just know that flow of, okay, how many people do we have in our mailing list? What is our click through rate every time we send an email? And then of that, how many people that land on our website actually go and convert?

And then what is the average profitability of our products? If I have this information somewhere, and if I know those relationships, then I can plug in different assumptions and go, okay, what if I were to increase my mailing list by 10%? How would that cause a cascade in all the other numbers?

Okay. If you get 10% more people in the mailing list, because you actually have a super low click through rate and most of your traffic comes from other sources, your revenue would only go up by 0.1%. Whereas if you were to improve checkout rate, because you're getting traffic from all these other sources but your basket abandonment rate is quite high, actually, that would translate into quite a bit more revenue.

Then lastly, if you know the average margin you're making per product, if you were able to increase prices for certain strategic items, actually that on its own would lead to more profit than the other two things combined times 10. Made up example. But for me it's more about the high school economics, not even high school, more like middle school mathematics that you might have to do.

When we did the few exercises around Fermi estimation with my students, as they were doing it, I was like, oh my God, this feels like I'm an elementary school teacher, 'cause I'm just asking these guys to literally, they're doing multiplication. There's nothing more to it. I've given them all the assumptions and all they have to do is pick which numbers to multiply where.

And that's how I want the data team to show it to their business stakeholders. Even if there's a lot of complexity behind how we calculate basket abandonment rate or margin or whatever else. At the end of the day, the output, especially the output that a business stakeholder sees, has to be super simple so that they can also understand this whole concept of making data driven decisions.

That's ultimately what we're trying to do. I think metrics trees are great because even just visually, they help us understand that relationship between different metrics instead of, here's our metrics report. It has multiple tabs and lots of charts, and you basically can't really figure out how to mentally connect the dots between all these things unless if you have that metrics tree in your head.
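The funnel Nick walks through (mailing list, click through rate, checkout rate, margin) can be sketched as a few multiplications. This is a minimal sketch, not anything shown in the episode: the `Funnel` fields and every number below are made-up assumptions, chosen so that email is a tiny share of traffic, and the price scenario assumes volume does not change when prices go up.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Funnel:
    """Hypothetical inputs to a tiny metrics tree (all values are assumptions)."""
    mailing_list: float        # people on the mailing list
    click_through_rate: float  # share of the list that clicks through per send
    other_visits: float        # visits arriving from all other sources
    checkout_rate: float       # share of visits that end in an order
    price: float               # average selling price per order
    unit_cost: float           # average cost per order


def profit(f: Funnel) -> float:
    """Multiply down the tree: visits -> orders -> profit."""
    visits = f.mailing_list * f.click_through_rate + f.other_visits
    orders = visits * f.checkout_rate
    return orders * (f.price - f.unit_cost)


# Made-up baseline: only 100 of 10,000 visits come from email.
base = Funnel(mailing_list=10_000, click_through_rate=0.01,
              other_visits=9_900, checkout_rate=0.02,
              price=50.0, unit_cost=40.0)

# Change one input at a time by 10% and see how the output moves.
scenarios = {
    "mailing list +10%": replace(base, mailing_list=base.mailing_list * 1.10),
    "checkout rate +10%": replace(base, checkout_rate=base.checkout_rate * 1.10),
    "price +10%": replace(base, price=base.price * 1.10),
}

uplifts = {name: (profit(s) / profit(base) - 1) * 100
           for name, s in scenarios.items()}

for name, pct in uplifts.items():
    print(f"{name}: {pct:+.1f}% profit")
```

With these assumed numbers the mailing list scenario barely moves profit, the checkout scenario moves it in proportion, and the price scenario moves it most, which is the kind of sensitivity comparison described above.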

Shane: What I can see is, data people, we love the detail, so as soon as you say cart abandonment rate, I can imagine a data person going, we can't just use an estimate for that, 'cause we know the data's there, so we'll just go and spend three months grabbing it, modeling it, getting the actual abandonment rate.

And then that'll make our Fermi model so much more accurate when we try and determine the ROI. And I can see that logic, but then it's about time to market. It's about, again, we don't know that is the most important information or data product to build next. We're trying to use this technique in the discovery, ideation phase, not further down, and therefore light touches have value. But do you find that data people naturally want to go and grab the data, do a whole lot of work, and make it as accurate as possible?

Nick: For sure. And I fall into that trap too sometimes, because I've been among data people for long enough that now I do the same. But look, at the end of the day, there's gonna be some things where actually knowing the real value is quite important. Either because your estimate might be super off or because actually if we're doing these small optimization things where we're just trying to increase one thing by 1%, 'cause that 1% is still worth a big number, actually being off by a couple percentage points could make the difference between something being a super profitable investment and something setting money on fire.

But for me the key thing here about both product thinking in data and building data products instead of data projects, and also metrics trees, which in a way are just a type of, and collection of, data products, is that we don't go, oh, there's a new project we're doing now, the team needs to go and figure out the basket abandonment rate and figure out all these other metrics, 'cause we're trying to do this one-off kind of project. And even if it's not a one-off project, but it's a specific use case, we shouldn't have to build all these data models, and then you have 10,000 versions of what lifetime value looks like in your business. You go, we will invest a lot of our time as a data team, exactly because it is complicated and a few of these things will take many weeks to do, into building out these key metrics.

And so we have our collection of metrics that form into a tree, and basically you then go, anytime I want to test a new hypothesis, I wanna explore something, I can rely on these core, standard, reusable data assets, instead of going, everything is a new project and everything is a new dbt model and everything is a new Tableau dashboard and whatever else. For me, that's where the power of it lies. It's a slightly separate topic to the ROI estimation part. I still think a lot of the time you're better off starting with your Fermi estimates, and then of course if you have the real data to plug into one of those assumptions, great.

But if you don't, don't wait until you do, unless it's a really high-stakes investment. If you are gonna be committing to a seven-figure investment in your business, then yeah, maybe back of the envelope isn't good enough to get fully started. But if, as you said earlier, we're just trying to figure out what are the top four opportunities right now out of the 15 we have, then once we've zeroed in on the top four based on our rough-and-ready Fermi estimates, we can do more homework.

Then we can send the data team to go and do some more calculations if needed.
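That shortlisting step can be sketched as a simple sort. This is only an illustration under stated assumptions: the `Opportunity` fields, the backlog names and all the dollar figures are hypothetical, and ranking by rough value minus rough build cost is one possible rule, not one prescribed in the conversation.

```python
from typing import NamedTuple


class Opportunity(NamedTuple):
    """A backlog item with back-of-the-envelope numbers (all hypothetical)."""
    name: str
    rough_annual_value: float  # rough estimate of yearly benefit
    rough_build_cost: float    # rough estimate of cost to build


def shortlist(backlog: list[Opportunity], top_n: int = 4) -> list[Opportunity]:
    """Rank by rough net value and keep the top few for deeper homework."""
    ranked = sorted(backlog,
                    key=lambda o: o.rough_annual_value - o.rough_build_cost,
                    reverse=True)
    return ranked[:top_n]


# Hypothetical backlog; in practice there might be 15 of these.
backlog = [
    Opportunity("churn alerts", 400_000, 100_000),
    Opportunity("pricing optimisation", 900_000, 250_000),
    Opportunity("weekly sales dataset", 30_000, 20_000),
    Opportunity("checkout funnel analysis", 360_000, 50_000),
    Opportunity("mailing list growth", 20_000, 40_000),
    Opportunity("fulfilment cost per SKU", 500_000, 150_000),
]

top_four = shortlist(backlog, top_n=4)
for o in top_four:
    print(f"{o.name}: net {o.rough_annual_value - o.rough_build_cost:,.0f}")
```

Only the items that survive this rough cut would then get the more accurate, data-backed calculation.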

Shane: I can actually imagine that as you do the Fermi calculations, you're gonna find there are certain inputs that you use on a regular basis. Especially if you are working in a specific domain, so let's say the domain boundaries are all based on organizational hierarchy or maybe business process.

So we have a sales organization that deals with the sales side of things, and they're the highest value part of the organization that we've been asked to help right now. Because we are bound within that domain, I can imagine that the inputs we use for the estimate, that ROI calculation, are sometimes gonna be reusable. We're gonna go, ah, maybe it's lifetime value. Maybe it's the funnel and how many people we're converting from suspect to prospect. There's gonna be this thing where we're constantly using that as part of our calculation. So we can say, because we're using it so often, it seems to have value.

Therefore doing a little bit more work on what the actual number looks like is gonna be really useful for us going forward. So again, we can use it as a way of figuring out what's the valuable thing to build effectively.

Nick: For sure, and I wanna make two comments about this. First is, it's not like a lot of these metrics we are calculating specifically to work out ROI. These are also gonna be the metrics that are part of the data product or related to adjacent data products. If, for example, we don't know our cost of fulfillment, but we're doing a use case that relates to reducing our cost of fulfillment, getting to the precise number of what is our fulfillment cost is also part of the business case. So we start with our rough estimate, 'cause we have an average provided by finance, and then when we wanna break it down by product, that's part of the optimization project. That means that next time round we actually have a data product that is our cost of fulfillment per SKU, as an example.

Shane: The other thing I can imagine people wanting to do then is go into the detail to say if this is the return on investment that we've estimated for that information product actually we should measure it at the end to see whether we actually did deliver it. And that makes sense. But then we come to a whole lot of complexity because there's a whole lot of other factors that will influence whether we are getting a reduction in churn or whether we are, reducing the cost to serve or the cost to produce. And it's really hard to isolate those other factors, to say this one thing we did. Now my view is I don't care. If we do some effort and it looks like it's moving the lever, as long as we keep doing effort and the lever keeps moving, we are always getting to a better place. But how do you find it? Do you find that some organizations or some teams want to then prove the ROI six months down the track?

Nick: For sure. And I agree that sometimes the rigor needs to be more than a simple pre-post analysis, especially when you've got dozens of confounding factors or if you're at a place where actually you're carrying out many experiments in parallel. For me, that's one of those things.

It's, let's cross that bridge when we get to it. We don't need to assume that by default we need to carry out randomized controlled trials and super rigorous A/B tests in order to figure out what's moving the needle. But then the other thing I wanna call out is that everything you've just mentioned is something that typically does not get talked about at the start of one of these initiatives.

And so the data team, 'cause they've received a request in Jira, they go and fulfill it. There's probably a million back and forths because the request initially that was made, actually, that's not exactly what the stakeholder wanted, but you built it. Now that they've seen it, they realize they wanted something different.

Anyway, eventually you get to your done state and then you go, oh man, it would be great to know the ROI of this thing we've built for this stakeholder. And then you realize that it's unclear what the definition of success is. You've not done that exercise to go, okay, what is the exact decision that's gonna be made by which person and how will we know that they're making this decision because of this data?

And for me it's a little bit like how I'd have classmates in high school who would write their essay first and then go look for citations to prove it. And you know what? In high school that worked. But a little bit later down the line, it can't, 'cause you need to build up the essay, so to speak, off the back of citations.

Unless if you're just making things up. And similarly, I find it's so much easier to actually figure out the ROI of something, if we've done this exercise that goes, what is the business outcome we're gonna be influencing here? Because when you ask that question, you very often realize that, okay, the thing we thought we needed to build actually is a little bit different, or how do we make sure that we embed this decision support information into the decision making process of a stakeholder? How do we then measure or have some even approximate way of knowing when someone took a decision using that data that we gave them, as opposed to they just went with their gut, same as they have been, but they also opened the dashboard just to see what's there,

Shane: I was gonna say, our best measure at the moment is that somebody actually used it, that the dashboard's not sitting there being unused for six months. The best we typically have is it got used lots. Not that it was used for anything, but it was opened and run.

Nick: Oh yeah. And for me, even though I think it's useful, especially it's useful to know that something has not been opened, actually the usefulness of that telemetry drops off very rapidly after that. Because you very often have situations where someone is opening the dashboards, but then you don't know exactly what they're doing with it.

If it's even useful for them. And especially if there's some kind of top-down pressure of, guys, we spent all this money on this new set of MI, you all need to be accessing it, or it'll affect your performance score. Then easily someone can just open the thing, not even have their eyeballs on it, and then close it a little bit later. Or they open it and maybe, actually, viewing time has increased because your dashboard is more complicated and the user's not able to actually get things out of it.

And for me, the reason I think it's a mistake is because in most businesses, the number of users you have is just not that big, which means you don't need to rely on quantitative cold-hearted information when you can just ask people, you can have one-to-one catchups.

You can interview people, you can even have a survey form where you ask a few open-ended questions like, Hey, what do you like about it? What do you not like about it? Do you have any ideas for how we can improve it? And use that qualitative data because it's so much richer than, okay, average viewing time has gone up by three seconds.

What does that mean? How does it relate to the business? Also, really importantly, 'cause I feel like whenever people are telling me they're struggling to connect the work they're doing to business outcomes and to ROI, it tends to be BI, because the way a lot of BI is built and structured in most organizations is in a way that I just think is wrong, because it's not actually helping enable better decisions. It's just, hey, let's build a dashboard about this. It's not, okay, as part of my workflow as an operations manager, we're gonna change it, so actually now every Monday morning I open this dashboard, which has these specific KPIs, and I make 1, 2, 3 actions off the back of it every week.

That we've built instrumentation to measure how those actions play out, and so we can have an idea of how those actions improve in quality over time. Most of the time you don't have that kind of mapping. You don't have any measurement of either the action itself or the thing the action is looking to influence.

I'm taking an action to improve our copy so that next week's newsletter gets a higher click through rate, which means then, yeah, it's literally impossible to measure retrospectively the impact of that dashboard that you built that someone maybe looks at. But it's very unclear what the benefit is.

It's either unclear because you as the data professional just don't know exactly what they do with it and it's a black box, or it's unclear 'cause actually there is no real benefit. Or at least it's super, super fluffy.

Shane: What would you recommend to an organization, let's say the organization's got to the stage where the data team are engaging with stakeholders. They're thinking in terms of products. They're asking those questions of, with this data, what action are you gonna take?

And if that action is successful, what outcome do you think you're gonna deliver? And then they've led them and done some whiteboard numbers to say, okay, let's quantify this in a really rough way, we think the value to the organization's this. And then they go and build it, and they build it quickly with lots of feedback, and it's actually what was needed. How do they deal with that last bit? How do they deal with now looping back and saying, we actually want to know whether it was used, whether it helped that action, whether it helped deliver that outcome?

Nick: For me, generally speaking, unless we're talking about a super small, trivial request that we're just gonna turn around and it's not a big project, I would basically insist that there needs to be a measurement and evaluation work stream as part of the project. To use the analogy I used earlier, we need to have a kind of research and citation work stream happening in parallel as part of the project.

In the same way that there's gonna be a sort of scoping phase, design, we're gonna get approval for the wireframe, then in parallel we're building the data model at the same time as building the dashboard, at some point in that parallel stage we're also defining what are the metrics for success.

And if those metrics are not based on numbers that are readily available, because we've got our metrics tree or because it's part of another report, an MI, a dashboard, then we go, okay, now we need to set up some kind of measurement for this new thing that is gonna be part of the success metric.

And then as part of that, we're probably gonna build a second dashboard that's gonna be measuring that effectiveness. I'll give you an example from a project we were doing a few years ago with a container terminal. Really complex operation where actually anything you try to change is gonna have knock-on consequences on many other parts of the operation.

But after doing a combination of qualitative and quantitative analysis, we're like, okay, one of the things that we can dramatically improve for the overall productivity of the port and the north star metric of the port, which is the productivity of the big cranes loading and unloading vessels as they come in, is actually if we can optimize the way we allocate drivers in the trucks that can have a big impact on the overall productivity of the port.

So in that case, there were some metrics that we had already, like the big crane productivity, that was a metric that was firmly established in the organization 'cause they reported on it constantly. Then there were some truck driver productivity related metrics that basically didn't exist in the existing MI suite.

So it was like, okay, if we're gonna build this product to optimize driver allocations, then we also need to add something to the management information that we produce so that we can be measuring the effectiveness of this model, so we can see is it actually delivering the value we were expecting it to deliver?

And for me this points to a really important problem that a lot of organizations face, which is it's not just that, oh, if you have more data that's better and you can make more decisions, you need to have the right data. And it's why I really hate the kind of big data, data lake approach of, oh, let's just dump all the data we have.

Then the data scientists or the data miners will find value out of it. 'cause then usually what happens is when you start a project and you look at what's in the data lake, you realize that actually the super important column for the model you're trying to build, actually it doesn't make its way into the data lake.

Or it never existed in the source system in the first place. And so when we're deliberate about the business problem we're trying to solve, we can also be deliberate about what are the data points we will need and do they exist? And if they don't exist as part of this project, we need to create those data points.

It's not a nice to have it's a must have condition for the success of the project.

Shane: Then I'm gonna jump naturally to the next step, which is, okay, so let's say that it's optimized for the big crane and therefore we are going to affect the optimization or the workflow of the drivers. I'm naturally then gonna want to build out that metric first so that I can benchmark the current state. So then when we make the changes, we can then see, have we had a positive or negative impact? And then I can see how that would then potentially delay the production of the information product that has value, because now I'm spending time getting ready for the benchmarking data before I then go and build the thing that actually is gonna make the change. Is that a natural trap to fall into, or is that something that, if you can, you should do?
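The benchmark-then-compare idea Shane describes is the simple pre-post analysis mentioned earlier, and it can be sketched in a few lines. This is a rough illustration only: the metric name and the weekly readings are hypothetical, and a plain before/after comparison like this does not control for the confounding factors discussed above.

```python
from statistics import mean


def pre_post_change_pct(baseline: list[float], after: list[float]) -> float:
    """Percentage change in the average of a metric, before vs after a change."""
    before_avg = mean(baseline)
    after_avg = mean(after)
    return (after_avg - before_avg) / before_avg * 100


# Hypothetical weekly readings of a driver productivity metric
# (for example, container moves per driver hour). Made-up numbers.
baseline_weeks = [20, 22, 18, 20]  # collected before the new allocation model
after_weeks = [22, 23, 21, 22]     # collected after it went live

change = pre_post_change_pct(baseline_weeks, after_weeks)
print(f"Average productivity changed by {change:+.1f}%")
```

The comparison only works if the baseline readings exist, which is why collecting them early matters.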

Nick: Obviously we're talking about it hypothetically, and it's gonna be a little bit different in each organization, but even in this hypothetical, the two objections I'd have to that criticism would be, one: okay, if this is gonna be a project that'll last several weeks, let's just sequence it.

So that we start collecting this data from the very start of the project. We make it the first thing we do, not the last thing we do. Actually, we've done this a bunch of times where we said, you know what? You guys aren't collecting this data 'cause it gets deleted at the end of the day. But you know what, if we start collecting it now, by the time we will need to use it for the model we're building, we will have just enough data.

So that's one thing. And in some cases maybe this will mean, yeah, the project will take longer, but if that's what's needed, then that's what's needed. It's the kind of objection that, if it was, let's say, a technical objection to do with the core of what's being built, it would be so much easier mentally to say, look, this is a must have, right?

I cannot build a model without this data. Whereas it's very tempting to say, okay, fine, I guess we can build the model without also collecting success metrics. Yeah, maybe you can get away with it once or twice. But actually, if you're systematically doing that, yeah, you're systematically not gonna know the benefit of what you're doing.

Which for me, it's so weird, because the whole point of having a data team is to make an organization more data-driven. And what being data-driven means to me is basically being evidence-driven. We're not making decisions because of just gut feel or copying our competition blindly, but we're using evidence.

But then we are so bad at basically following our own advice and using data to inform what on earth should we be doing and is it worth doing? So that's the kind of first objection. The second objection is you probably should be collecting that data anyway, same as what we were talking about with metrics trees, the things that are gonna be your success factors.

Not always, sometimes they will be super specific to a project, but other times they're gonna be core metrics that you should start tracking anyway, because there's gonna be other initiatives later down the line that we should be doing. But I would rather, let's say, collect that metric about the truck driver productivity as part of this project that is a specific optimization we're trying to do, instead of doing the other thing data teams often say, which is, oh, we need to invest in data foundations. Oh, let's build out all the metrics first so that then we can track success later. For me that's bad for two reasons. Number one, maybe you will track the right success metrics or the right metrics, or maybe when you get to your new use case, you realize this wasn't the right thing anyway.

But also, secondly, you are proposing building something that delivers no inherent value on its own. Which is all too common. All too common that we spend two years doing a big migration promising the business that after the big migration we'll finally be able to deliver value. And what do you know, two years later, CDO gets fired.

New CDO comes in first thing they do, they wanna rebuild the platform and promise again that the results will come two years later. So for me, it's also how can we bundle any kind of, let's call it technical debt or platform investment or foundational investment together with something that's gonna deliver value to the business,

and delivering minimum increments of value. Not, oh, let's do the platform stuff first and the value making stuff later when we've left the company and it's someone else's problem.

Shane: And got the new tools on our CV and got promoted in the next job, 'cause that two years was really great fun. We got paid a fortune and got a better job. We used to blame Waterfall for big requirements up front and foundational builds.

And then lots of organizations went down the agile path and we started seeing six-month sprint zeros, where again, it was just pure foundational build. No engagement with stakeholders, no value. We do have to balance that against ad hoc behavior where we don't do any foundational work. So there is that horrible balance of building the airplane while you're flying it. That is the balance. But it is a balance, it's about the context.

Nick: I agree. I'm not advocating for basically being the clueless business person that does not understand what the engineers are on about when they talk about technical debt and just wants, ship me these features 'cause they're gonna make us money. But we need to be intentional about it.

Any piece of technical debt for me is not inherently bad. It's debt I have borrowed from our future selves, from our future data team. In order to usually test something, there's no point building something super robust and scalable if we don't know it's worth scaling in the first place.

But also maybe it's because tactically actually it's gonna really help if we can use this to provide some results for the business before the end of the quarter, either because there's a financial upside or just because it's gonna help us get more trust with them so then they can spend more time with us, involve us more early on in decisions around what we should do and all that good stuff.

Shane: One of the things that naturally people want to do is take those outcomes and those ROI statements and link them back to the corporate strategy. There's a strategy PowerPoint somewhere with four boxes, or there's the top 11, 15, 25 initiatives that are the most important in the organization. And it's easy to gamify, it's really easy to say these metrics support the strategy. It's actually damn hard to find metrics that don't support your strategy in some way using creative language. Do you ever worry about it? Do you ever bother saying that these ROI statements, these metrics line up with the corporate strategy and the initiatives, or do you just ignore it?

Nick: Because I'm not advocating for just, oh, we should link the initiatives we're doing to some other broader business initiative called, I don't know, be loved by our customers or get trusted by our partners, whatever. For me, that's fluff.

Sometimes it's very useful to link it maybe so it falls under the right OKR or that it gets the attention of the right people. But at the end of the day, most things, you should be able to express their value in financial terms, it's either money in the bank, we have generated incremental revenue, or we have saved costs that definitely would've accrued otherwise.

In other cases, it's a little bit fluffier, but still has a financial number next to it. If we build an AI chatbot that helps our employees get answers to questions about HR policies faster, it's not necessarily that, okay, we've saved 10 hours a week from our HR employee and that's money in the bank, because we're still paying that HR employee full time.

Now, if you have a massive organization, you might actually go, no, we will downsize that team. That will be money in the bank. But in other cases, you can just quantify the productivity saving. You go, we have saved the legal department 15 hours a week times 20 employees paid a hundred dollars an hour, and you go, okay, that's not money in the bank, but that is the value of the productivity that the legal team can now redeploy elsewhere. And for me, it's not as good as money in the bank, but sometimes, necessarily, that's where our estimate stops. But that estimation is still so much better than just going, we are making the company more productive and AI ready.
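To make the legal department example concrete, here is a minimal sketch of that productivity estimate. It assumes the 15 hours a week is saved per employee (the conversation doesn't say whether it is per person or in total), and the function name is hypothetical.

```python
def weekly_productivity_value(hours_saved_per_person, people, hourly_rate):
    """Value of time freed up each week. This is redeployable capacity,
    not money in the bank."""
    return hours_saved_per_person * people * hourly_rate


# Figures from the example: 15 hours a week, 20 employees, $100 an hour.
# Assumption: the 15 hours is per employee.
legal_value = weekly_productivity_value(15, 20, 100)
print(f"Productivity value: ${legal_value:,} per week")
```

If the 15 hours were a total across the whole department, drop the headcount multiplier and the estimate falls to $1,500 a week, which is why it is worth stating the assumption next to the number.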

Shane: Then do you ever bring in the cost side of the data team? One of the things people often say to me is, the data team are trying to do the right thing, but nobody from the organization will engage. The request gets handed across; they want somebody from that part of the organization to work with them as a subject matter expert, whatever. And I often say to them, have you worked out what the data team costs every week? And have you gone back and said, hey, this is four weeks' worth of build. That's a hundred thousand dollars of time. Is it worth a hundred thousand dollars to the organization? And often data teams don't do that; they don't quantify their own costs. Do you do that as part of understanding the ROI, or do you not worry about it as much?

Nick: Of course, 'cause ROI is not just what is the value, it's return on the investment. So the I part is basically the cost. And here it can get complicated, because what are the fixed costs that we're just assuming are sunk in? Very basic example: will I consider the Microsoft Teams license of each of my data scientists as part of the investment?

Or do I just go, look, this is a fixed cost the business has made and we're talking about incremental return on investment? Meaning, how many additional hours of a data scientist's time are we gonna spend? Or maybe, if we need to hire contractors, it's about the cost of those contractors, or it's about the incremental compute that this will cause, because we're now gonna have to pay Databricks a bunch more money because we're building all these new models. So that takes some, I think, finessing.

And basically, it requires you to understand, I don't wanna say what you can get away with in your organization, but more, how does your organization think? Are there some costs that ultimately are subsumed in some centralized budget? If so, one, you'd be doing yourself a disservice if you included those, because the rest of the business isn't.

And two, it would maybe skew the picture of whether this is a worthwhile activity. For me, I also think the thing that doesn't really work as effectively is to just turn around to the business and go, this request is gonna cost a hundred K, 'cause usually it's not like they will pay for that.

If you have some kind of cross-charge model, or you're like, look, we would have to hire new people to support this new initiative, and so we need to work together to make the business case for finance to give us this additional budget, then you can definitely do it and it makes sense, and it potentially will come out of the marketing team's budget, not out of yours. Again, depends how your organization does budgeting. But for me, I think of it more in terms of: if I'm running the data team and I know that cost, I know how much it's costing us to keep the lights on every week.

First of all, I wanna make sure that at the very least we are delivering more than that, like a multiple. If we're costing a hundred K a week, then in any given week we should be delivering at least 110, if not 200 K, back to the business. And we should be reporting that upwards on a regular basis, 'cause it's sometimes also easy to forget.
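As a rough illustration of that weekly check, the sketch below divides value delivered by the team's running cost. The 100 K, 110 K and 200 K figures come from the conversation; the function name and the idea of reporting it as a single ratio are assumptions.

```python
def value_multiple(value_delivered, team_cost):
    """How many dollars of value the team returns per dollar it costs."""
    if team_cost <= 0:
        raise ValueError("team_cost must be positive")
    return value_delivered / team_cost


weekly_cost = 100_000  # cost of keeping the lights on for one week

# The floor and the stretch target mentioned above.
for delivered in (110_000, 200_000):
    multiple = value_multiple(delivered, weekly_cost)
    print(f"Delivered ${delivered:,} against ${weekly_cost:,}: {multiple:.1f}x")
```

Anything below 1.0x means the team cost more that week than it returned, which is the situation the regular upward reporting is meant to surface.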

And if it's not about that, if it's not about the kind of holistic picture of what the data team is doing, then at the very least use the value part, even without the cost part, to figure out: okay, my team could work on any of these three things for the next two months, which one is worth doing?

And then use that language not just to decide internally amongst ourselves which use case we're gonna push for, but also, if that involves telling two stakeholders that their baby's gonna get deprioritized because we need to work on the other use case, to point them to those financial numbers.

Be like, look, your thing is gonna cost the business 200 K, but based on our projections, it's only gonna make us an extra hundred K. And the way I usually frame it is, I go, look, if you can find a way to get acceptance for that kind of thing, maybe we can do it. But it's really hard for me to either deprioritize someone else's 10x ROI initiative that they've put in front of us, or just to justify it to my boss that, hey, we're gonna be costing the business money because Bob really wants us to build churn prediction model version 57, 'cause version 56 wasn't good enough.
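The comparison in that conversation can be sketched as a simple ranking. Bob's 200 K cost and 100 K projected return are from the example; the competing initiative's figures are hypothetical, chosen so that its projected value is ten times its cost, which is one reading of "10x ROI". The class and field names are also assumptions.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    cost: float
    projected_value: float

    @property
    def net_value(self):
        # Positive means the business ends up ahead.
        return self.projected_value - self.cost

    @property
    def return_multiple(self):
        # Projected value per dollar spent.
        return self.projected_value / self.cost


initiatives = [
    Initiative("Churn prediction model v57", 200_000, 100_000),
    # Hypothetical competing request with value at 10x its cost.
    Initiative("Competing initiative", 200_000, 2_000_000),
]

ranked = sorted(initiatives, key=lambda i: i.return_multiple, reverse=True)
for item in ranked:
    print(f"{item.name}: {item.return_multiple:.1f}x, net ${item.net_value:,.0f}")
```

The ranking is only as good as the projections behind it, so it works best as a shared starting point for the stakeholder conversation rather than as the final word.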

Shane: That comes back to that really interesting question of who does the prioritization. Because often in an organization it's a committee, it's a group of stakeholders; whoever's got the biggest voice, or whoever's the HiPPO, gets to choose, and it gets given to the data team. Whereas if we have this idea of a data product manager, or data product leader, or whatever you wanna call them, then often they are the ones that should be making the final decision. They should be saying, okay, based on the value, these are the ones that are the most valuable to the organization.

So this is the one we're gonna build. But often data teams don't work that way. Again, it comes back to this: they get given a data request, and somebody outside the team effectively prioritizes and tells them what the next most valuable thing is, but that person's not held to account for the value.

Nick: I think my answer as to which model works best also depends on the organization and the politics: who controls the budgets, the power, what happens when. But in general, I'm against either extreme. I'm definitely against "the business has decided the priorities and they just chuck them to the data team," who have data product owners whose job is actually to just decide maybe the exact sequence of delivering these things.

And there's no product work being done there. We can rant about SAFe and scaled agile another day. But then secondly, I think it's also not good, especially if it's framed as the data PM being the final decision maker. For me it's fine if maybe me, as the data PM, I've got all these requests from different departments and I do that prioritization, but then I wanna involve my stakeholders in that.

And here it gets a bit murky. It's not, I'm gonna give everyone a vote. I'll be like, look guys, we've got these five initiatives and they all have a different number of zeros attached to them in terms of what they're gonna bring to the business. And so maybe Bob says, look, I know mine is only a six-digit opportunity compared to everyone else's seven-digit opportunity, but actually, for whatever, let's say, strategic reason, or because it might cause bigger problems later down the line, it's more important. And there it's gonna be a balance; sometimes we're gonna be more consensus driven, depending on how the organization works.

And other times I go, look, Bob, if this is so important, basically you need to escalate upwards, because my hands are tied. I cannot put your hundred K thing in front of someone else's 9 million opportunity, assuming they're gonna take a similar amount of time anyway.

Shane: I think we see that in the software product domain. We see that product managers often are reliant on influence to get the right things built, and that's effectively their job. I find it interesting that if I look at other shared-service type parts of an organization, so if I look at HR and I look at finance, they are a cost, and yet they very rarely have to do ROI statements for the value they deliver. And for some reason data does. And my hypothesis is that it's because data typically came out of the IT side of the org, and that always seems to somehow have to justify what it's spending its time on more than those shared-service organizations.

What's your view on that? Is that what you see, or do you not see that?

Nick: Great question. The kind of thing that comes to mind first is actually that the fact that we see, for example, HR as a cost center, and often IT also as a cost center, especially the more basic IT functions, is a problem. It's a problem because it means that a lot of things become invisible to the business. Take the fact that we see our expense tracking software as just a fixed cost; it's a cost center, it's the cost of doing business. And that's why IT has selected the most competitive offer in terms of which horrible expense software we're gonna use. And what doesn't happen then, because IT doesn't have to prove the value or the return of using that software, is any explanation of opportunity cost. We're not looking at, for example: okay, because we're using our shitty home-built software from 20 years ago, which our employees need Internet Explorer to access to submit their expenses, instead of just giving Concur some money and using that, or something even newer than Concur, we waste on average five hours a month for the 20% of our employees that do lots of business travel.

And when we add up the total cost of that, actually it's a multiple of the cost of using the fancier software-as-a-service expense software that IT doesn't wanna use. So my first challenge is actually, I think a lot of teams and departments are seen as cost centers when actually that's not right either.
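Here is a back-of-the-envelope version of that opportunity cost comparison. Only the five hours a month and the 20% of employees come from the conversation; the headcount, loaded hourly cost and per-employee SaaS fee are made-up assumptions purely to show the shape of the calculation.

```python
def monthly_time_cost(headcount, share_affected, hours_lost_each, hourly_cost):
    """Monthly cost of time lost by the affected share of employees."""
    affected = round(headcount * share_affected)
    return affected * hours_lost_each * hourly_cost


# Assumptions for illustration only, not from the conversation.
HEADCOUNT = 1_000
HOURLY_COST = 50            # loaded cost of an employee hour, in dollars
SAAS_FEE_PER_EMPLOYEE = 10  # monthly licence fee per employee, in dollars

# From the conversation: 5 hours a month lost by 20% of employees.
wasted = monthly_time_cost(HEADCOUNT, 0.20, 5, HOURLY_COST)
saas_cost = HEADCOUNT * SAAS_FEE_PER_EMPLOYEE

print(f"Time lost on the old system: ${wasted:,} per month")
print(f"SaaS licences for everyone:  ${saas_cost:,} per month")
print(f"Hidden cost is {wasted / saas_cost:.1f}x the licence fee")
```

With these made-up inputs the hidden cost comes out at several times the licence fee; with real figures the ratio could look quite different, which is the point of running the numbers instead of treating the tool as a fixed cost.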

Because we're not evaluating opportunity costs, we're just evaluating costs in a vacuum. But then secondly, I'd say, I think it's fine and good for data to have this slightly unfair expectation of proving our value. The reason is, maybe you tell me, look, by and large, we don't need to think about the ROI of the laptops we have and of using Microsoft Office, because it's well established, it's commoditized.

We know that we just need to have it in our business. We just need to have some HR information software. We just need to have a payroll provider. And for some of those, actually, yeah, cheapest is best. It doesn't make a big difference if we're using the newer, fancier payroll provider; its job is to just get money from A to B. In data, that's less the case, partly because we're a more nascent profession. We haven't figured out how to professionalize our industry, if that's even the right thing to do. There are a lot more question marks. But also, secondly, we're not just providing BAU services to the business.

We're helping innovate. Or at least, for me, those are the more value-additive things a data team can do. It's not, we're gonna churn out BI dashboards showing metrics for the sake of metrics. It's, we will help the business use data to improve the quality of decisions, to potentially unlock new streams of revenue, to build new products.

Now with AI, and the fact that you basically need your data function to build a lot of the infrastructure if you wanna have an AI feature in your product, that's becoming more obvious. But it was true before that as well. It was true before that machine learning could be used not just to recommend a decision in a BI dashboard that someone can ignore, but to actually automate a certain part of the process, to automatically spit out recommended content for someone looking to watch a movie or looking to buy something from an e-commerce store or whatever it might be. I don't want the data team to act like a cost center, because then we're just gonna default to doing bare minimum, low-value-adding tasks that can be commoditized and expressed in the form of ServiceNow or Jira Analytics. And maybe we need to do a little bit of that, but if that's our main focus, I'm gonna change careers.

Shane: Yeah, you made me giggle on the expense one. I remember it was probably two examples, actually. One was somebody working in the defense forces who could go out and spend billions of dollars on new tanks, but then had to go through seven layers of approval in the expense system to get their parking ticket, the cost to park their car, paid. And then somebody who was working for a software company and used to do seven-digit sales, and again, same thing, would spend four hours of their own personal time on the weekend going through the expense system to claim back the coffees. It's just amazing when you think about the cost of that.

Alright, just looking at time, I just want to close it out with a question around what makes a good product manager, and let me frame it in a certain way. Back in the day, when I was working with teams that were trying to adopt Agile and were primarily going down the Scrum path, so picking up the patterns from Scrum, and yeah, good point on SAFe, we probably don't have time for me to rant enough about that one. But in Scrum, one of the roles that was common was the idea of a scrum master. And what I found was business analysts, for some reason, naturally did the scrum master role. If I was looking for somebody to come in as a new person into that role and somebody had a business analyst background, I found they approached that role in a certain way that made them and the team successful. Whereas in my experience, and this is my experience, if somebody came in from a fairly long project management background, they didn't.

After a while I saw the pattern, and the pattern really was that a project manager wanted to stand in front of the team, and a business analyst was happy to stand at the back. But what have you found? If you were looking and saying there is a natural set of skills or a natural role that lends itself to adopting the role of a data product manager, what have you seen? Where would that be?

Nick: I've thought about this quite a bit. I'd say it's two things that we can call aptitudes, but I think they're learnable as well. It's not, oh, inherently you need to be like this. It's not about are you technical, are you not, it's not, have you worked in data? It's not, have you worked in product before?