Can AI tools bring back data modeling with Andy Cutler

AgileData Podcast #78

Join Shane Gibson as he chats with Andy Cutler about the art of data modeling and the potential of AI tools to improve the art.

Listen

Listen on all good podcast hosts or over at:

https://podcast.agiledata.io/e/can-ai-tools-bring-back-data-modeling-with-andy-cutler-episode-78/

Subscribe: Apple Podcast | Spotify | Google Podcast | Amazon Audible | TuneIn | iHeartRadio | PlayerFM | Listen Notes | Podchaser | Deezer | Podcast Addict |

You can get in touch with Andy via LinkedIn or over at https://linktr.ee/andycutler

Tired of vague data requests and endless requirement meetings? The Information Product Canvas helps you get clarity in 30 minutes or less?

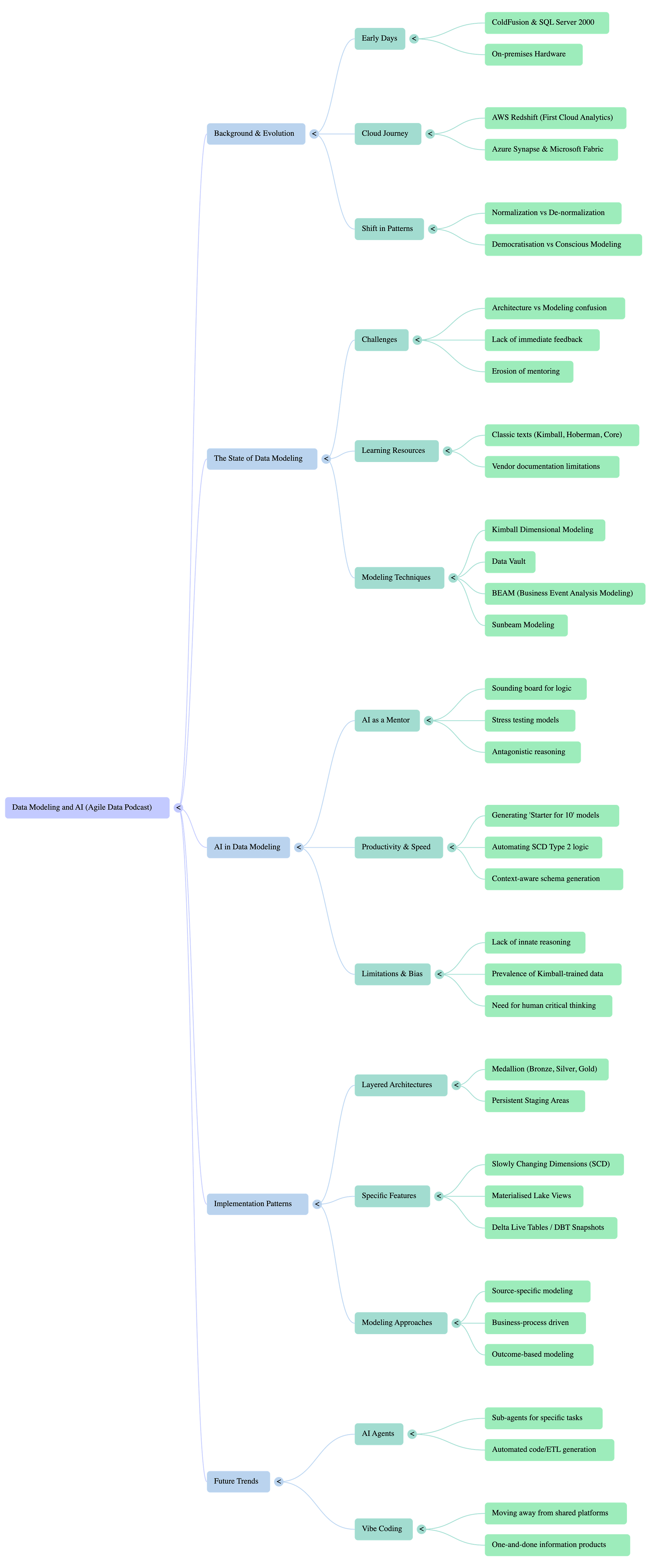

Google NotebookLM Mindmap

Google NoteBookLM Briefing

Executive Summary

This document synthesizes a discussion between data experts Shane Gibson and Andy Cutler, focusing on the persistent challenges and future direction of data modeling within the modern data landscape. The central argument is that while data technology has rapidly evolved toward accessible, powerful cloud platforms, the discipline of data modeling has been neglected, creating a significant knowledge and practice gap.

The conversation identifies a “repeated and constant battle” to prioritize modeling over the immediate appeal of technology, which provides instant feedback that modeling processes lack. This issue is compounded by a decline in traditional mentorship, where senior practitioners historically guided newcomers. Modern data platforms from major vendors like Microsoft, Snowflake, and Databricks are criticized for lacking integrated, opinionated tools that guide users through modeling processes, forcing practitioners to manually implement common patterns like Slowly Changing Dimensions (SCD) Type 2.

The primary conclusion is that Artificial Intelligence, particularly Large Language Models (LLMs), presents a transformative solution. AI is positioned not merely as a code generator but as a new form of mentor and assistant. It can educate novices, generate starter models, and, crucially, act as an “antagonistic” agent to stress-test models for future flexibility—replicating the critical feedback once provided by experienced data modelers. The effectiveness of these AI tools, however, hinges on providing them with opinionated constraints and clear business context to generate practical, fit-for-purpose models rather than theoretical, unimplementable ones.

The Evolution of Data Platforms and Recurring Patterns

The discussion begins by contextualizing the current data landscape within a 25-year evolution of technology. This history highlights a recurring cycle of platform development and a significant shift from capital-intensive, on-premises infrastructure to flexible, cloud-based services.

From On-Premises to Cloud: The journey is traced from early-2000s technologies like ColdFusion and SQL Server 2000, which required purchasing and managing physical hardware (e.g., “compact three eight sixes”), to the advent of the first cloud data warehouses like AWS Redshift, and finally to modern platforms like Snowflake, Databricks, and Microsoft Fabric.

Democratization of Compute: This shift democratized access to powerful computing resources, moving from multi-thousand-dollar hardware purchases to pay-as-you-go cloud services.

Recurring Cycles: A pattern is noted where the industry moves from installable software to pre-configured appliances and now to cloud-native databases. Despite these technological waves, fundamental challenges, particularly in data modeling, reappear. As Gibson notes, “every technology wave it seems to become hot and then cold.”

The Persistent Challenge of Data Modeling

A core theme is the struggle to maintain the discipline of data modeling in the face of rapid technological advancement. It is often seen as a difficult, time-consuming process that lacks the immediate gratification of working with new tools.

A Constant Battle: Andy Cutler describes a “repeated and constant battle to make sure that data modeling is at the forefront of a data platform project.” He argues that modeling is frequently deprioritized in favor of focusing on technology.

Architecture vs. Modeling: A common point of confusion is the conflation of data architecture patterns with data modeling patterns. Cutler clarifies this distinction: “The architecture enables the modeling. The modeling is put over the architecture.” He notes that patterns like the Medallion Architecture are data layout patterns, not a substitute for disciplined modeling techniques like Kimball or Data Vault.

The Lack of Instant Feedback: A key insight is that technology provides immediate, binary feedback (it works or it doesn’t), which is psychologically rewarding. Data modeling, in contrast, does not. As Gibson puts it, “I can’t get instantaneous feedback that my model is good or bad or right or wrong... a model that you’ve created six months, a year down the line when all of a sudden something happens... the model isn’t flexible enough.” This delayed feedback loop makes technology more appealing to practitioners.

The Decline of Mentorship and the Knowledge Gap

The conversation highlights a critical loss of institutional knowledge transfer. As tools have become more accessible and projects faster-paced, the traditional mentorship structures that trained previous generations of data professionals have eroded.

The “Grumpy Old DBA”: Learning was often driven by experienced seniors, colloquially the “grumpy old DBA,” who provided critical feedback and guidance on performance, design, and best practices. This hierarchy of mentoring was essential on expensive projects where mistakes were costly.

Erosion of Foundational Concepts: With modern, abstracted tools, new practitioners are often not exposed to foundational concepts. The example cited is a user asking, “what is data persistence?”—a concept ingrained in older professionals who used tools that required manual saving (e.g., pre-cloud Excel).

Lack of Accessible Learning Resources: While foundational books from authors like Steve Hoberman and The Kimball Group still exist, formal courses and guided learning paths for modeling are less prevalent. Unless actively guided to these resources, newcomers may not discover them.

The Inadequacy of Modern Data Modeling Tools

A significant contributor to the modeling gap is the lack of robust, integrated, and opinionated modeling tools within major data platforms.

Vendor Agnosticism: Vendors like Microsoft, Databricks, and Snowflake avoid baking specific modeling methodologies into their platforms. They provide a “canvas” and “paintbrush” but “don’t help you draw the picture.” This forces users to bring their own process and often use disconnected, third-party tools.

The SCD Type 2 Example: The implementation of Slowly Changing Dimension (SCD) Type 2 is a prime example of a common, well-defined modeling pattern that largely lacks out-of-the-box support. Practitioners are still required to write custom code to handle historical tracking, even though it’s a fundamental requirement in dimensional modeling. Databricks (Delta Live Tables) and dbt (Snapshots) are noted as exceptions that offer some built-in functionality.

From Conceptual to Physical: There is a lack of end-to-end tooling within platforms like Microsoft Fabric that facilitates the entire modeling lifecycle, from conceptual design through logical design to the automated generation of the physical model.

Artificial Intelligence as the Future of Data Modeling

The discussion concludes that AI, particularly in the form of specialized LLMs, is poised to fill the void left by declining mentorship and inadequate tooling. AI can act as an expert assistant, a sounding board, and a critical partner throughout the modeling process.

AI as Educator and Mentor: For those new to the field, AI can act as a guide, explaining different modeling patterns (e.g., Dimensional, Data Vault, Third Normal Form) and helping to translate business requirements into an initial model. This helps bridge the knowledge gap. The tool Ellie AI is mentioned as a specific example of an LLM-powered tool focused on guiding users through data modeling.

From Generation to Antagonism: The most powerful application of AI is not just in generating a model, but in stress-testing it. The concept of using an AI to be “antagonistic” is raised, where the user can prompt it to find weaknesses and potential future problems.

The Power of Opinionated AI: An unconstrained LLM may default to the most prevalent pattern in its training data (likely Kimball modeling, due to the volume of public content). The true value emerges when the AI is given specific constraints and opinions. Key inputs that improve AI model generation include:

Source Context: Providing the AI with source schemas and metadata.

Design Patterns: Instructing the AI to use a specific, opinionated modeling pattern (e.g., “concepts, details, and events”).

Business Boundaries: Using artifacts like an “information product canvas” to define the specific business outcomes the model must support, preventing it from over-engineering.

Multi-Agent Approach: A proposed advanced approach involves using multiple AI agents with different perspectives (e.g., one focusing on source systems, one on business processes, one on reporting outcomes) and having them “antagonize each other” to arrive at an optimal, pragmatic model that balances all constraints. This mimics the cognitive process of an experienced human modeler.

Tired of vague data requests and endless requirement meetings? The Information Product Canvas helps you get clarity in 30 minutes or less?

Transcript

Shane: Welcome to the Agile Data Podcast. I’m Shane Gibson.

Andy: And I’m Andy Cutler.

Shane: Hey, Andy. Thanks for coming on the show. Today we’re gonna have an intriguing track around data modeling and whether it’s been done not being done, gonna be done and how tools and AI can fit into, it’s it’s a passion of mine. But before we jump into that conversation, why don’t you give a bit of background about yourself to the audience.

Andy: Yeah, sure. Thanks Shane. so my name’s Andy Cutler, and I’ve been working. In the data space for about 25 years. So back in 1999 when I was working for a tiny little UK drum and bass record label called good Looking Records I was given the role of updating the company website and that ran on a technology called ColdFusion and I got quite into web design.

So I then managed to get myself a job, and I guess it was the first IT job that I had designing websites. Then that website agency bought a SQL Server 2000 license, and I know there’s older versions of sql. I’m, really aware of that. But that was the first version of SQL that I got my hands on, and I was tasked with building the data models and the store procedures to run CMS systems.

And then five years later, so mid two thousands, I am working in the data warehousing space. So I’d learned everything about database normalization, third normal form, so on and so forth. And then I was told to unlearn that all because I needed to de-normalized everything for data warehouses. So I’ve literally worked for the last 20 years in data warehousing.

The last few years has all been cloud-based. I actually started my cloud journey with AWS and Redshift. That was, when we’re going on for sort of 12, 13 years ago now. And then when Azure really started to motor with the products and services, I was then using tools like Azure, SQL data Warehouse.

That then moved into Synapse Analytics and for the last couple of years it’s been Microsoft Fabric. So really it’s been predominantly the Microsoft data space that I’ve been working in. .

Shane: Excellent ColdFusion and SQL server in the early days. That was back in the days where we had to buy our own hardware and put it under our desk or in a cupboard or, pre-data center. I remember back then I, we were doing some stuff and my first job and, we were buying compact three eight sixes and the argument was do we get an SX or a dx?

And I, I can’t remember. I think. Had , four mega memory and it cost the organization $35,000 New Zealand dollars back then. Yeah, times have changed. Right now you can spin up those kind of things by just putting your credit card in or even getting your free tier and . You got a massive amount of firepower in there.

Andy: Yeah, and that’s the thing is it’s also a little bit disconcerting now because all that compute is sitting in the cloud. Certain vendors like to show compute in certain ways and show you cause and other vendors, they like to obfuscate that behind other kind of terminology, which yeah, guess Microsoft do.

So you’re trying to map the cloud compute with what you’re doing with on premises, right? And saying, okay, I’ve got a certain amount of cause and I’m running this certain amount of workload on premises. What does that look like when. I go to the cloud and I’m then dealing with a service that I can’t exactly a hundred percent map to those on-premises calls, but yeah, I to, I totally get it.

You’re choosing, hardware to run that software. You’re configuring that software as well. You’re configuring that software to death to get as much as you can out of that hardware.

Shane: Yeah. And I think like you, I, when I had my consulting company, when Redshift and AWS came in we jumped on board really fast. ‘cause in those days, you’ve had to buy a big million dollar Teradata box or you had to buy some Oracle database and again, rack and stack it with some leased hardware. And when Redshift turned up, that really was the first cloud database for analytics. And it’s interesting how, it’s lost market share. It was first to market and it’s obviously been taken over by Snowflake and Databricks and a few others. So what’s interesting for me is we see these patterns getting repeated.

We see, databases where we used to have to install them, and then we see databases as an appliance where you buy the hardware and the database used to come with it pre-installed. Now we’re seeing cloud databases, Redshift being the first and some more oh, should I say, modern. So ones that solve some of the problems I’m gonna have to vacuum your database for anybody that’s dealt with a Redshift cluster before. and so one of the things that’s interesting is this idea of data modeling. Because, I’ve been in the data space for 35 years and we’ve always modeled data, but every technology wave it seems to become hot and then cold. It’s yep we model, and then no we democratize.

And people can do all the work without any conscious data modeling. So what are you seeing in the modding space and in your part of the world at the moment?

Andy: I am seeing, and I’m gonna speak honestly, and off the cuff here. I’m seeing a repeated and constant battle to make sure that data modeling is at the forefront of a data platform project. That it isn’t just about the technology and it isn’t just about the data layout patterns that we’ve seen.

One of my sort of bug bears is that I’ve seen a lot of articles on LinkedIn comparing architecture patterns with data modeling patterns, and I’m thinking, hang on, you are really comparing apples to oranges. The architecture enables the modeling. The modeling is put over the architecture. I can’t imagine a scenario where any CIO or CTO is gonna talk to, members of their team and ask them, should we architect our data a certain way versus modeling it a certain way?

No. They are complimentary. So in the last few weeks, I’ve been thinking about the data modeling side of things and asking myself is it because it’s hard to do? Is it because it requires thought and time and collaboration? Where sometimes technology is a little bit of an easier thing to do.

There’s still lots of human elements in using and deploying and working with technology, but I just feel that data modeling, you really have to have that community grounding where people are working together to get what they need from the data into a shape that’s actually useful for their business. So yes, that was my framing on that, .

Shane: It’s an interesting lens and for me I’m with you, right? I talk about. Data architecture layers. . So how we are laying out the architecture of our data across our platform. And I’m a great fan of layered architectures. I’ve used them for many years and I see massive value in them. And then once I have , an idea of the layers, then I can talk about what data modeling patterns we want to use in each layer. And I’m a big fan of mixed model arts, which Joe Reese talks about in my experience, I’ve never actually used a single data modeling Pattern. Even back when I was dimensionally modeling I always had a persistent staging area that was some form of native relational model that met the structure of the source system before I moved it into the dimensional model. So if I think about that in terms of data architecture, and then I think in terms about data modeling, then I think in terms of technology, and I asked myself this question if I was moving into the data domain. For the first time at the beginning of my career, where do I find any information around data modeling? Like how do I learn? Because the courses and the books that we had when we started have the books are still there, but the courses have disappeared to a degree. I think part of the problem is lack of accessibility , to that content for some reason. But the other thing that you just raised, and I’ve never thought about it this way, is as a technologist, if I want to earn a product, the product, I give it a go, I read some of the documentation. Like these days I’ll probably perplexity it. And I get to have a go and I get immediate feedback. So I can, install the software or turn it on in the cloud, and then I’m immediately able to log on and then I can give it a whack. I can try to use it and it will give me feedback. If I’m doing that with data modeling that’s not true. I can’t just turn something on. I can’t get instantaneous feedback that my model is good or bad or right or wrong or doing what I want. And yeah. It’s an interesting point you raised. Maybe that is the reason that people love to play with technology because they can just learn it against an instantaneous feedback and probably that adrenaline rush.

That first time you load the data up and your dashboard turns up with a pretty graph, you’re like, eh, that was pretty cool. That’s a bit of an endorphin hit. Whereas I created a data model in Miro. Yeah. That’s a picture. It’s interesting lens.

You’ve got do you agree that one of the problems is lack of access to content and ways of learning what data modeling is and how you use it?

Andy: I think it always has to be a constant conversation with the data people around modeling, and it always has to be brought up, but it always has to be. Marketed and communicated that the technology is just the starting point. Modeling comes into it as well. You are delivering a product that is the amalgamation of the technology.

Of course, it is the process that the organization requires that data technology to do, and you’ve got there through modeling and landing the data in the right way for that organization. If we go back to resources, and you touched on a point there around the books that we had access to and the resources that we had.

So yeah, I remember Steve Hoberman’s data modeling books. I then remember. The Kimball Group books. I mean they were, and I’m, looking at the Kimball reader on my shelf right now and the Dimensional modeling toolkit because , yes. You required database technology to implement that model, but the technology could be from Microsoft, it could be from Oracle, MySQL, Postgres.

It didn’t need to be a specific vendor. You were applying a process to a technology. So unless you are guided towards these resources, and I was told, I remember being told in the early two thousands to go, I think it was a database administrator who told me to go and learn about normal form.

Database normalization because the data had to be structured in a certain way to facilitate a transactional system, right? Database normalization. And then when I started to move into more of the data warehousing and analytics side of things, again, I was guided. I was told it was a MicroStrategy consultant that I was shadowing so that I could understand the data warehouse that he was implementing.

Again, that consultant pointed me in the right direction. He pointed me to towards books and said I’ll, I can teach you the basics of this now, but of course you are going to have to get hands on. You’re gonna have to learn this. And at at some stage, I was told that I would have to learn these things and.

Get hands on. And I think that unless you’ve got those people now who are guiding people to do that, people have gotta be very proactive and they’ve gotta be going out there and they’ve gotta be saying, okay, I’m building a data platform. I’m using this technology.

What else do I need in terms of my knowledge that I need to apply to that as well? So there is a certain amount of guidance, and this is, I hope, being passed down by people. There are, great people out there like Johnny Winter who are talking about data modeling and, we’ll get onto the data modeling AI topic in a little bit that I.

Essentially took from Johnny, right? Johnny was talking about this and I looked at this and thought, wow, this is, yes, this is relevant. I like this. But yeah, so that’s what I feel. The other thing is, if you look at vendors, are vendors necessarily pointing people in the direction of the modeling processes?

I’m a Microsoft data developer, and throughout the years there have been various books around how to apply things like dimensional modeling, a modeling patterns to Microsoft products, right? And even now, you can go onto the Microsoft Fabric learn documentation, and in the warehouse, the fabric warehouse documentation, there’s resources around dimensional modeling.

They will tell you how you can do that.

So the vendors, like I said, have been providing some of this guidance and some of this documentation, but it’s very it’s small fry, right? It’s a few pages in their documentation on how to do it. So you’ve gotta, you’ve gotta be mindful of the fact that when you are coming at these data platforms, that you’ve got to be doing the modeling behind it as well.

And it has to be front and center to a project. Yeah, so that’s what I think about that.

Shane: Yeah. And I agree with you that I, if I remember a lot of my learning was from that grumpy old DBA. When you used to deploy something and it ran like shit I remember the standard response from the grumpy old DBA is when you said, oh, my, my ETL loads are going too slow. They used to always reply with, compared to what, that was standard response. And then, eventually they go and help you tune it. They typically tell you it was a data model or your code that was wrong, not the database. And then, again, if I think back, I think you’re right that often the projects we are doing were expensive and expensive ‘cause the people and time. Therefore there was a whole hierarchy of mentoring because you couldn’t afford to have somebody just rush off and do something that didn’t fit the way everybody else was working. So there was always a mentoring process where somebody more senior experienced than you, took you on the journey to learn to do things the way they did. And I think what’s happened is as we’ve got new technologies, we’ve got new forms of democratization, and therefore people are able to do the work quicker and faster using those tools. And we’ve lost that mentoring process, that knowledge transfer outside the tool itself. And also people aren’t being taught what we got taught in the early days.

We did a podcast or a webinar and we’re talking about data layered architectures. And a template that I’ve been working on. And one of the questions in that was, is the data persisted in the layer or is it virtualized, right? Or is it temporal memory? And one of the questions we got online is, what is data persistence? And I sat back and went, holy shit. And one of the comments from Chrissy was doing the webinar in Ramona with me was, know what persistence is because we’ve worked with tools when Excel didn’t save. And if you didn’t hit the little dis get and persisted that data down to your laptop and your laptop crashed, you’d lose it. Now we’ve got Google sheets where you type, it saves, it persists the data. We don’t have to care about that. And so I think for some of us, we forget some of the core foundational concepts that we just learn by doing things outside of data to a degree that we’ve applied. So if we think about that, then. Is the problem with data modeling right now? Lack of tools really there is still a lack of data modeling tools in our data stack. Or is it a lack of mentoring and therefore is AI the answer? Do we actually now see AI bots or AI clones or whatever we wanna call them, that actually become the daily modeling mentors for people that don’t have a physical mentor like we did? What do you think?

Andy: I think that the technology itself, and I’m talking about it could be Microsoft technology, it could be Oracle, it could be Databricks, snowflake, so on and so forth. They don’t have any guided ways of creating a model. I reckon the last time that I used a piece of software that guided me through the process that was very aligned with a very specific data modeling technique was analysis services multidimensional, where objects inside this model.

Were named after a specific modeling Pattern. So your facts, your measures, and your dimensions. If we’re talking about Kimball dimensional modeling, so that was really the last time that I used a piece of software that was quite aligned and essentially had a wizard that would take you through a modeling process.

‘ cause of course now we’ve got different modeling processes we could model. Yes. Third normal form. We could use dimensional modeling, we could model it data vault, and no vendor is locking themselves into a specific Pattern, right? Vendors are saying, Hey, you can bring this specific type of modeling to our software so they’re not baking in a specific modeling process.

One of my real asks in terms of software is to start to bring in some of these modeling aspects. I would love functionality, like slowly changing dimensions. So this is a dimensional modeling concept, which adds historical records to a, a reference table, to a dimension table.

And this is just my experience with the software that I’ve used. I really only see, Databricks that have done this, slowly changing dimension type two in their Delta live tables or lake, Lakehouse declarative pipelines. DBT, they’ve implemented slowly changing dimensions in something called snapshots.

So it is there, but it’s a little piecemeal and it’s not necessarily being massively called out in terms of this is the modeling Pattern and this is the feature that we’ve implemented for you to be able to realize that modeling Pattern. So then you were talking about ai, right? And this is where we start to get into productivity.

This is where we start to get into how can someone with not much experience of something ask AI to help them. And this is a classic case of where AI can help and start to move someone towards understanding how they work with the business. How they would evolve a Pattern as well. And like you were saying before, , people can get hands on with technology, they can get cracking with technology.

It’s binary, something’s gonna work or it’s not. If you model some data, you might not know whether that model works that you’ve created six months, a year down the line when all of a sudden something happens. That means that the model isn’t flexible enough. It hasn’t been thought of it, it hasn’t, there hasn’t been enough collaboration to understand the impact Act.

I went to the Fabric London user group. I was talking at the user group, but I was talking on technology, right? I was talking about a specific feature within fabric called materialized Lake Views. A little, segue from our conversation, but it’s a feature. It’s a feature in a piece of software from a vendor.

Johnny Winter was there, and he was talking about data modeling. He was talking about, sunbeam modeling. And this is a modeling practice that I’ve used in the past only because Johnny Winter has been talking about it, because it brings together a couple of areas of data modeling that I have used.

I just never thought there was this, port manto of these things. Beam business event analysis, modeling from, Lawrence Corr and the, the great Agile, data warehouse or Jim Stato and Mark Whithorn, which I now know is the pronunciation who was championing sun modeling, in terms of, a central event and then your sunbeams were your reference data in terms of how you brought context to that data.

And of course that was just the modeling aspect. But Johnny then started to show a tool called LE ai and that really got me thinking. So this is a data modeling tool in which. It’s focused on data modeling. It’s focused on understanding how to build enterprise data warehouses, how to help someone and guide them through the process of creating a data model.

And it’s very different from something like a data warehouse automation tool, right? So there’s data warehouse automation tools out there, either wcap and such in which you pretty much have to know the modeling Pattern while you are using these tools. Whereas all of a sudden I’m looking at Ellie AI and thinking, oh, okay, I get this now.

It is essentially an LLM or maybe multiple LLMs that’s been trained on data modeling, perhaps on architecture, layout patterns, technology even. ‘ cause it can help it then shape how it. It helps the user as they prompt their way to a data model. And at first I looked at it and thought it’s just another AI tool.

There’s millions of AI tools out there now. But I did think, hang on, if this is an AI tool that’s helping people data model, this can only be a good thing. This could only be of benefit to people to use something like this to help them through the process of modeling. They’ve got the technology they can use, co-pilot or chat GPT to help them write code.

But they’ve got these tools with the subject matter expertise of data modeling to help them and guide them through getting to a state where they might not have these problems in 6, 9, 12 months time of a model. The isn’t flexible enough because they can prompt their way into flexibility. So that was what I was looking at specifically with the AI tools.

Shane, so I’m curious to think about what you think about somebody who doesn’t have much domain expertise in data modeling, but is working in the data space using , these AI tools for modeling.

Shane: there’s a lot to unpack there. I’ve just made a whole page of note. So let me go through from the beginning of what you talked about and replay it back and my thoughts around it. I liked your point around opinionated tools. If I think back in, previous of technology for the data space, the tool really supported one modeling Pattern. Yeah. And realistically it was Kimball, Kimball was the number one data modeling Pattern that I ever saw in data warehousing in the old days. And that was because wrote good books. He shared his content online with his blog, which was free and easy to access, and he ran great courses. So getting access to how to model, he was the most accessible piece of content that you could find, and it made sense. I’m a big data vault fan. I like the physical data vault modeling Pattern. I’m not a fan of the data vault bi methodology but I find that content and access to how to model using the data vault Pattern of hub sets and links is incredibly hard to find.

It is poorly written. It is pay. And so I think that’s why we see with the advent of DBT and when people started to realizing they had to model data consciously, we saw Kimball take off again, right? Because the old content is still valid. Just on that though, I was really intrigued about your SCD two comment. So let’s take a segue on that and I’ll come back to the AI stuff in a minute. Because I remember with the original ETL tools that we were using, there was never a native CD two node. We used to always have to bloody well write that node ourselves. then when the cloud analytics databases came out, would’ve just made sense for me for CD two behavior.

That Pattern of historical recording of change data to be a database feature. . Not be a piece of code to detect the change and store it, but just make it a feature in the database to say, this table is SCD Type two table, because the database could take care of that change detection the, end dating or the flagging of a current is record. And so it’s really interesting that SCD type two is one of the patterns that you use for dimensional modeling all the time. When you’re physically modeling using that Pattern, but somehow we still seem to be lumbered with write some code that deals with it. Is that your experience? Are you still seeing people having to write code to, to implement the type two Pattern?

Andy: Yes, I am basically, and this was even talked about a few days ago, so I was at a little conference in Birmingham called fab Fest, which was focused on Microsoft Fabric. I was speaking about a function called, or a feature called Materialized Lake Views, which is essentially like Databricks is Delta live tables or lake flow declarative pipelines as they’ve called now.

‘cause they’ve, they’re abstracting it away from Delta because it’s not just Delta that this technology supports, but also DBT and someone said, why have we not got this SCD outta the box functionality? Because it’s almost like slowly changing dimensions.

Have they transcended the specific methodology in which it would be used in? And what I mean by that is when you started learning Kimball and you started learning dimensional modeling, one of the sub-categories. Was dimensions and one of the sub subcategories was slowly changing dimensions and all of these different types.

So type two has probably now become the champion, right? It’s the one that will track changes over time by adding new rows of data and the associated metadata to keep it. Yes, there are, type four and type six and type three, and all of those have their reasons to implement them. Type three, you’ve got multiple columns which can store, the current data and then the previous amounts of data, but they are a little bit more difficult to implement.

So most of the time people will use slowly changing dimension type two, because that’s the one that is the most. Relevant, the most prevalent and the ones that’s easy to implement. However, unless you are using some tools that have this functionality in built, like I said, DBT have functionality called snapshots, Databricks in their, lake flow declarative pipelines.

You can declare an SCD type. It’s not massively prevalent and soaked in to the data landscape. So this person I remember at this conference was saying I’m still having to write my code to implement my slowly changing dimension. I’m still having to say these are the columns that I would like to track.

This is the key that I would like to join on. These are my columns that I’ve defined for my metadata, my from my two, my is active. If you have that, any other metadata columns that you want to be able to track your changes. Then, and my last point on this is we look at the medallion architecture right now, I’m not gonna go into the ins and outs of, should we call it the medallion architecture, because to my mind it’s a data layout Pattern.

It’s not necessarily an architecture, but it has these different zones of data. We know raw is bronze and silver is cleansed, and gold is modeled, but silver. Which is the cleansed data. It hasn’t yet got to a stage in which it’s been modeled to a specific Pattern. There’s a lot of advocates that want to apply slowly changing dimension functionality to that silver data, but it’s not modeled yet.

It’s existing at the same granularity as the raw data. Obviously it’s gone through dedupe, it’s gone through cleansing and all that kind of stuff, but it’s not modeled yet, but we’re applying something that was a modeling Pattern or a modeling feature into this store of data. So I find that quite interesting as well, is that this SCD has almost been extracted as a feature of a modeling Pattern and can just now be used as a way of tracking changes over time.

But To your point, I just don’t see enough of that functionality automatically added to database and data products. People are still having to do it themselves.

Shane: And that affects adoption because if we say that a simple technical Pattern of type two implementation, where we know what the patterns are, right? We know that is detect change, insert row, start date, end date. If that , what you like is active or as current as a flag. We could actually just add all of those, and I remember in the early days, when we were constrained on database technology where the cost of those servers was expensive, the cost of the licenses were horrendous. We had to optimize to reduce costs. You probably remember it, we would argue type one versus type two. And we would type one by default, and we would type two where we knew there was value because the cost of type two was higher than type one. Now with cloud analytics databases, we really don’t give a shit, we just type two everything because we can. And it saves us problems later. And people are more expensive than those databases. And so we can be lazy to a degree, but there’s value in being lazy. What’s interesting for me is that Pattern is a very well known Pattern, yet it hasn’t become opinionated in tools. And when we talk about data modeling patterns, do we go. Snowflake versus star versus data vault versus anchor versus hook versus unified star schema versus anchor, there’s all these other patterns where actually they’re a little bit harder to be opinionated about. and therefore they’re harder to bake into a tool. So if we can’t do the simple stuff, how do we expect to do the hard stuff? And then the last thing I’ll say before we move on to the next part of your point that you had earlier was your silver’s, not mine. I think medallion has been great because it’s reinvigorated the conversation around layered data architectures and the value of them. But when you talk about your silver as being cleansed, I talk about my silver as designed and I model. We have a an opinionated data modeling Pattern, which is concepts, details, and events.

That’s how we model and what we call our silver, our raw is historicized. We are effectively applying an SCD two type Pattern on our raw data for a whole lot of reasons. So again, the problem with medallion is, it’s a nice way of describing a layered architecture. But soon as we get into any detail of what you’ve got in your layer, it’s not what I’ve got in my layer, and that’s okay.

As long as you tell me that you are cleansing in silver and it matches the structure of your source, and you tell me you model in gold, I get it. Now I get your architecture by you just using those words that opinion you have applied. So I think that’s the key, is no more, there’s only one way to Medallia.

It’s what do you mean by silver? So let’s jump on then, and then let’s talk about tools because. In the past we had tools like Irwin, oh God, ea sparks. We had some really hard to use data modeling tools to draw diagrams of what our models look like, and typically they were completely disconnected. We found it really hard to draw a diagram for our conceptual and physical model and then get that model as substantiate in our database easily. And the modern data stack, in the previous wave, now that it’s dead, we saw tools like Ali and sql, DBM, we saw visual modeling tools come out. But what was interesting is they became category, they were a party or stack.

So if you had a on data stack, you’d end up with five to 10 different tools to do your end-to-end processing. And we’ve seen a lot of consolidation in the market. We’ve seen a lot of those tools that are part of the stack disappear or get acquired or become features in, in one of the other tools, we haven’t seen that for data modeling. We haven’t seen the data modeling capability being bought back into those end-to-end stacks. And again I don’t do a lot of work in fabric, but I don’t think, apart from the power bi SQL server analysis services part of the Microsoft stack, they’ve never really had a modeling tool, have they?

There’s no tool I would go into that would help me create a conceptual or a physical data model and instantiate the physical model in a database within the Microsoft stack, or is there.

Andy: So this is probably one of the most asked questions in forums around the data modeling aspect because as you’ve said, we’ve had, tools over the years that have enabled us to do data modeling. Even SQL Server Management Studio, which you can download for free has got this almost live data modeling process attached to it where.

It’s very much a physical design Pattern. You can’t logically design something in its interface in fabric. We’re still there. We haven’t got anything that’s gonna help us logically design a data model outta the box. There’s nothing that we can start with and say, okay, I wanna start with the conceptual data model.

I wanna go down into a logical design, and then finally a physical design of my lake house, of my warehouse. we’re having to still use other tools to be able to do that. So whether people still use, Visio, whether they’re using, other things, AATE or SQL database modeler.

I think even Toad, for these kind of modeling tools, one of the things that I didn’t like is when Microsoft did deprecate some of the data modeling tools that were available within Visual Studio, I just thought you’ve got to have something that can help a process. When I look at Fabric or even Databricks, snowflake, all of these sort of cloud vendors is, they are the canvas, they are the paintbrush, but they don’t help you draw the picture.

You need a process to go and help you draw that picture. And there are, there are cloud tools that help you do the data modeling. Some of them, I think a lot of them are paid because. To go from conceptual to logical, then physical is a natural progression. People want to be able to create the physical data models.

Ultimately, yes, some people might be a little bit annoyed that they’re forced to go through, the conceptual and the logical modeling processes before they get down to the physical. But then they want to be able to click a button and it generates the code necessary for them to run and create that physical model.

No, I don’t see anything. And if we’re talking about Microsoft Fabrics specifically, I don’t see anything in Microsoft Fabric that’s gonna help you from the conceptual all the way down into the physical.

Shane: And again, you’ve gotta be, software should be opinionated. So the way we do it is I create the conceptual model and then it generates a physical model without me doing anything because our physical model is opinionated. So our conceptual model is. open in terms of, the things you create, the concepts, the, who does whats, ‘cause I’m a Great Beam fan as well,

lawrence CO’s book is one of the ones I, read early. We used to, when I had my consulting company in New Zealand, in pre COVID, when things went online, we used to fly ‘em over as often as we could to teach our customers how to beam and event model their data.

‘cause it was so useful. And that idea of, your conceptual model of who does what, your core concepts, depends on the industry, depends on the business case, the usage, the actions and outcomes you want to take. So it is a, a little bit less opinionated to a degree. but once you’ve got that sorted, you can make your physical modeling Pattern incredibly opinionated. And that’s why I think these disconnected data modeling tools are struggling from what I can tell. And you’re starting to see them now bring in the ability to actually generate the ETL, the code to, deploy the model and load the model.

Because that’s the space you have to be in. You have to be able to create the model and test it, that it has value. And so when I come back to the AI tools. I’ve played a lot with the lms played a lot with the bots, and I can see as a helper friend, as an assistant, it allows me to ask questions and provide some context around the industry, the use case, and get back a starter model for 10. And that’s really useful. But if I think back to this idea of the mentoring I had earlier in my career by those grumpy data modelers what they used to do was they used to stress test the model. There was something magical about the way you could give them a data model they could just look at it and then they could call bollocks on the things you got wrong.

It was just that innate Pattern matching in their heads. ‘cause they’d done it so often and they could go, yeah. That relationship’s not a one to many. It’s a many to many. It’s not gonna survive. Okay. The rate of change on that table is gonna be horrendous. You’re gonna go blow out your Oracle instance. Yeah. Or you have to upgrade to Oracle Rack, which will cost you, two legs, one arm and your three newborn children. I wonder if that actually is where AI tools have to take us is effectively an agent model, right? Where there is one that helps us build the model and one that helps us stress test the model, which goes back to that point you made a long time ago which was, when we work with technology, we get instantaneous feedback that the technology’s working.

It’s not when we work with data modeling, we don’t. So maybe that’s where the AI tools need to take us, help it to create it. And then another agent, which is, grumpy old data modeler that tells you where you got it wrong. What do you think?

Andy: So I think AI can help all the way through that process. And this is where we start to look at AI as less as a technical tool that will help us do something and more as a sounding board, as something that we can ask it to be quite antagonistic with. As you said, and like I, I raised the subject earlier about AI tools and helping with data modeling is you could go to an AI tool and say someone has told me to design, x, y, z system for argument’s sake.

Let’s say it’s a data warehouse or it’s a lake house and we’re gonna use, a data modeling technique that is best for reporting and analytics. And the LLM might reply and say, okay, well here are a few. Modeling patterns and, dimensional modeling is the one that you would use for a data warehouse and so on and so forth.

So let’s say they then pick that and, carry on doing that, they’ll then ask you questions about, I’ve got all these different entities in my source system. How will a dimensional model help me? So it’ll then work through those entities and say, okay, you’ve got these entities that look like they can be grouped together.

Perhaps that’s a dimension and it’ll work through the process with you. So let’s say you’ve got that sorted, so you’ve applied your critical thinking and not just accepted everything that the LLM has given you. You’ve gone back and dah. Maybe you’ve Googled, maybe you’ve asked other people that are experts in that area to say, okay, you know what?

I’ve spent a whole day generating this model. It would’ve taken me two weeks if I had to learn the theory and then do it. What do you think? I’m sense checking it. Someone might come back and say, okay, we can tweak a couple of things, but actually that looks pretty good. Great. So we’ve got our starting point then.

You talked about stress testing and Yes. So this is where you can then ask the LLM to be antagonistic and say so this is my data model we’ve got here because the business want to be able to report on these X number of attributes and this is how they want to measure it. But I know that there are other source systems and things like that.

Can you tell me what problems this model might have in the future? And of course you essentially, you are asking the AI to try and do some future proofing for you and some troubleshooting. And it might come back with some generic questions about perhaps your product dimension isn’t, deep enough.

What about other entities that you might need to bring in that you are, that you haven’t yet got links in your fact tables, but it’s gonna surface, it’s gonna help surface potential problems for you to then deal with. So I totally get that as well. And then the third point that I wanna add is about anticipating changes.

We touched on, Lawrence Core and, the Agile data warehouse book a little bit earlier, which Yes is, something that I go back to constantly and, we’ve got beam in there to help us do this. But the agile data warehouse is also there to help us iterate over a model as well.

So perhaps we then say to the LLM, look this first version of the model, okay, we’ve gotta set it in stone now because we’ve got project deadlines we need to get the data in because the business are gonna build X amount of reports. And we tried to make the model as generic as possible.

‘cause we don’t want a report driven model. We want the data driven model in here, but help us understand what we might need to do to modify the model and be a little bit agile. So I would say that the usage of the AI tools to help us generate the model is just the first part. Most of it is gonna be about asking the model to be quite antagonistic about the model it’s generated.

Yeah I think that it’ll help us build the model, but the most important thing is antagonize the model, test it hopefully point people in the right direction in terms of future proofing and certainly surface issues that might happen in the future. They might need to fix those issues. I don’t think I’ve worked with a dimensional model yet that doesn’t incur a certain amount of technical debt in how it’s implemented.

But if you can mitigate those things earlier on, that’s just gonna be of benefit. Yeah, so that’s my thoughts on that, Shane.

Shane: Yeah, and that’s that problem between, doing a model quickly that gets value now and trying to boil the

Andy: Yeah.

Shane: for all changes in the future. Or an enterprise data model. Again, going back to that anti-patent, we have these days of, a data modeler sitting in a room for two years doing , one enterprise data model to rule them all that nobody implements. One of the things we’re doing is we’ve been experimenting in this space and we have a bunch of partners use our platform and we got one of them to experiment. And we had a use case around Google ads, so the partner needed to bring Google Ads data in and deliver it for a customer. And so that first part of the agent that opinionated agent that’s gonna help you do the initial model was really interesting. we found was, first thing was we were lucky that Google ads gave us effectively context about the tables. It brought in metadata that described the tables quite well. So that was really valuable for the agent because it now got a bunch of hints of what the source data looked like. The next thing was we have an opinionated design Pattern for the way we model.

And so , our agent already knew about that, so it knew what the rules of the game was, it knew it couldn’t dimensionally model it, it knew, it couldn’t anchor it, knew all the patents it couldn’t use. It knew what our patent looked like. So that opinion was effectively already in the agent. As a a bunch of rules. We also gave it the information product canvas that a partner had done, which is a description of what the actions and outcomes and business questions that need to be answered first. And what that gave was a boundary to the agent to say, don’t model all the Google Ads data. Only model the data that’s gonna support this outcome. So again, it gave it a boundary that led a lightly model. Now, what we didn’t do was, the bit that you are just raising is then stress test change, right? We didn’t say what’s gonna happen next, but deal with that in different ways at the moment. But I’m gonna think about that one really well, so boundary of opinion that was given to the modeling agent meant it did a good job, If I had just said to a, I’ve got Google Ads data and model it, I have a theory and I’m gonna go test this. I reckon every time I ask it that even though it’s non-deterministic, it’s gonna kimble model it. The reason I say that is if you think about why has Kimball and dimensional modeling become the number one modeling technique for DBT? Because if you are an analyst and you are moving into the engineering space and you hear that you need to model some stuff and you use an LLM or you go Google search, you’re gonna come back with dimensional modeling every time. ‘cause as I said, it’s the most freely available. Describe content in the world for data modeling in an analytics space, in my opinion. so therefore, the lms, who trained on everybody else’s content without paying for it, won’t go into that one. It’s gonna have the richest piece of content in the LLM for dimensional modeling. interesting question on that one is actually, if I go and ask an LLM to model it with no constraint, no opinion, I bet it’ll come back with Kimball Modeling.

What do you think?

Andy: And I think the LLM would probably not be displaying any emotion. So let me expand on that. So you touched on data vault earlier and you say that’s a, that’s a data modeling Pattern that you like. If we go back several years, actually decades we’re talking about Kimball versus Inman.

We were talking about dimensional modeling versus third normal form. Then Dan Linted comes along and we’ve got data vaults. There was lots of emotion involved in people comparing these technologies. In fact, all. Of those people, bill Inman, Dan Linted, Ralph Kimball, they all said, and it’s in their books, that these modeling patterns are complimentary.

Bill Inman would say your enterprise data warehouse can be third normal form, but for reporting and, for feeding into analytical tools. Kimball model, the dimensional model is great. Kimball would say, ah, okay, you can do that. But yeah, you can also do your, your enterprise data warehouse and dimensional modeling.

Okay. There might be a little bit difference of opinion there, but they were still complimentary. Even data vault, Data vault, you can’t just plug straight into analytics and tools. and I will. Admit and agree that it’s great for tracking changes over time in the lowest level of granularity, giving you the most flexibility to do what you want with it afterwards.

But a dimensional model is very good in plugging it in. But of course, we see all those debates out there. This versus this, the versus this, the LLM doesn’t will take those arguments into consideration, but it has no bias. It has no emotion attached to any of those modeling patterns. So I would say that LLM will probably come out with dimensional modeling because it’ll reason that, okay, you can store your data this way, but it’s not going to be what you need to design the model that’s going to be delivered to the business.

And I would like to test this as well and antagonize an LLM around these modeling patterns and ask it. Okay, I’m gonna be designing this. What do you think the best model is gonna be? And then I might add in a few, trip wires to it and say what about data vault? And what about this?

And I’m hoping that the LLM would say, yes you can use those modeling patterns, but it’s generally agreed that they are complimentary and that you can add on a dimensional modeling Pattern to a third normal form or a data vault. But I am hoping that the AI has less shall we say, emotion attached to picking that data modeling Pattern.

Shane: if it’s got no emotion, we should ask it for a definition of data, product, semantic layer

Andy: yeah.

Shane: We’d love to argue. I’m gonna, I’m gonna disagree with you on that one. And the reason is I don’t think LMS are reasoning, they are Pattern matching and tokenization based on a bunch of content. my hypothesis, and it is just a hypothesis, is the Kimble and dimensional content is being far more widely available. And therefore that model has a bias towards using it However. It’s just a hypothesis. And one of the things that Joe Reese has been doing as part of the practical data modeling community is just testing stuff live with a bunch of people. I’m gonna suggest you and I do that. I suggest that we figure out how to do a live session. bring up and, multiple LMS and with a bunch of other people watching or helping us. We just bash the snot out of it and try and see actually is there a bias for a modeling technique? But if I take that away for now your comment around some being really intrigued me, because Johnny’s mentioned it before and I struggled to find any content around it, it’s one of those data modeling that is actually quite hard to find anything about it. And good point. I need to get somebody on the podcast to come and explain it. So if I wanted an LLM to assist me in designing a Sunbeam model because I’m opinionated that’s the model I prefer for whatever reason, I think it’s gonna struggle and that will be another test, maybe we can give it a bash on that to, to see , so I think if you’re using an LLM to assist you in modeling and you are using a well-known Pattern that you are opinionated about, so data vault dimensional, third normal form potentially, you probably don’t need to use a lot of reinforcement with the LLM because it knows what you’re talking about.

If you wanted to bring in some of the more obscure modeling patterns, like something, I think you’re gonna actually have to pass it a reinforcement of that content. So one of the interesting things about Joe recently, what he does with the practical data community is he does live sessions where we all jump on screen share and we actually test out a hypothesis. And so one of the ones that he did was this idea of could we start with a business problem in an industry none of us knew. Get the LLM to help us understand the industry, create a conceptual model, move it to a logical model, move it to a physical model, and actually implement that physical model in a database. And that was fun. But what was interesting for me was observing the way Joe approached it, and he comes from more of a data science background than I do. So he tended, in my view, what I saw was approach it from an EDA, an exploratory data analysis approach. So he would be looking at the data sources and trying to understand the data that’s coming in, because that’s how we thought. Whereas for me, I come from more of a business background. That’s how I’ve been trained.

So more of that. Who does what business process stuff. A lot of the beam first part of the book. And so for me, I typically wanna understand the who does, what’s the core business events, the core concepts, and that’s how I model. so again, I think in terms of an AI assistant that helps you model, you probably want to train it around your modeling process.

Or maybe you don’t actually, maybe the LLM should decide what inputs it needs. Rather than you being opinionated on how you typically model, what do you think? First of all, which way do you model? Do you think source specific and understand the data first, or do you try and understand the core business events?

And then do you think you should be opinionated to the LLM about which way it should approach it?

Andy: I’ve always modeled from the source system side of things and then taken into consideration the requirements from the business. And I know why that is, and that’s because I am fundamentally a technical person. So I’ll always want to default to looking at the technical aspects of things. Hey, I can look at source systems, I can understand schemas, I can understand tables and columns and the domain values within that, and then I can look at matching that to what the business wants.

And I’ve worked with people that work the other way around. They are interested in the art of the possible. They’re interested in what do the business need to make the decisions, and then let’s go and find what we need from those source systems. So I’ve moved my needle a little bit more towards the art of the possible, right?

This is what the business ultimately needs in terms of decision making. If they need to be more operationally efficient, if they want to, take advantage of opportunities in the market, challenge competitors, whatever, I then go and look at those source systems and see what’s available. And then sometimes you really can’t realize.

Some of that data that is needed for the business, right? Because it’s just out of the hands of those source systems. And I suppose you could calculate it and you could infer it from that data, but I’ve tended to work that way. And then interestingly, when you were, talking about AI and Joe working through the kind of modeling aspects from that perspective, yes, I can totally see someone asking the model to work with them in a specific way and say, right here is my source system.

This is what I need to do. Now when those source systems, and if you’ve got access to schemas and you can provide that schema to an LLM and let’s, let’s say it’s An LLM that’s secure, it’s within your organization or it’s within your data boundaries. So you can give the LLM that schema and then you ask it to model that for you.

You may then ask that LLM I need to join it with other systems as well, and this is the system and this is the schema. So it could then help you generate the model to incorporate multiple systems or showing you some examples about how you can, join those systems together.

What I wouldn’t want an LLM to do is just go crazy with the business requirements and say, okay, this business operates in. This domain. So this is all of the data that they need to be ultra competitive and at the top of their game. And then I’ve gotta scrabble around trying to desperately find where I’m going to get this data from.

That the model says is going to help me build the perfect data model for the business. I guess I’m a data modeling pragmatist. I would look at the source systems and I would look at how they support the business in what they want to do. And then I would probably work with the LLM in that fashion.

I’d be saying okay, here’s the framing. Here’s the context. This is what we need to measure, but this is what we’ve got in our source systems, and this is the hard facts about what we’ve got in the source systems. Help me build that model that can, realize what the business wants, but work within the limits of what I’ve got with those source systems.

So that’s what I would do.

Shane: I was just thinking then, back to my point about, enterprise data model is sitting in the cupboard for two years to come out with the most beautiful data model ever. That’s basically a person going into an LLM now and saying you have no constraints. Here’s the industry. Gimme a data model from scratch that does everything .

It’s quicker. It’s not two years, but it’s just as unimplementable, that’s a bad word. One of the things we found was if we think about chat GT five and this idea that, we didn’t get the a GI that we expected, but what we got was a better interface. So instead of having to decide what type of LLM foundational model you wanted to use, you tell it what you want to achieve and it works out which model is the best fit for you. And one of the things we found when we were experimenting was. If we had one agent, our agent’s called 80. If we asked her to do everything, she was okay at it, but she wasn’t great when we broke her out into sub-agents. So we had agent, 80, the data modeler, 80, the what we call change rules, like the ability to write ETL and we gave her a clearer opinions and a clearer boundary.

We, we got a really good uplift in the accuracy, right? And the the evals that we got back in terms of, we got better responses that made our lives easier. so of the things when I’m teaching my canvas I actually use something that Lawrence talked about, right? Which is modeling based on source, modeling based on report or modeling based on business process. It’s one of the things that stuck with me for many years. And so now I’m thinking based on that. What we really probably need is an agent that we can go to and say give us a model based on the source system. And another agent. We can say, give us a model based on the core business events or business processes, or the who does what. And a third one where we go give us a model based on outcome, and then we give it the constraints of what we actually wanna achieve in the next iteration. What do we actually have to deliver and we get it to model it for us. So based on those three, tell us what the actual optimal model is to achieve this outcome and then stress test it for me. So that is getting a bunch of inputs, right? Because if I think about it. That’s what great modelers do. they take a stance, but then they always jump, your technical you source first, but then you go right now what are the core business events?

Customer orders, product good. I don’t need to worry about store ship’s product in this iteration, So I’m only modeling in that boundary. And then, okay, what do we know we have to deliver? Is it a dashboard, is it a data service? And then you are using that to iterate initial stance of that model until you get something that is fit for purpose.

If I think about it, that’s what we do as humans. So that’s probably the process we need to encourage the L LMS to do. What do you think?

Andy: I like that idea of asking each of those models to come up with their specific version based on those constraints. You do it by source system, you do it by business process, and then getting them to antagonize each other in terms of. Getting to a realistic result, taking on board each of those aspects.

And what I did make me laugh in my head when you were talking about, a great modeler is that in the Rocky films there was Apollo Creed who said to Rocky that, you fight great, but I’m a great fighter. And I think that about the modeling domain as well.

you can point at a person and say, you model great, but I’m a great modeler. And that’s just built up through experience, That’s just built up through battle testing models that you’ve created over the years and iterating over those models over the years. And like you said, in a couple of points before.

About the LLMs generating these models and coming up with dimensional models because that’s what the prevalent documentation will have. Those models have been trained over that documentation, so those models will be like, ah, okay, this seems to be the most popular way of doing things. So then when those LLMs are generating a model based on a source system, it’s gonna be based on the context of them understanding that source system and what the output of that source system is.

I look at something like Dynamics, which has generally been something that is quite difficult to model when so much customization happens within the platform. There is no real vanilla implementation of dynamics, which means that the LLM is gonna have to. Understand business context, not just source system because I guess the source system is just going to have all these entities that it might think I don’t have any information, because those sorts of things haven’t been discussed before.

Before. And as human beings, I guess we can reason over those things and we can hypothesize and we can make a best guess and iterate over perhaps the LLM can’t really do that because it’s been trained on previous data, previous examples, and it doesn’t have the ability to think outta the box if it hasn’t encountered something before.

Yeah, interesting point there, Shane.

Shane: Although I would posit that it probably has encountered it because it’s got access to information outside of data warehouse, data modeling. So it’s gonna have all the books on Dynamics implementations, it’s gonna have all the blog posts of people who have customized it. But yeah, I get your point, especially things like SAP, where, who knows how that bloody thing works.

It’s it’s gonna have more knowledge than I do on that. But less knowledge than an enterprise. SAP data modeler, who does it for a thing. so again, I think it comes back to being clear about when you want to provide an opinion when you don’t. So when you wanna provide an opinion, ‘cause it’s important that the LLM or the agent stays within that boundary for you or where you just leave it because it in theory has access to more expertise and knowledge than you have in that, in a specific space. And so if we go back to medallion, if we go back to layer data architectures, that’s actually a really good place where you might wanna be opinionated. Because if you are saying I want you to help me do a conceptual model, you probably don’t care about your layered architecture. But if you are saying, I want you to help me create physical models that I’m gonna implement, I know that. My layered architecture is I have a designed layer that is concepts, details, and events. And my consume layer, is a one big table. And I know that your cleanse layer is source specific data structured with data being cleansed. And your gold layer is, I’m guessing, a dimensional model,

if each of us had the same agent, but we put in those opinionated boundaries, we are gonna get back physical data models that are more fit for purpose for us to implement in our platforms of choice. And, realistically, if the L and N came back to you and said throw away your dimensional models and do one big table from cleansed, you are probably gonna look at that and go the cost of change is quite high.

I really need to understand why you’re making me do that. Because I have to learn a whole lot of new things and I have to rebuild everything. So I think, again being opinionated where you want to be opinionated, that makes sense is important. And then being free and hippie and open to whatever, the great a, a I agent in the world tells us as a start for 10 and then stress testing it with expertise.

I think that’s where we’re gonna end up. What do you think?

Andy: So I think that opinionated aspect is quite important in terms of how you approach the LLM because being opinionated about something means that you’ve got strong convictions in how you want to do something. Which then also means that someone else could be opinionated and have strong opinions in how they want to do something.

And as we know over the years when you’ve been working with other people that have experience doing these things, there might be a crossover, there might be some differences of opinions. How is the LLM going to know those differences of opinions that you can work out and collaborate on? Yes, it’s got this body of knowledge.

Hopefully the people with the opinions have been provided information, writing books, writing blog posts that the LLM can take on board and learn and use. But if I come to a project and use an LLM to create my medallion. I also ask it to create my data model based on my biases and based on my experience and the way that I wanna do things, I’m probably gonna guide the LLM to a certain conclusion.

Someone else is gonna come along and say I wanna start this project. I wanna lay my data out. Do you have some thoughts about how I wanna lay it out? I have used this in the past and then I wanna use data modeling to do it. It might come up with a slightly different outcome based on your own strength of conviction and your biases that you’ve put into the LLM.

It might come back with something that is generic at first, but then you might say to the LLM, actually. I don’t want my ization in silver, I want it in raw because that’s traditionally where I’ve put it. Whereas someone else could say, okay, why have you asked me to put my ization in raw? I’ve learned that it’s supposed to be in silver, but if we remove the LLM from this conversation for a second, it’s almost like we’d get the same outcome if it was just humans.

Because humans would go into an organization, implement a data platform. and based on their experience and biases, they would implement it a certain way, a different set of people could go into that same organization and implement it slightly different.

So I don’t think we’re necessarily solving the problem of these differences of opinions or the convictions that you have. It’s just using another tool to help, possibly reason, to help possibly have this entire body of knowledge and experience our disposal that we can interrogate and hopefully gets to a more human slash ai reasoned outcome.

That’s what I use it for. So I would use AI to help me model, and I Based on my experience, but I’m also not going to be arrogant enough to think I know everything there is to know about data modeling. So I also want to ask it questions about have we thought about it this way or have we thought about it the other way?

Just so that I can bring a little bit of critical thinking to it as well, and then evaluate the outcome. Yeah, it’s an interesting point there, Shane.

Shane: I like that. We’ve got to is, ‘cause it goes back to where we started, which is if you are new to the data domain and you wanna understand data modeling, there really isn’t a lot of great content apart from the Kimball stuff around. And there are other techniques. So now LMS give us access to ask questions and effectively get educated on what the art of the possible is.

What could I do? The second thing is, the mentors, the grumpy people who could just look at your model and tell you where you got a. Wrong. They’re not really so prevalent in organizations anymore for some reason. So again, using the LLM to provide that expertise, that rigor, that stress testing, that feedback is really valuable. There is another lens, but we don’t have time to go into detail, but I’ll just drop it in here ‘cause maybe we come back and have another chat about this idea when you’ve thought about it a bit, which is centralized platforms may disappear. So if we think about CRMs, they are centralized platforms that everybody uses with a core bunch of features, they’ve being, built in a way that become reusable. And what we’re seeing now with vibe coding is actually you could build one feature, one app that does one thing really well really quickly. and it doesn’t need to be part of a shared platform. And that’s gonna be a really interesting change in the market when that happens. That also means that you can buy code something that has 30,000 lines of code. And while you should know what it does, potentially, you don’t need to care because if it’s safe and secure, it does the job actually. You don’t need the expertise to understand how it does it. we apply that to the data domain and this idea of moving away from shared data platforms, that I’ve created this information product that does one thing well, and I’ve got the LLM to design, five different architectural data layers using 17 different data modeling techniques and a bunch of code, I don’t understand, as long as it doesn’t have to be reusable, then maybe we don’t care and again, I’m old. I find reuse really valuable. I find expertise and shared language really important. But maybe, the New World is one and done information products where the LMS are. Doing everything completely different each time you deliver a product. But we’re out of time.

So I’m gonna leave that one on the table, maybe have a think about it, and that could be a, a good follow up conversation around how would we use AI and LLMs to remove the need for reuse and shared platforms in the new Age Worlds. But before we finish off how do people find you? How do they see what you’re reading, what you’re writing?

You’re obviously spending a lot of time going to some great conferences what those conferences are and how they can find you.

Andy: Yeah, so I think the first thing is Link Tree. So Link Tree is just is basically my go-to in terms of, I. A jumping off point for people to find me. So that’s basically, link Tree slash Andy Cutler, so A-N-D-Y-C-U-T-L-E-R ‘cause that’ll take you to my company. So data high.com.

That’ll take you to my community blog, which is serverless sql.com. There’s my Blue Sky account, there’s YouTube, which is data high, and then my LinkedIn as well. So the Link Tree, Andy Cutler will take you to everything You need to do most of the conferences. I am in the Microsoft space and predominantly fabric, which is, which is where I, I spend, as the Fresh Prince of Bel Air would say most of my days.

The next conference is over in Oslo, so that is fabric February. So that’s gonna be over in Oslo. There’s obviously SQL Bits, which is, one of the UK’s biggest data conferences as well. so I would say, I generally post on LinkedIn blog posts, opinions, lots of conversation as well.

And as I said, yeah, Tre Andy Cutler, that’s where you’ll find me.

Shane: Excellent. Alright, hey, thanks for a great chat around AI and LMS and data modeling and I hope everybody has a simply magical day.

«oo»

Stakeholder - “Thats not what I wanted!”

Data Team - “But thats what you asked for!”

Struggling to gather data requirements and constantly hearing the conversation above?

Want to learn how to capture data and information requirements in a repeatable way so stakeholders love them and data teams can build from them, by using the Information Product Canvas.

Have I got the book for you!

Start your journey to a new Agile Data Way of Working.